Archive

Source code chapter of ‘evidence-based software engineering’ reworked

The Source code chapter of my evidence-based software engineering book has been reworked (draft pdf).

When writing the first version of this chapter, I was not certain whether source code was a topic warranting a chapter to itself, in an evidence-based software engineering book. Now I am certain. Source code is the primary product delivered for a software system, and it takes up much of the available cognitive effort.

What are the desirable characteristics that source code should have, to minimise production costs per unit of functionality? This is what an evidence-based chapter on source code is all about.

The release of this chapter completes my second pass over the material. Readers will notice the text still contains ... and ?’s. The third pass will either delete these, or say something interesting (I suspect mostly the former, because of lack of data).

Talking of data, February has been a bumper month for data (apologies if you responded to my email asking for data, and it has not appeared in this release; a higher than average number of people have replied with data).

The plan is to spend a few months getting a beta release ready. Have the beta release run over the summer, with the book in the shops for Christmas.

I’m looking at getting a few hundred printed, for those wanting paper.

The only publisher that did not mind me making the pdf freely available was MIT Press. Unfortunately one of the reviewers was foaming at the mouth about the things I had to say about software engineering researchers (it did not help that I had written a blog post containing a less than glowing commentary on academic researchers, the week of the review {mid-2017}); the second reviewer was mildly against, and the third recommended it.

If any readers know the editors at MIT Press, do suggest they have another look at the book. I would rather a real publisher make paper available.

Next, getting the ‘statistics for software engineers’ second half of the book ready for a beta release.

Source code has a brief and lonely existence

The majority of source code has a short lifespan (i.e., a few years), and is only ever modified by one person (i.e., around 60% of functions).

Literate programming is all well and good for code written to appear in a book that the author hopes will be read for many years, but this is a tiny sliver of the source code ecosystem. The majority of code is never modified, once written, and does not hang around for very long; an investment in source code futures will make a loss unless the returns are spectacular.

What evidence do I have for these claims?

There is lots of evidence for the code having a short lifespan, and not so much for the number of people modifying it (and none for the number of people reading it).

The lifespan evidence is derived from data in my evidence-based software engineering book, and blog posts on software system lifespans, and survival times of Linux distributions. Lifespan is short because packages are updated, and third-parties change/delete APIs (things may settle down in the future).

People who think source code has a long lifespan are suffering from survivorship bias, i.e., there is a small percentage of programs that are actively used for many years.

Around 60% of functions are only ever modified by one author; this figure is based on a study of the change history of functions in Evolution (114,485 changes to functions over 10 years) and Apache (14,072 changes over 12 years), and on a study investigating the number of people modifying files in Eclipse. Pointers to other studies are welcome.

One consequence of the short life expectancy of source code is that any investment made with the expectation of saving on future maintenance costs needs to return many multiples of the original investment. When many programs don’t live long enough to be maintained, those with a long lifespan have to pay back the original investments made in all the source that quickly disappeared.
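The break-even arithmetic can be sketched in a few lines. This is a simplified model, not something taken from the studies cited: it assumes the up-front investment is spread evenly over all code, and that only the fraction of code surviving long enough to be maintained returns anything. The survival fractions are hypothetical.

```python
def required_return_multiple(survival_fraction: float) -> float:
    """Multiple of the up-front investment that surviving code must return
    for the investment across ALL code to break even."""
    if not 0.0 < survival_fraction <= 1.0:
        raise ValueError("survival_fraction must be in (0, 1]")
    return 1.0 / survival_fraction


# Hypothetical fractions of code that live long enough to be maintained.
for fraction in (0.5, 0.2, 0.1):
    multiple = required_return_multiple(fraction)
    print(f"{fraction:.0%} survives -> surviving code must return {multiple:.0f}x")
```

Under this model, the smaller the surviving fraction, the larger the multiple the survivors have to earn.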

One benefit of short life expectancy is that most coding mistakes don’t live long enough to trigger a fault experience; the code containing the mistake is deleted or replaced before anybody notices the mistake.

Update: a few days later

I was remiss in not posting some plots for people to look at (code+data).

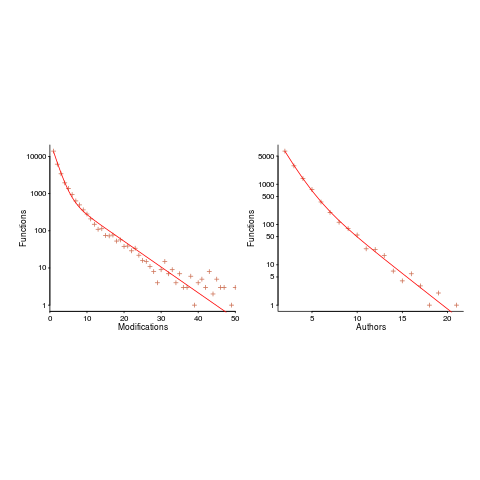

The plot below shows the number of functions, in Evolution, modified a given number of times (left), and functions modified by a given number of authors (right). The lines are a bi-exponential fit.

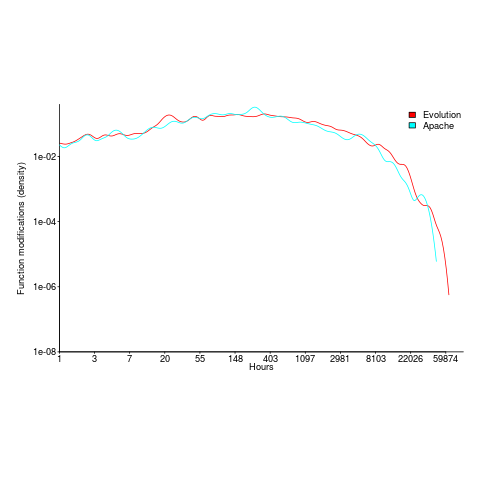

What is the interval (in hours) between modifications of a function? The plot below has a logarithmic x-axis, and is sort-of flat over most of the range (you need to squint a bit). This is roughly saying that the probability of modification in hours 1-3 is the same as in hours 3-7, and hours 7-15, etc (i.e., keep doubling the hour interval). At around 10,000 hours function modification probability drops like a stone.
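A flat line on a logarithmic x-axis corresponds to a density proportional to 1/t, and for such a density every doubling interval carries the same probability. The following is an idealised sketch of that property, not a fit to the Evolution data: the range of 1 to 8,192 hours and the exact power-of-two bin edges are assumptions chosen to keep the arithmetic clean.

```python
import math


def log_uniform_mass(lo: float, hi: float, t_min: float, t_max: float) -> float:
    """Probability that an interval falls in [lo, hi] when its density is
    proportional to 1/t on [t_min, t_max]."""
    return math.log(hi / lo) / math.log(t_max / t_min)


T_MIN, T_MAX = 1.0, 8192.0                 # hypothetical range, in hours
edges = [2.0 ** k for k in range(14)]      # 1, 2, 4, ..., 8192
masses = [log_uniform_mass(a, b, T_MIN, T_MAX) for a, b in zip(edges, edges[1:])]

for (a, b), mass in zip(zip(edges, edges[1:]), masses):
    print(f"hours {a:6.0f}-{b:6.0f}: {mass:.4f}")
```

Each of the 13 doubling bins receives the same share of the probability, which is what a flat line over a log-scaled axis is saying.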

Source code chapter added to “Evidence-based software engineering using R”

The Source Code chapter of my evidence-based software engineering book has been added to the draft pdf (download here).

This chapter has suffered from coming last and there is still lots of work to be done. Almost all the source code related data has been plundered to fill up earlier chapters. Some data did not make the cut-off for release of the draft; a global review will probably result in some data migrating back to this chapter.

When talking to developers about the book I am constantly being asked ‘what is empirical software engineering?’ My explanation uses the phrase ‘evidence-based’, which everybody seems to immediately understand. It is counterproductive having a title that has to be explained, so I have changed the title to “Evidence-based Software Engineering using R”.

What is the purpose of a chapter discussing source code in a book on evidence-based software engineering? Source code is obviously an essential component of the topics discussed in the other chapters, but what is so particular to source code that it could not be said elsewhere? Having spent most of my professional life studying source code, first as a compiler writer and then involved with static analysis, am I just being driven by an attachment to the subject?

My view of source code is very different from most other developers: when developers talk about code, they spend most of the time talking about how they do things, when I talk about code I spend most of the time talking about how other developers do things (I’m a mongrel writer of code). Developers’ blinkered view of code prevents them seeing bigger pictures. I take a Gricean view of code and refrain from using meaningless marketing terms such as maintainability, readability and testability.

I have lots of source code data of interest to compiler writers (who are not the target audience) and I have lots of data related to static analysis (tool developers are not the audience). The target audience is professional software developers and hopefully what has been written is of interest to that readership.

I have been promised all sorts of data. Hopefully some of it will arrive. If somebody tells you they promised to send me data, please encourage them to take some time to sort out the data and send it.

As always, if you know of any interesting software engineering data, please tell me.

Finalizing the statistical analysis material in the second half of the book (released almost two years ago) next.

First use of: software, software engineering and source code

While reading some software related books/reports/articles written during the 1950s, I suddenly realized that the word ‘software’ was not being used. This set me off looking for the earliest use of various computer terms.

My search process consisted of using pdfgrep on my collection of pdfs of documents from the 1950s and 60s, and looking in the index of the few old computer books I still have.

Software: The Oxford English Dictionary (OED) cites an article by John Tukey published in the American Mathematical Monthly during 1958 as the first published use of software: “The ‘software’ comprising … interpretive routines, compilers, and other aspects of automative programming are at least as important to the modern electronic calculator as its ‘hardware’.”

I have a copy of the second edition of “An Introduction to Automatic Computers” by Ned Chapin, published in 1963, which does a great job of defining the various kinds of software. Earlier editions were published in 1955 and 1957. Did these earlier editions also contain various definitions of software? I cannot find any reasonably priced copies on the second-hand book market. Do any readers have a copy?

Update: I now have a copy of the 1957 edition of Chapin’s book. It discusses programming, but there is no mention of software.

Software engineering: The OED cites a 1966 “letter to the ACM membership” by Anthony A. Oettinger, then ACM President: “We must recognize ourselves … as members of an engineering profession, be it hardware engineering or software engineering.”

The June 1965 issue of COMPUTERS and AUTOMATION, in its Roster of organizations in the computer field, has the list of services offered by Abacus Information Management Co.: “systems software engineering”, and by Halbrecht Associates, Inc.: “software engineering”. This pushes the first use of software engineering back by a year.

Source code: The OED cites a 1965 issue of Communications ACM: “The PUFFT source language listing provides a cross reference between the source code and the object code.”

The December 1959 Proceedings of the EASTERN JOINT COMPUTER CONFERENCE contains the article: “SIMCOM – The Simulator Compiler” by Thomas G. Sanborn. On page 140 we have: “The compiler uses this convention to aid in distinguishing between SIMCOM statements and SCAT instructions which may be included in the source code.”

Update: The October 1956 issue of Computers and Automation contains an extensive glossary. It does not have any entries for software or source code.

Running pdfgrep over the archive of documents on bitsavers would probably throw up all manner of early uses of software related terms.

The paper: What’s in a name? Origins, transpositions and transformations of the triptych Algorithm -Code -Program is a detailed survey of the use of three software terms.

Collecting all of the publicly available source code

Collecting together all the software ever written is impossible, but collecting everything that can be found would create something very useful (since I have always been interested in source code analysis, my opinion is biased).

Collecting together source available on the internet is easy. Creating a copy of Github is one of the first actions of anybody with ambitions of collecting lots of source (back in the day it was collecting shareware on 5¼″, then 3½″ floppy discs, then CDs and then DVDs).

The very hard to collect items “exist” on line-printer paper, punched cards, rolls of punched tape and mag tape. These are the items where serious collectors should concentrate their efforts (NASA lost a lot of Voyager data when magnetic particles fell off the plastic strips of tape reels in storage, because the adhesive had degraded).

The Internet Archive is doing a great job of collecting and making available ‘antique’ source code (old computer games is a popular genre; other collectors concentrate on being able to execute ROM images of games), but they are primarily US based.

Collecting the World’s source code requires collection organizations in every country. Collecting old code is a people intensive business and requires lots of local knowledge.

A new source code collection organization has recently been set up in France; Software Heritage currently aims to collect all software that is publicly available. So far they have done what everybody else does: made a copy of Github and a couple of the well-known source repos.

I hope this organization is not just the French government throwing money at another one-upmanship US vs. France project.

If those involved are serious about collecting source code, rather than enjoying the perks of a tax-funded show project, they will realise that lots of French specific source code is dotted around the country needing to be collected now (before the media decomposes and those who know how to read it die).

Human vs automatically generated source code: an arms race?

How well do I understand the process of writing source, as performed by human developers? If I could write a program that could generate source code that was indistinguishable from human written code, then I think I could claim to have a decent understanding of the human processes.

An important question is the skill of the entity attempting to distinguish automatically generated and human written code. As of today I think I could fool existing tools (mostly because such tools don’t really exist; n-gram analysis is really all there is), but a human developer with a smattering of coding experience would not be fooled.

I think I know enough to write a tool that could generate single function definitions that would fool most developers, but not a moderately sophisticated analysis tool (I don’t know enough to combine multiple functions into a plausible call graph).

At the CREST workshop today I met Martin Monperrus who shared my view that the only way to prove an understanding of source is to be able to generate it; other people at the workshop seemed more interested in the detection side of things.

Perhaps the time is ripe to kick off a generator/detector arms race; this would certainly increase our knowledge of the characteristics of human written code.

What counts as automatically generated code? Printing complete function definitions mined from Github is obviously not what most people would associate with the concept of automatic source generation. What about printing statements mined from Github (with each statement coming from a different function definition)? Well, if people could make that work, then good luck to them (it hardly seems worth the bother because most statements are very simple and easily generated on a token by token basis).

Should functions be judged in isolation, or should a detector get to see multiple instances of each case (which would make the detector’s life easier)? It might be best to go with whatever rules make for an interesting arms race.

To start the ball rolling, here is my first entry to the generator side of the race (a rather minimal entry, but still the leader in its field; the recurrent neural network trained on the Linux source would easily beat it, but my interest is in understanding developer behavior and will leave it to others to go down the neural network path).

Deluxe Paint: 30 year old C showing its age in places

Electronic Arts have just released the source code of a version of Deluxe Paint from 1986.

The C source does not look anything like my memories of code from that era, probably because it was written on and for the Amiga. The Amiga used a Motorola 68000 cpu, which meant a flat address space, i.e., no peppering the code with near/far/huge data/function declaration modifiers (i.e., which cpu register will Sir be using to call his functions and access his data).

Function definitions use what is known as K&R style, because the C++ way of doing things (i.e., function prototypes) was just being accepted into C by the ANSI committee (the following is from BLEND.C):

    void DoBlend(ob, canv, clip)
    BMOB *ob; BoxBM *canv; Box *clip;
    {...}

The difference between the two ways of doing things (apart from the syntax of putting the type information outside/inside the brackets) is that the compiler does not do any type checking between definition/call for the K&R style. So it is possible to call DoBlend with any number of arguments having any defined type and the compiler will generate code for the call assuming that the receiving end is expecting what is being passed. Having an exception raised in the first few lines of a function used to be a sure sign of an argument mismatch, while having things mysteriously fail well into the body of the code regularly ruined a developer’s day. Companies sold static analysis tools that did little more than check calls against function definitions.

The void on the return type is unusual for 1986, as is the use of enum types. I would have been willing to believe that this code was from 1996. The clue that this code is from 1986 and not 1996 is that int is 16 bits, a characteristic that is implicit in the code (the switch to 32 bits started in the early 1990s when large amounts of memory started to become cheaply available in end-user systems).

So the source code for a major App, in its day, is smaller than the jpegs on many’ish web pages (jpegs use a compressed format and the zip file of the source is 164K). The reason that modern Apps contain orders of magnitude more code is that our expectation of the features they should contain has grown in magnitude (plus marketing likes bells and whistles and developers enjoy writing lots of new code).

When the source of one of today’s major Apps is released in 30 years’ time, will people be able to recognise patterns in the code that localise it to being from around 2015?

Ah yes, that’s how people wrote code for computers that worked by moving electrons around, not really a good fit for photonic computers…

Source code usage via n-gram analysis

In the last 18 months or so source code analysis using n-grams has started to take off as a research topic, with most of the current research centered around lexical tokens as the base unit. Using n-grams to get an idea of the main kinds of patterns that occur in code is one of those techniques that has long been spread via word of mouth in industry (I used to use it a lot to find common patterns in generated code, with the intent of optimizing that common pattern).

At the general token level n-grams can be used to suggest code completion sequences in IDEs and syntax error recovery tokens for compilers (I have never seen this idea implemented in a production compiler). Token n-grams are often very context specific, for instance the logical binary operators are common in control-expressions (e.g., in an if-statement) and rare outside of that context. Of course any context can be handled if the n in n-gram is very large, but every increase in n requires a lot more code to be counted, to separate out common features from the noise of many single instances ((n+1)-grams require roughly a factor of T more tokens than n-grams, where T is the number of unique tokens, for the same signal/noise ratio).
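Counting token n-grams takes very little code. The sketch below is a minimal illustration, not the tooling used for any of the measurements discussed here: the regular-expression lexer is deliberately crude, and the sample source string is hypothetical.

```python
import re
from collections import Counter

# Deliberately crude lexer (an assumption, not a real C tokenizer):
# identifiers, integers, a few two-character operators, then any
# other single non-space character.
TOKEN_RE = re.compile(r"[A-Za-z_]\w*|\d+|&&|\|\||[<>=!]=|\S")


def tokenize(source):
    """Split source text into a flat list of lexical tokens."""
    return TOKEN_RE.findall(source)


def ngram_counts(tokens, n):
    """Count every run of n consecutive tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


SAMPLE = "if (a && b) x = 1; if (c && d) y = 2;"  # hypothetical input
tokens = tokenize(SAMPLE)
bigrams = ngram_counts(tokens, 2)
unique_tokens = len(set(tokens))  # T in the text

print(bigrams.most_common(3))
print("possible bigrams:", unique_tokens ** 2)
print("possible trigrams:", unique_tokens ** 3)
```

The last two lines show why larger n needs so much more code: the number of distinct n-grams that could occur grows by a factor of T with each increment of n, so the counts per n-gram thin out accordingly.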

At a higher conceptual level n-grams of language components (e.g., array/member accesses, expressions and function calls) provide other kinds of insights into patterns of code usage. Probably more than you want to know of this kind of stuff in table/graphical form and some Java data for those wanting to wave their own stick.

An n-gram analysis sometimes involves a subset of the possible tokens, for instance treating sequences of function/method API calls that do not match any of the common sequences as possible faults (e.g., a call that should have been made, perhaps closing a file, was omitted).
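A minimal sketch of this idea, restricted to pairs of adjacent API calls: count how often each pair occurs across many call sequences and flag the pairs that are rare. The traces, the `<END>` sentinel and the `min_count` threshold are all hypothetical choices made for illustration; published tools use more elaborate models.

```python
from collections import Counter

END = "<END>"  # hypothetical sentinel marking the end of a call sequence


def call_bigrams(trace):
    """Adjacent pairs of calls, padded so a missing final call is visible."""
    padded = list(trace) + [END]
    return list(zip(padded, padded[1:]))


def rare_sequences(traces, min_count=2):
    """Map trace index -> call pairs seen fewer than min_count times overall."""
    counts = Counter(pair for trace in traces for pair in call_bigrams(trace))
    flagged = {}
    for index, trace in enumerate(traces):
        rare = [pair for pair in call_bigrams(trace) if counts[pair] < min_count]
        if rare:
            flagged[index] = rare
    return flagged


# Hypothetical traces: four well-behaved callers, one that never closes the file.
traces = [["fopen", "fread", "fclose"]] * 4 + [["fopen", "fread"]]
suspects = rare_sequences(traces)
print(suspects)
```

Only the last trace is flagged, because ending a sequence straight after `fread` is something none of the other callers do.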

It is easy to spot n-gram researchers who have no real ideas of their own and are jumping on the bandwagon; they spend most of their time talking about entropy.

Like all research topics, suggested uses for n-grams sometimes get rather carried away with their own importance. For instance, the proposal that common code sequences are “natural” and have a style that must be followed by other code. Common code sequences are common for a reason and we ought to find out what that reason is before suggesting that developers blindly mimic this usage.

Entropy: Software researchers’ go-to topic when they have no idea what else to talk about

If I’m reading a software engineering paper or blog and it starts discussing entropy my default behavior is to stop reading and move along. Entropy is what software researchers talk about when they cannot think of anything else to say about a topic.

The term entropy was first used in thermodynamics, around the mid-1800s, to define the relationship between the temperature of a body and its heat content. Once people found out that molecules could exist in different energy states within solids they realized that temperature was actually an average of the different energies of the molecules in a body and that heat content was the sum of the vibrational and kinetic energies of the molecules within a body. These insights enabled entropy to also be defined in terms of the number of states that the molecules within a body could exist in, and the probability of these states being occupied; statistical mechanics, a specialist field within thermodynamics, was born. Note, it is a common mistake to associate entropy with disorder (and let’s bang that point home).

The problem with the term Entropy, outside of thermodynamics, is that people conflate, confuse and co-mingle various concepts associated with it. In fact this is one of the reasons Shannon chose to associate the word Entropy with his new theory of information, as he told it: Von Neumann told me, “You should call it entropy, for two reasons. In the first place your uncertainty function has been used in statistical mechanics under that name, so it already has a name. In the second place, and more important, nobody knows what entropy really is, so in a debate you will always have the advantage.”

While Shannon’s paper A Mathematical Theory of Communication is his most cited work, the second paper Prediction and Entropy of English has probably had a bigger impact in the world of techno-babble.

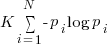

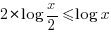

Shannon’s famous entropy formula is $H = -K\sum_{i=1}^{n} p_i \log p_i$, where there are $n$ possible events with $p_i$ the probability of event $i$ occurring and $K$ some constant. The derivation and use of this formula depends on various assumptions being true, perhaps the most important being that successive symbols are independent; if the next symbol depends on what went before it the formula is more complicated and we are now dealing with conditional entropy.
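For reference, Shannon's formula is simple to evaluate once symbol probabilities are estimated from counts. The sketch below does exactly that and nothing more: it treats every symbol as an independent draw, which is the assumption questioned above, so it shows what the formula computes rather than endorsing its use on source code. The function name and default constants are illustrative choices.

```python
import math
from collections import Counter


def shannon_entropy(symbols, k=1.0, base=2.0):
    """Evaluate -k * sum(p_i * log(p_i)), with p_i estimated from counts.

    Assumes successive symbols are independent; any dependence between
    neighbouring symbols is ignored.
    """
    counts = Counter(symbols)
    total = sum(counts.values())
    return -k * sum((c / total) * math.log(c / total, base) for c in counts.values())


print(shannon_entropy("aabb"))      # two equally likely symbols
print(shannon_entropy("aaaa"))      # a single symbol, no uncertainty
print(shannon_entropy("abcdabcd"))  # four equally likely symbols
```

Note that `"abab"` and `"aabb"` get the same value: the formula only sees symbol frequencies, not the order in which symbols occur.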

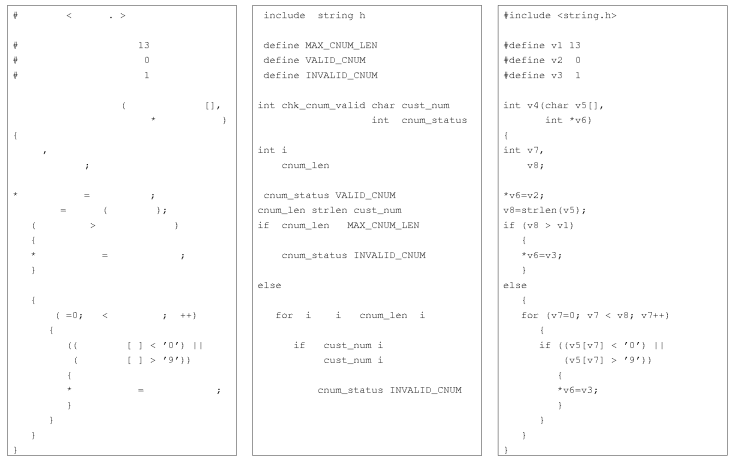

Source code, to quite a good approximation, consists of a sequence of symbols that alternate between symbols selected from two separate alphabets, the alphabet of punctuators/operators and the alphabet of ‘words’. The following shows the source of a program separated into these two sets of symbols.
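The two-alphabet view can be tried on a line of code with a few lines of Python. This is a rough sketch under stated assumptions: a 'word' is taken to be an identifier or integer, everything else that is not whitespace counts as a punctuator, and the sample statement is hypothetical.

```python
import re

# Words (identifiers, integers) or runs of punctuation not split by spaces.
TOKEN_RE = re.compile(r"[A-Za-z_]\w*|\d+|[^\w\s]+")


def classify(source):
    """Return (kind, token) pairs, kind being 'W' (word) or 'P' (punctuator)."""
    pairs = []
    for tok in TOKEN_RE.findall(source):
        kind = "W" if (tok[0].isalnum() or tok[0] == "_") else "P"
        pairs.append((kind, tok))
    return pairs


def alternation_ratio(pairs):
    """Fraction of adjacent token pairs that switch alphabet."""
    kinds = [kind for kind, _ in pairs]
    switches = sum(1 for a, b in zip(kinds, kinds[1:]) if a != b)
    return switches / (len(kinds) - 1) if len(kinds) > 1 else 0.0


SAMPLE = "int total = count(items) + 1;"  # hypothetical statement
pairs = classify(SAMPLE)
words = [tok for kind, tok in pairs if kind == "W"]
punctuators = [tok for kind, tok in pairs if kind == "P"]

print(words)
print(punctuators)
print(alternation_ratio(pairs))
```

In this sample the alternation is broken in two places, by `int total` (two words in a row) and by `) +` (two punctuators in a row), which is the sense in which alternation is only an approximation.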

Source code, created as it is from an alternating sequence of two different symbol sets, has none of the characteristics assumed in Shannon’s formula derivation or by Maxwell & Boltzmann in their statistical mechanics derivation. Now source code might be usefully analysed using n-grams, but unless we let the n go to infinity Shannon’s formula does not get a look-in.

Let’s drive another nail into this source code entropy nonsense.

Entropy techno-babble and the second law of thermodynamics are frequent bedfellows. This law specifies that the entropy of a closed system never decreases, it either stays the same or increases. Now very many source code attributes have been found to be proportional to the log of the amount of code (as measured in lines); this is potentially a big problem for those wanting to calculate the entropy of source (it is a repeat of the Gibbs paradox).

If a function (which is claimed to have some entropy) is split in half to create two functions, the second law of thermodynamics requires that the sum of the entropies of the two new functions should be at least the entropy of the original function. Entropy cannot scale logarithmically because splitting a function in two would reduce total entropy. So source code entropy has to be an attribute that does not scale logarithmically, which goes against most of what is known about source.

If source code does not follow Maxwell–Boltzmann statistics (the equations obtained by working through the ideas behind statistical mechanics), what kind of statistics might it follow? Strange as it might sound, quantum mechanics offers some pointers. Fermi–Dirac and Bose–Einstein statistics are the result of working through the mathematics of small numbers of particles having particular attributes; most functions are small and far removed from the large number of items assumed in the derivation of Maxwell–Boltzmann statistics.

I appreciate that by pointing out the parallel with quantum mechanics I am running the risk of entanglement replacing entropy as the go-to topic for researchers scraping the barrel for something to talk about. But at least the mathematics of small numbers of items obeying certain rules is a model that is closer to source code.

Source code will soon need to be radiation hardened

I think I have discovered a new kind of program testing that may soon need to be performed by anybody wanting to create ultra-reliable software.

A previous post discussed the compiler related work being done to reduce the probability that a random bit-flip in the memory used by an executing program will result in a change in behavior. At the moment 4G of ram is expected to experience 1 bit-flip every 33 hours due to cosmic rays and the rate of occurrence is likely to increase.

Random corruptions on communications links are protected against by various kinds of CRC checks. But these checks don’t catch every corruption; some get through.

Research by Artem Dinaburg looked for, and found, occurrences of bit-flips in domain names appearing within HTTP requests, e.g., a page from the domain ikamai.net being requested rather than from akamai.net. A subsequent analysis of DNS queries to VERISIGN’S name servers found “… that bit-level errors in the network are relatively rare and occur at an expected rate.” (the bit errors were thought to occur inside routers and switches).
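The ikamai.net example is a single flipped bit: 'a' is 0x61, 'i' is 0x69, and the two differ only in the bit with value 0x08. The following sketch enumerates every name reachable from a domain by one bit-flip and keeps those that still look like domain names. It is an illustration of the idea, not Dinaburg's tooling, and the filter (lowercase letters, digits, hyphen, same number of dots) is a simplifying assumption.

```python
import string

# Simplified notion of a "plausible" name (an assumption): lowercase letters,
# digits, hyphen and dot only. Flips that merely change letter case are
# dropped, since domain names are case-insensitive.
ALLOWED = set(string.ascii_lowercase + string.digits + "-.")


def single_bit_flips(name):
    """Yield every byte string that differs from name in exactly one bit."""
    data = name.encode("ascii")
    for index, byte in enumerate(data):
        for bit in range(8):
            yield data[:index] + bytes([byte ^ (1 << bit)]) + data[index + 1:]


def plausible_domains(name):
    """Single-bit-flip variants of name that still look like a domain name."""
    found = set()
    for variant in single_bit_flips(name):
        text = variant.decode("latin-1")  # maps every byte to one character
        if set(text) <= ALLOWED and text.count(".") == name.count("."):
            found.add(text)
    return sorted(found)


variants = plausible_domains("akamai.net")
print(len(variants), "plausible variants, including:", variants[:5])
```

Running it on `akamai.net` produces a list that includes `ikamai.net`, along with the other names one flipped bit away.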

JavaScript is the web scripting language supported by all the major web browsers and the source code of JavaScript programs is transmitted, along with the HTML, for requested web pages. The amount of JavaScript source can dwarf the amount of HTML in a web page; measurements from four years ago show users of Facebook, Google Maps and Gmail receiving 2M bytes of JavaScript source when visiting those sites.

If all the checksums involved in TCP/IP transmission are enabled the theoretical error rate is 1 in $10^{17}$ bits. For 1 billion users visiting Facebook on average once per day and downloading 2M of JavaScript per visit, this is an expected bit-flip rate of once every 5 days somewhere in the world; not really something to worry about.
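The arithmetic behind the "once every 5 days" figure can be checked by working backwards from the numbers in the text. The sketch below takes "2M" to mean 2,000,000 bytes (an assumption) and computes how many bits are transferred between successive undetected flips.

```python
USERS_PER_DAY = 1_000_000_000   # one visit per user per day, from the text
BYTES_PER_VISIT = 2_000_000     # "2M", taken as 2 * 10**6 bytes (assumption)
DAYS_BETWEEN_FLIPS = 5          # from the text

bits_per_day = USERS_PER_DAY * BYTES_PER_VISIT * 8
bits_per_flip = bits_per_day * DAYS_BETWEEN_FLIPS

print(f"bits transferred per day:   {bits_per_day:.1e}")
print(f"bits per undetected flip:   {bits_per_flip:.1e}")
```

One flip per 8 x 10^16 bits is of the order of 10^17, so the quoted error rate and the 5 day figure are consistent with each other.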

There is plenty of evidence that the actual error rate is much higher (because, for instance, some checksums are not always enabled; see papers linked to above). How much worse does the error rate have to get before developers need to start checking that a single bit-flip to the source of their JavaScript program does not result in something nasty happening?
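What such a check might look like can be sketched by flipping each bit of a program's source in turn and seeing which corrupted versions still get past the parser; those are the ones that could run and silently misbehave. The sketch uses Python source and Python's built-in `compile` as a stand-in for JavaScript and a JavaScript parser, and the three-line program is hypothetical. A real check would also have to run the survivors to see what they do.

```python
def bit_flip_mutants(source):
    """Yield every decodable variant of source that differs by one flipped bit."""
    data = source.encode("utf-8")
    for index in range(len(data)):
        for bit in range(8):
            mutated = bytearray(data)
            mutated[index] ^= 1 << bit
            try:
                yield mutated.decode("utf-8")
            except UnicodeDecodeError:
                continue  # corruption produced invalid UTF-8


def still_compiles(text):
    """True if the corrupted source is still accepted by the parser."""
    try:
        compile(text, "<mutant>", "exec")  # parses only, does not execute
        return True
    except (SyntaxError, ValueError):
        return False


# Hypothetical program under test.
SOURCE = "limit = 10\nif count > limit:\n    count = limit\n"

mutants = list(bit_flip_mutants(SOURCE))
survivors = [m for m in mutants if still_compiles(m)]
print(f"{len(survivors)} of {len(mutants)} single-bit corruptions still parse")
```

Survivors include variants such as `limit = 11` and `count < limit`: both are valid programs with different behaviour, which is exactly the kind of corruption a syntax check alone will not catch.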

What we really need is a way of automatically radiation hardening source code.
