
Semantic vs phonetic similarity for word pairs: a weekend investigation

The Computational Semantics hackathon was one of the events I attended last weekend. Most of the suggested problems either looked like they could not reasonably be done in a weekend (it ran 10:00-17:00 on both days; I know, academic hacks) or were uninspiring coding problems. Chatting to some of the academics present threw up an interesting idea that involved comparing word pair semantic and phonetic similarity (I have written about my interest in sounds-like and source code identifiers).

Team Semantic-sounds consisted of Pavel and yours truly (code and data).

The linguists I chatted to seemed to think that there would be a lot of word pairs that sounded alike and were semantically similar; I did not succeed in getting any of them to put a percentage to “a lot”. From the human communication point of view, words that both sound alike and have a similar meaning are likely to be confused with each other; should such pairs come into existence they are likely to quickly disappear, at least if the words are in common usage. Sound symbolism related issues got mentioned several times, but we did not have any data to check out the academic enthusiasm.

One of the datasets supplied by the organizers was word semantic similarity data extracted from the Google news corpus. The similarity measure is based on similarity of occurrence, e.g., the two sentences “I like licking ice-cream” and “I like eating ice-cream” suggest a degree of semantic similarity between the words licking and eating; given enough sentences containing licking and eating there are clever ways of calculating a value that can be viewed as a measure of word similarity.
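To make "similarity of occurrence" concrete, here is a toy sketch that counts the words appearing near each word and compares the resulting count vectors using cosine similarity. This is an assumption-laden illustration only: it is not the method used to produce the Google news data, and the names `context_vectors` and `cosine`, plus the window size, are made up for the example.

```python
from collections import Counter
from math import sqrt


def context_vectors(sentences, window=2):
    """Map each word to a Counter of the words seen within `window` positions."""
    vectors = {}
    for sentence in sentences:
        words = sentence.lower().split()
        for i, word in enumerate(words):
            context = vectors.setdefault(word, Counter())
            lo = max(0, i - window)
            hi = min(len(words), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    context[words[j]] += 1
    return vectors


def cosine(a, b):
    """Cosine similarity of two sparse count vectors (0.0 if either is empty)."""
    dot = sum(count * b[key] for key, count in a.items() if key in b)
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


vectors = context_vectors(["I like licking ice-cream", "I like eating ice-cream"])
print(cosine(vectors["licking"], vectors["eating"]))
```

With only two sentences the numbers mean very little; the point is that words sharing the same neighbours end up with similar vectors.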

The data contained 72,000+ words, giving over 2.5 billion possible pairs (most having zero similarity). To prune this down a bit we took, for each word, the 150 other words that were most similar to it, giving around 10 million word pairs. Each word was converted to a phoneme sequence and a similarity distance calculated for each pair of phoneme sequences (which we called phonetic distance and claimed it was a measure of how similar the words sounded to each other).
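A minimal sketch of one way to compute such a phonetic distance, assuming each word has already been converted to a list of phoneme symbols. It uses Levenshtein distance with every phoneme difference given equal weight, and divides by the length of the longer sequence (my reading of "maximum distance"). The transcriptions are hand-written ARPAbet-style guesses for illustration and are not taken from the hackathon data.

```python
def levenshtein(a, b):
    """Edit distance between two sequences; insert, delete and substitute cost 1."""
    previous = list(range(len(b) + 1))
    for i, x in enumerate(a, 1):
        current = [i]
        for j, y in enumerate(b, 1):
            current.append(min(previous[j] + 1,              # delete x
                               current[j - 1] + 1,           # insert y
                               previous[j - 1] + (x != y)))  # substitute
        previous = current
    return previous[-1]


def phonetic_similarity(a, b):
    """1.0 for identical sequences, 0.0 when every position differs."""
    longest = max(len(a), len(b))
    if longest == 0:
        return 1.0
    return 1.0 - levenshtein(a, b) / longest


# Hypothetical transcriptions, for illustration only.
faultless = ["F", "AO", "L", "T", "L", "AH", "S"]
flawless = ["F", "L", "AO", "L", "AH", "S"]
print(phonetic_similarity(faultless, flawless))
```

Because word lengths are small integers, this measure can only take a limited set of values, which is one source of banding in plots of the results.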

The list of word pairs with high semantic/phonetic similarity was very noisy, with lots of pairs containing the same base word in plural, past tense or some other form, e.g., billion and billions. A Porter stemmer was used to remove all pairs where the words shared the same stem, reducing the list to 2.5 million pairs. Most of the noise now came from differences in British/American spelling. We removed all word pairs that contained a word that was not in the list of words that occurred in the common subset of the British and American dictionaries used by aspell; this reduced the list to half a million pairs.
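The two filtering steps can be sketched as follows. Note the assumptions: `crude_stem` is a deliberately simplistic stand-in and is not the Porter stemmer, and `dictionary` is a hypothetical set standing in for the words common to the British and American aspell dictionaries.

```python
def crude_stem(word):
    """Toy suffix stripper; a stand-in for a real stemmer such as Porter's."""
    for suffix in ("ing", "ed", "es", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[:-len(suffix)]
    return word


def filter_pairs(pairs, dictionary, stem=crude_stem):
    """Drop pairs that share a stem or contain a word missing from dictionary."""
    kept = []
    for first, second in pairs:
        if stem(first) == stem(second):
            continue  # same base word, e.g. billion/billions
        if first not in dictionary or second not in dictionary:
            continue  # e.g. a spelling used in only one dialect
        kept.append((first, second))
    return kept


pairs = [("billion", "billions"), ("faultless", "flawless"), ("colour", "color")]
dictionary = {"billion", "billions", "faultless", "flawless"}
print(filter_pairs(pairs, dictionary))
```

Passing the stemmer in as a parameter makes it easy to swap in a proper implementation later.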

The output contained some interesting pairs, including: faultless/flawless, astonishingly/astoundingly, abysmal/dismal and elusive/illusive. These look like rarely used words to me (not enough time to add in word frequency counts).

Some pairs had surprisingly low similarity, e.g., artifact/artefact (the British/American spelling equality idea has a far from perfect implementation). Is this lower than expected semantic similarity because there is a noticeable British/American usage difference? An idea for a future hack.

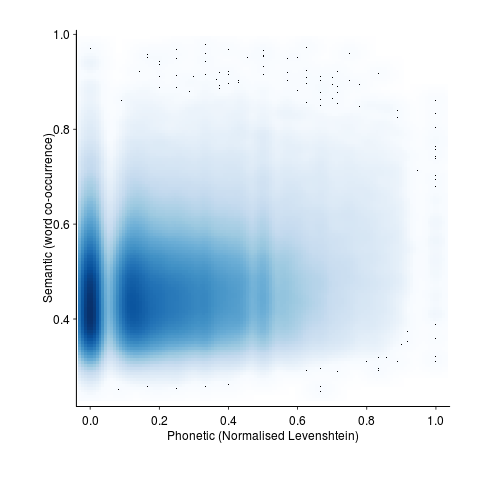

A smoothed scatter plot of semantic vs phonetic similarity (for the most filtered pair list) shows lots of semantically similar pairs that don’t sound alike, but a few that do (I suspect that most of these are noise that better stemming and spell checking will filter out). The following uses Levenshtein distance for phoneme similarity, normalised by the maximum distance for a given phoneme sequence with all phoneme differences having equal weight:

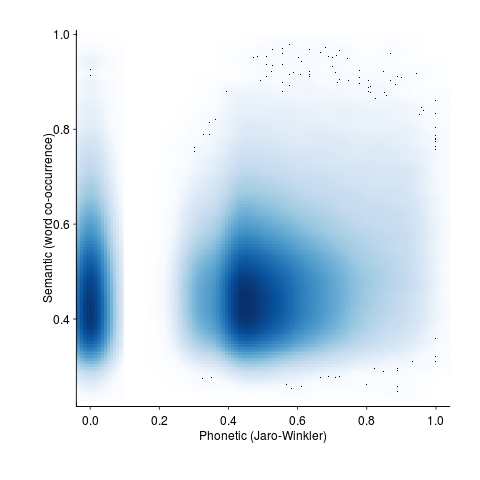

and using Jaro-Winkler distance (an alternative distance metric that is faster to calculate):

The empty band at low phonetic similarity is an artifact of the data being quantized (i.e., words contain a small number of components).

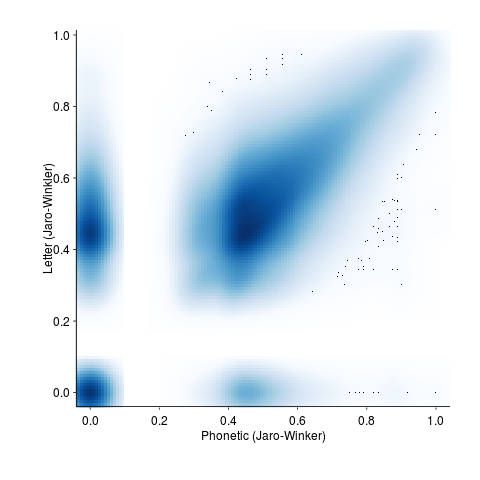

Is it worth going to the trouble of comparing phoneme sequences? Would comparing letter sequences be just as good? The following plot shows word pair letter distance vs phonetic similarity distance (there is a noticeable amount of off-diagonal data, i.e., for some pairs letter/phoneme differences are large):

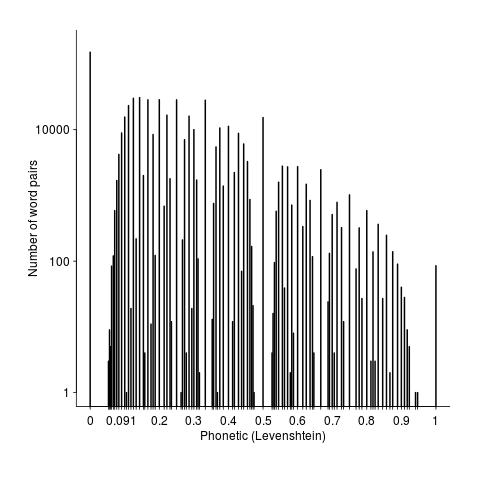

It’s always good to have some numbers to go with graphical data. The following is the number of pairs having a given phonetic similarity (remember the starting point was the top 150 most semantically similar pairs). The spikes are caused by the discrete nature of word components.

Squinting at the above it is possible to see an exponential decline as phonetic word similarity increases. It would be interesting to have enough data to display a meaningful 3D plot, perhaps a plane can be fitted (with a log scale on the z-axis).

Rather than using the Google news corpus data as the basis of word pair semantic similarity we could have used the synonym sets from Wordnet. This rather obvious idea did not occur to me until later Saturday and there was no time to investigate. How did the small number of people who created the Wordnet data come up with lists of synonyms? If they simply thought very hard they might have been subject to the availability bias, preferentially producing lists of synonyms that contained many words that sounded alike because those that did not sound alike were less likely to be recalled. Another interesting idea to check out at another hack.

It was an interesting hack and as often happens more new questions were raised than were answered.

Generating sounds-like and accented words

I have always been surprised by the approaches other people have taken to generating words that sound like a particular word or judging whether two words sound alike. The aspell program checks whether a letter sequence is in its dictionary; the Soundex algorithm is often used to compare whether two words sound alike and has the advantage of being very simple and delivering results that many people seem willing to accept. Over 25 years ago I wrote some software that used a phoneme based approach and while sorting through a pile of papers recently I came across an old report used as the basis for that software. I decided to implement a word sounds-like tool to show people how I think sounds-like should be done. To reduce the work involved this initial tool is based on what I already know, which in some cases is very out of date.
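For readers who have not met Soundex, the following is a sketch of the commonly described American variant: keep the first letter, map the remaining consonants to digits, drop vowels, collapse adjacent duplicates, and pad or truncate to four characters. It is included to show how little the algorithm knows about pronunciation, and is not part of my tool.

```python
CODES = {}
for letters, digit in (("BFPV", "1"), ("CGJKQSXZ", "2"), ("DT", "3"),
                       ("L", "4"), ("MN", "5"), ("R", "6")):
    for letter in letters:
        CODES[letter] = digit


def soundex(word):
    """Return the four character Soundex code of word ('' if no letters)."""
    letters = [c for c in word.upper() if c.isalpha()]
    if not letters:
        return ""
    code = letters[0]
    previous = CODES.get(letters[0], "")
    for letter in letters[1:]:
        digit = CODES.get(letter, "")
        if digit and digit != previous:
            code += digit
        # H and W do not separate letters having the same code; vowels do.
        if letter not in "HW":
            previous = digit
    return (code + "000")[:4]


for name in ("Robert", "Rupert", "Ashcraft", "Tymczak"):
    print(name, soundex(name))
```

Two words are treated as sounding alike when their codes match, which operates on letters rather than on any model of how the word is spoken.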

Phonemes are the basic units of sound and any sounds-like software needs to operate on a word’s phoneme sequence, not its letter sequence. The way to proceed is to convert a word’s letter sequence to its phoneme sequence, manipulate the phoneme sequence to create other sequences that have a spoken form similar to the original word and then convert these new sequences back to letter sequences.

A 1976 report by Elovitz, Johnson, McHugh and Shore contains a list of 329 rules for converting a word’s letter sequence into a phoneme sequence. It seemed to me that this same set of rules could be driven in reverse to map a phoneme sequence back to a letter sequence (the complications involved in making this simple idea work will be discussed in another article).

Once we have a phoneme sequence how might it be modified to create similar sounding words?

The distinctive feature theory assigns ten or so features to every phoneme; these denote details such as manner and place of articulation. I decided to use these features as the basis of a distance metric between two phonemes (e.g., the more features two phonemes had in common the more similar they sounded). The book “Phonology theory and analysis” by Larry M. Hyman contains the required table of phoneme/distinctive features. Yes, I am using a theory from the 1950s and a book from the 1970s, but to start with I want to recreate what I know can be done before moving on to use more modern theories/data.
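A minimal sketch of the idea, assuming yes/no feature values and counting the number of features on which two phonemes differ. The five features and seven phonemes below are a cut-down illustrative table that I made up for this example; it is not Hyman's table, and the function names are hypothetical rather than those used in the tool.

```python
# Feature order: voiced, nasal, continuant, labial, coronal (illustrative only).
PHONEMES = {
    "p": (0, 0, 0, 1, 0),
    "b": (1, 0, 0, 1, 0),
    "m": (1, 1, 0, 1, 0),
    "t": (0, 0, 0, 0, 1),
    "d": (1, 0, 0, 0, 1),
    "s": (0, 0, 1, 0, 1),
    "g": (1, 0, 0, 0, 0),
}


def feature_distance(a, b):
    """Number of features on which phonemes a and b differ."""
    return sum(x != y for x, y in zip(PHONEMES[a], PHONEMES[b]))


def similar_phonemes(phoneme, max_distance=1):
    """Other phonemes within max_distance features of phoneme, sorted by name."""
    return sorted(other for other in PHONEMES
                  if other != phoneme
                  and feature_distance(phoneme, other) <= max_distance)


def sound_alike_sequences(sequence, max_distance=1):
    """Yield copies of sequence with one phoneme swapped for a similar one."""
    for i, phoneme in enumerate(sequence):
        if phoneme not in PHONEMES:
            continue  # no feature data for this phoneme in the toy table
        for other in similar_phonemes(phoneme, max_distance):
            yield sequence[:i] + [other] + sequence[i + 1:]


for candidate in sound_alike_sequences(["b", "ay", "t"]):
    print(candidate)
```

Each generated phoneme sequence would then have to be converted back to letters, which is where most of the difficulty lies.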

In practice this approach generates too many letter sequences that just don’t look like English words. The underlying problem is that the letter/phoneme rules were not designed to be run in reverse. Rather than tune the existing rules to do a better job when run in reverse I used the simpler method of filtering using letter bigrams to remove non-English letter sequences (e.g., ‘ck’ is not acceptable at the start of a word letter sequence). In pre-Internet times word bigram information was obtained from specialist cryptographic publishers, but these days psychologists researching human reading are a very good source of reliable information (or at least one I am familiar with).
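The filtering step might look something like the sketch below. It assumes the acceptable bigrams are learned from a word list, with word-initial bigrams tracked separately so that a pair such as 'ck' can be accepted inside a word and rejected at the start. The tiny training list and the function names are hypothetical; a real filter would use published bigram frequency data.

```python
def train_bigrams(words):
    """Return (word-initial bigrams, bigrams seen anywhere) for a word list."""
    initial, anywhere = set(), set()
    for word in words:
        word = word.lower()
        if len(word) < 2:
            continue
        initial.add(word[:2])
        anywhere.update(word[i:i + 2] for i in range(len(word) - 1))
    return initial, anywhere


def looks_english(candidate, initial, anywhere):
    """True if every bigram in candidate was seen in a matching position."""
    candidate = candidate.lower()
    if len(candidate) < 2:
        return bool(candidate)
    if candidate[:2] not in initial:
        return False
    return all(candidate[i:i + 2] in anywhere
               for i in range(len(candidate) - 1))


initial, anywhere = train_bigrams(["back", "code", "kick", "coat", "load"])
for candidate in ("coad", "ckoad"):
    print(candidate, looks_english(candidate, initial, anywhere))
```

A yes/no test like this is crude; using bigram frequencies would allow candidates to be ranked rather than just accepted or rejected.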

I have implemented this approach and the system currently supports the generation of:

- letter sequences that sound the same as the input word, e.g., cowd, coad, kowd, koad.

- letter sequences that sound similar to the input word, e.g., bite, dight, duyt, gight, guyt, might, muyt, pight, puyt, bit, byt, bait, bayt, beight, beet, beat, beit, beyt, boyt, boit, but, bied, bighd, buyd, bighp, buyp, bighng, buyng, bighth, buyth, bight, buyt

- letter sequences that sound like the input word said with a German accent, e.g., one, vun and woven, voughen, vuphen.

The output can be piped through a spell checker to remove non-dictionary letter sequences.

How accurate are the various sequence translations? Based on a comparison against manual translation of several thousand words from the Brown corpus, Elovitz et al. claim around 90% of words in random text will be correctly translated to phonemes. I have not done any empirical analysis of the performance of the rules when used to convert phoneme sequences to letters; it will obviously be less than 90%.

The source code of the somewhat experimental tool is available for download. Please note that the code has only been built on Linux, is likely to be fragile in various places and needs a recent copy of the pcre library. Bug reports welcome.

Some of the uses for a word’s phoneme sequence include:

- matching names contained in information transcribed using different conventions or by different people (i.e., slight spelling differences).

- better word splitting at the end of line in LaTeX. Word splitting decisions are best made using sound units.

- better spell checking, particularly for non-native English speakers when coupled with a sound model of common mistakes made by speakers of other languages.

- aid to remembering partially forgotten words whose approximate sound can be remembered.

- inventing trendy spellings for words.

Where next?

Knowledge of the written and spoken word has moved forward in the last 25 years and various other techniques that might improve the performance of the tool are now available. My interest in the written, rather than the spoken, form of code means I have only followed written/sound conversion at a superficial level; reader suggestions on more modern theories, models and data sources that might be used to improve the tool’s performance are most welcome. A few of my own thoughts:

- As I understand it modern text to speech systems are driven by models derived through machine learning (i.e., some learning algorithm has processed lots of data). There might be existing models out there that can be used; otherwise the MRC Psycholinguistic Database is a good source for lots of word phoneme sequences and perhaps might be used to learn rules for both letter to phoneme and phoneme to letter conversion.

- Is Distinctive feature theory the best basis for a phoneme sounds-like metric? If not which theory should be used and where can the required detailed phoneme information be found? Hyman gives yes/no values for each feature while the first edition of Ladefoged’s “A Course in Phonetics” gives percentage contribution values for the distinctive features of some phonemes; subsequent editions don’t include this information. Is a complete table of percentage contribution of each feature to every phoneme available somewhere?

- A more sophisticated approach to sounds-like would take phoneme context into account. A slightly less crude approach would be to make use of phoneme bigram information extracted from the MRC database. What is really needed is a theory of sounds-like and some machine usable rules; this would hopefully support the adding and removal of phonemes and not just changing existing ones.

As part of my R education I plan to create an R sounds-like package.

In the next article I will talk about how I used and abused the PCRE (Perl Compatible Regular Expressions) library to recognize a context dependent set of rules and generate corresponding output.

Incorrect spelling

While even a mediocre identifier name can provide useful information to a reader of the source, a poorly chosen name can create confusion and require extra effort to remember. An author’s good intent can be spoiled by spelling mistakes, which are likely to be common if the developer is not a native speaker of English (or whatever natural language is applicable).

Identifiers have characteristics which make them difficult targets for traditional spell checking algorithms; they often contain specialized words, dictionary words may be abbreviated in some way (making phonetic techniques impossible) and there is unlikely to be any reliable surrounding context.

Identifiers share many of the characteristics of search engine queries: they contain a small number of words that don’t fit together into a syntactically correct sentence and any surrounding context (e.g., previous queries or other identifiers) cannot be trusted. However, search engines have their logs of millions of previous search queries to fall back on, enabling them to suggest (often remarkably accurate) alternatives to non-dictionary words, specialist domains and recently coined terms. Because developers don’t receive any feedback on their spelling mistakes revision control systems are unlikely to contain any relevant information that can be mined.

One solution is for source code editors to require authors to fully specify all of the words used in an identifier when it is declared; spell checking and suitable abbreviation rules being applied at this point. Subsequent uses of the identifier can be input using the abbreviated form. This approach could considerably improve consistency of identifier usage across a project’s source code (it could also flag attempts to use both orderings of a word pair, e.g., number count and count number). The word abbreviation mapping could be stored (perhaps in a comment at the end of the source) for use by other tools and personalized developer preferences stored in a local configuration file. It is time for source code editors to start taking a more active role in helping developers write readable code.
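As a small illustration of the kind of check an editor could run, the following sketch flags identifiers that use the same words in different orders. The splitting rule (underscores and camelCase) and the function names are assumptions made for the example; no existing editor is being described.

```python
import re
from collections import defaultdict


def split_identifier(name):
    """Split snake_case or camelCase into lower case words."""
    parts = re.findall(r"[A-Z]?[a-z]+|[A-Z]+(?![a-z])|\d+", name)
    return [part.lower() for part in parts]


def conflicting_orderings(identifiers):
    """Return groups of word orderings that use the same words differently."""
    groups = defaultdict(set)
    for name in identifiers:
        words = tuple(split_identifier(name))
        if len(words) > 1:
            groups[tuple(sorted(words))].add(words)
    return [sorted(orders) for orders in groups.values() if len(orders) > 1]


print(conflicting_orderings(["number_count", "countNumber", "total"]))
```

A real tool would also need the abbreviation mapping, so that an abbreviated form and its fully spelled form are treated as the same word.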
