
Sample size needed to compare performance of two languages

A humungous organization wants to minimise one or more of: program development time/cost, coding mistakes made, maintenance time/cost, and has decided to use one of the existing languages X or Y.

To make an informed decision, it is necessary to collect the required data on time/cost/mistakes by monitoring the development process, and recording the appropriate information.

The variability of developer performance, and language/problem interaction, means that it is necessary to monitor multiple development teams and multiple language/problem pairs, using statistical techniques to detect any language-driven implementation performance differences.

How many development teams need to be monitored to reliably detect a performance difference driven by language used, given the variability of the major factors involved in the process?

If we assume that implementation times, for the same program, have a normal distribution (it might lean towards lognormal, but the maths is horrible), then there is a known formula. Three values need to be specified and plugged into this formula: the statistical significance (i.e., the probability of detecting an effect when none is present, say 5%), the statistical power (i.e., the probability of detecting an effect when one is present, say 80%), and Cohen’s d; for an overview see section 10.2.

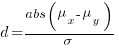

Cohen’s d is the ratio $d = \frac{\mu_x - \mu_y}{\sigma}$, where $\mu_x$ and $\mu_y$ are the mean values of the quantity being measured for the programs written in the respective languages, and $\sigma$ is the pooled standard deviation.

Say the mean time to implement a program is $\mu$, what is a good estimate for the pooled standard deviation, $\sigma$, of the implementation times?

Having 66% of teams delivering within a factor of two of the mean delivery time is consistent with variation in LOC for the same program and estimation accuracy, and if anything sounds low (to me).

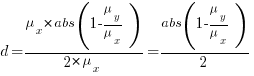

Rewriting the Cohen’s d ratio:

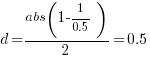

If the implementation time when using language X is half that of using Y, we get $d = 0.5$. Plugging the three values into the pwr.t.test function, in R’s pwr package, we get:

    > library("pwr")
    > pwr.t.test(d=0.5, sig.level=0.05, power=0.8)

         Two-sample t test power calculation

                  n = 63.76561
                  d = 0.5
          sig.level = 0.05
              power = 0.8
        alternative = two.sided

    NOTE: n is number in *each* group

In other words, data from 64 teams using language X and 64 teams using language Y is needed to reliably detect (at the chosen level of significance and power) whether there is a difference in the mean performance (of whatever was measured) when implementing the same project.
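As a rough cross-check on the n = 63.76561 reported above, the same calculation can be sketched without the pwr package. This is a minimal sketch, assuming base R's `power.t.test` (from the stats package) as a stand-in for `pwr.t.test`, with `sd = 1` so that `delta` plays the role of Cohen's d. The closed-form line is the usual normal approximation, not the exact t-test calculation, so it should come out slightly lower.

```r
# Stand-in for pwr.t.test: base R's power.t.test, with sd = 1 so that
# delta is equal to Cohen's d (an assumption of this sketch).
d <- 0.5
alpha <- 0.05
power <- 0.8

exact <- power.t.test(delta = d, sd = 1, sig.level = alpha, power = power,
                      type = "two.sample", alternative = "two.sided")

# Normal approximation: n per group ~ 2 * ((z_{1-alpha/2} + z_{power}) / d)^2
approx_n <- 2 * ((qnorm(1 - alpha / 2) + qnorm(power)) / d)^2

# Round up, because a fraction of a team cannot be monitored.
teams_per_language <- ceiling(exact$n)

print(c(exact = exact$n, approx = approx_n, teams = teams_per_language))
```

Rounding the exact value up is what turns 63.8 into the 64 teams per language quoted in the text.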

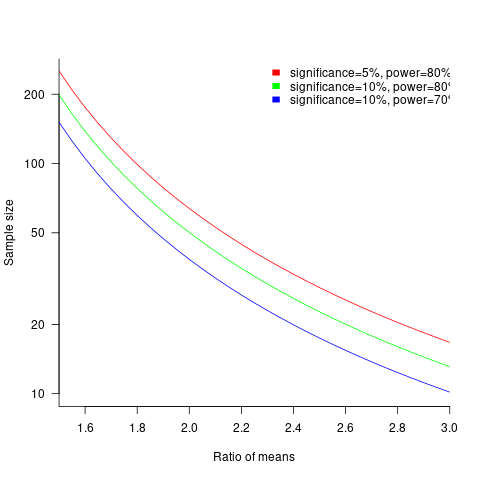

The plot below shows sample size required for a t-test testing for a difference between two means, for a range of X/Y mean performance ratios, with red line showing the commonly used values (listed above) and other colors showing sample sizes for more relaxed acceptance bounds (code):
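The kind of calculation behind such a plot can be sketched as follows. This is not the code linked above. It assumes base R's `power.t.test` rather than pwr, and it assumes the mapping d = 1 - ratio, chosen only because it reproduces d = 0.5 for the half-the-time case in the text. The helper name `teams_needed` and the two "relaxed" significance/power pairs are illustrative choices, not values taken from the post.

```r
# Hypothetical helper: teams needed per language for a given X/Y mean ratio.
# Assumes d = 1 - ratio, which gives d = 0.5 when X takes half the time of Y.
teams_needed <- function(ratio, sig_level = 0.05, power = 0.8) {
  d <- 1 - ratio
  n <- power.t.test(delta = d, sd = 1, sig.level = sig_level, power = power)$n
  ceiling(n)
}

ratios <- c(0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 1/3)

# Commonly used bounds first, then two more relaxed (illustrative) pairs.
bounds <- data.frame(sig_level = c(0.05, 0.10, 0.20),
                     power     = c(0.80, 0.70, 0.60))

# One column of sample sizes per significance/power pair.
sizes <- sapply(seq_len(nrow(bounds)), function(i)
  sapply(ratios, teams_needed,
         sig_level = bounds$sig_level[i], power = bounds$power[i]))

rownames(sizes) <- round(ratios, 2)
colnames(sizes) <- paste0("sig=", bounds$sig_level, ",pow=", bounds$power)
print(sizes)
```

Each row is a performance ratio and each column a set of acceptance bounds; the required number of teams falls as the ratio moves away from 1 and as the bounds are relaxed.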

Unless the performance difference between languages is very large (e.g., a factor of three) the required sample size is going to push measurement costs into many tens of millions (£1 million per team, to develop a realistic application, multiplied by two and then multiplied by sample size).
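The arithmetic in the parenthesis can be spelled out for the 64-teams case. This is a minimal sketch using only the figures given in the text (£1 million per team, two languages, 64 teams per language); the variable names are mine.

```r
# Figures from the text: 1 million pounds per team, two languages.
cost_per_team <- 1e6
languages <- 2
teams_per_language <- 64   # from the d = 0.5 power calculation

total_cost <- cost_per_team * languages * teams_per_language
cat(sprintf("Total: GBP %.0f million\n", total_cost / 1e6))
```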

For small programs solving certain kinds of problems, a factor of three, or more, performance difference between languages is not unusual (e.g., me using R for this post, versus using Python). As programs grow, the mundane code becomes more and more dominant, with the special case language performance gains playing an outsized role in story telling.

There have been studies comparing pairs of languages. Unfortunately, most have involved students implementing short problems; one attempted to measure the impact of programming language on coding competition performance (and gets very confused); the largest study I know of compared Fortran and Ada implementations of a satellite ground station support system.

The performance difference detected may be due to the particular problem implemented. The language/problem performance correlation can be solved by implementing a wide range of problems (using 64 teams per language).

A statistically meaningful comparison of the implementation costs of language pairs will take many years and cost many millions. This question is unlikely to ever be answered. Move on.

My view is that, at least for the widely used languages, the implementation/maintenance performance issues are primarily driven by the ecosystem, rather than the language.

Sampling is now an issue in software engineering research

Data analysis in software engineering often has to make do with measurements extracted from the handful of measurable/measured instances at hand, but every now and again the abundance of stuff to measure is such that a subset has to be selected. How should the subset be selected?

Population sampling is a well established part of statistics and a variety of terms have sprung up to label the various strategies used. I think ‘Accidental sampling’ accurately describes the provenance of many software engineering datasets seen in research papers and some of my work. It is quite common to see academic papers using exactly the same sample as previously published papers; perhaps a new term is needed to describe using samples that are identical to those used in previous papers: lazy sampling, coat-tail sampling…

Program source code, which was once so hard to obtain in any significant quantity, is now available by the terabyte load, a population so large that only the fastest analyses and the most general of questions warrant processing it as a whole.

The question being asked can itself intrinsically lead to a reduction in the size of the population, e.g., properties of programs written in X, or programs with more than 10 active developers.

What should be the unit of sampling? The package making up a standalone system/library is a common choice (e.g., all the files in the tar or zip archive from which binaries are built); this can result in unexpected source files being included in the measurement process, such as test programs. A less common choice is to use individual source files as the sampling unit (it is so much easier to randomly select a list of packages, download, extract and measure them one by one).

Are the source file characteristics of the contents of 1,000 packages statistically very similar to 25,000 files obtained by randomly selecting 1 file from 25,000 packages? I don’t know.
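One way to start probing this question is to draw both kinds of sample from the same corpus index and compare them. The sketch below only shows the mechanics of the two schemes: the corpus is simulated, and the package sizes and the `loc` column are made-up stand-ins, so the printed numbers say nothing about real source code.

```r
set.seed(1)  # reproducible illustration only

# Simulated stand-in for a corpus index: one row per source file.
# Package sizes and the 'loc' column are made up, not real measurements.
n_packages <- 30000
files_per_pkg <- sample(5:50, n_packages, replace = TRUE)
corpus <- data.frame(package = rep(seq_len(n_packages), files_per_pkg))
corpus$loc <- round(rlnorm(nrow(corpus), meanlog = 5, sdlog = 1))

# Scheme 1: package as sampling unit; keep every file in 1,000 random packages.
pkgs_1 <- sample(n_packages, 1000)
by_package <- corpus[corpus$package %in% pkgs_1, ]

# Scheme 2: file as sampling unit; one random file from each of 25,000 packages.
pkgs_2 <- sample(n_packages, 25000)
candidates <- corpus[corpus$package %in% pkgs_2, ]
pick <- tapply(seq_len(nrow(candidates)), candidates$package,
               function(rows) rows[sample.int(length(rows), 1)])
by_file <- candidates[as.integer(pick), ]

# Compare the two samples, e.g. via quantiles of a per-file measurement.
print(rbind(by_package = quantile(by_package$loc),
            by_file    = quantile(by_file$loc)))
```

With real data, files within a package are likely to share characteristics (same authors, same coding conventions), which is exactly the clustering that could make the two samples differ.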

A recent paper by Nagappan, Zimmermann and Bird proposes a sampling algorithm which looks like quota or coverage sampling in that candidate similarity to the current sample is used to decide whether to add that candidate (too much similarity results in exclusion). The authors misleadingly associate the term ‘representativeness’ with this algorithm, where common statistical usage of representative requires that if 40% of a population have attribute Z then 40% of a sample’s members will have this attribute (within sampling tolerances).
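The statistical sense of representative can be illustrated with a simple random sample. This is a minimal sketch on a simulated population in which 40% of members have attribute Z; the population size, sample size and the 95% tolerance band are illustrative assumptions, and this is not the algorithm from the paper.

```r
set.seed(2)  # reproducible illustration only

# Simulated population: roughly 40% of members have attribute Z (coded as 1).
population <- rbinom(1e6, 1, 0.4)

# Simple random sample of 1,000 members.
n <- 1000
srs <- sample(population, n)
p_hat <- mean(srs)

# Approximate 95% sampling tolerance for a proportion of 0.4 at this n.
tolerance <- qnorm(0.975) * sqrt(0.4 * 0.6 / n)

cat(sprintf("sample proportion %.3f, expected 0.400 +/- %.3f\n",
            p_hat, tolerance))
```

A sample built by excluding candidates that are too similar to those already chosen would not be expected to track the population proportion in this way.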

If software engineering research is to be useful to commercial software engineering, any discoveries need to be applicable to samples outside of those used in the original analysis. At the moment researchers are having a hard enough time finding any useful patterns in their data; this is not a reason to continue with the practice of coat-tail sampling, and we all need to start addressing sampling issues.
