
The White House report on adopting memory safety

Last month’s White House report: BACK TO THE BUILDING BLOCKS: A Path Towards Secure and Measurable Software “… outlines two fundamental shifts: the need to both rebalance the responsibility to defend cyberspace and realign incentives to favor long-term cybersecurity investments.”

From the abstract: “First, in order to reduce memory safety vulnerabilities at scale, … This report focuses on the programming language as a primary building block, …” Wow, I never expected to see the term ‘memory safety’ in a report from the White House (not that I recall ever reading a White House report). And, is this the first White House report to talk about programming languages?

tl;dr They mistakenly focus on the tools (i.e., programming languages); the focus needs to be on how the tools are used, e.g., require switching on C compiler’s memory safety checks, which currently default to off.

The report’s intent is to get the community to progress from defence (e.g., virus scanning) to offence (e.g., removing the vulnerabilities at source). The three-pronged attack focuses on programming languages, hardware (e.g., CHERI), and formal methods. The report is a rallying call to the troops, who are, I assume, senior executives with little or no knowledge of writing software.

How did memory safety and programming languages enter the political limelight? What caused the White House claim that “…, one of the most impactful actions software and hardware manufacturers can take is adopting memory safe programming languages.”?

The cited reference is a report published two months earlier: The Case for Memory Safe Roadmaps: Why Both C-Suite Executives and Technical Experts Need to Take Memory Safe Coding Seriously, published by an alphabet soup of national security agencies.

This report starts by stating the obvious (at least to developers): “Memory safety vulnerabilities are the most prevalent type of disclosed software vulnerability.” (one Microsoft report says 70%). It then goes on to make the optimistic claim that: “Memory safe programming languages (MSLs) can eliminate memory safety vulnerabilities.”

This concept of a ‘memory safe programming language’ leads the authors to fall into the trap of believing that tools are the problem, rather than how the tools are used.

C and C++ are memory safe programming languages when the appropriate compiler options are switched on, e.g., gcc’s sanitize flags. Rust and Ada are not memory safe programming languages when the appropriate compiler options are switched on/off, or object/function definitions include the unsafe keyword.

People argue over the definition of memory safety. At the implementation level, it includes checks that storage is not accessed outside of its defined bounds, e.g., arrays are not indexed outside the specified lower/upper bound.
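As a minimal sketch of what such a check involves (written in Python for brevity; the class name `BoundedArray` and the helper `try_store` are made up for illustration, and a compiler would emit the comparison inline rather than call a method), every access is compared against the declared lower/upper bound before storage is touched:

```python
class BoundedArray:
    """Fixed-size array with explicit lower/upper index bounds (hypothetical helper)."""

    def __init__(self, lower, upper, fill=0):
        if upper < lower:
            raise ValueError("upper bound must not be below lower bound")
        self.lower = lower
        self.upper = upper
        self._storage = [fill] * (upper - lower + 1)

    def _offset(self, index):
        # The bounds check: reject any index outside lower..upper.
        if not (self.lower <= index <= self.upper):
            raise IndexError(
                f"index {index} outside bounds {self.lower}..{self.upper}")
        return index - self.lower

    def __getitem__(self, index):
        return self._storage[self._offset(index)]

    def __setitem__(self, index, value):
        self._storage[self._offset(index)] = value


def try_store(arr, index, value):
    """Return True if the store was within bounds, False if it was rejected."""
    try:
        arr[index] = value
        return True
    except IndexError:
        return False


a = BoundedArray(1, 10)                # indices 1..10
in_bounds = try_store(a, 10, 42)       # last valid element
out_of_bounds = try_store(a, 11, 42)   # one past the end: rejected
```

The cost of the check is the single comparison in `_offset`; whether a violation should raise, log, or terminate is a separate policy decision.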

I’m a great fan of array/pointer bounds checking and since the 1980s have been using bounds checking tools to check my C programs. I found bounds checking is a very cost-effective way of detecting coding mistakes.

Culture drives the (non)use of bounds checking. Pascal, Ada and now Rust have a culture of bounds checking during development, amongst other checks. C, C++, and other languages have a culture of not switching on bounds checking.

Shipping programs with/without bounds checking enabled is a contentious issue. The three main factors are:

- Runtime performance overhead of doing the checks (which can vary from almost nothing to a factor of 5+, depending on the frequency of bounds checked accesses {checks don’t need to be made when the compiler can figure out that a particular access is always within bounds}). I would expect the performance overhead to be about the same for C/Rust compilers using the same compiler technology (as the open source compilers do). A recent study found C (no checking) to be 1.77 times faster, on average, than Rust (with checking),

- Runtime memory overhead. Adding code to check memory accesses increases the size of programs. This can be an issue for embedded systems, where memory is not as plentiful as desktop systems (recent survey of Rust on embedded systems),

- Studies (here and here) have found that programs can be remarkably robust in the presence of errors. Developers’ everyday experience is that programs containing many coding mistakes regularly behave as expected most of the time.

If bounds checking is enabled on shipped applications, what should happen when a bounds violation is detected?

Many bounds violations are likely to be benign, and a few not so. Should users have the option of continuing program execution after a violation is flagged (assuming they have been trained to understand the program message they are seeing and are aware of the response options)?

Java programs ship with bounds checking enabled, but I have not seen any studies of user response to runtime errors.

The reason that C/C++ is the language used to write so many of the programs listed in vulnerability databases is that these languages are popular and widely used. The Rust security advisory database contains few entries because few widely used programs are written in Rust. It’s possible to write unsafe code in Rust, just like C/C++, and studies find that developers regularly write such code and security risks exist within the Rust ecosystem, just like C/C++.

There have been various attempts to implement bounds checking in x86 processors. Intel added the MPX instruction, but there were problems with the specification, and support was discontinued in 2019.

The CHERI hardware discussed in the White House report is not yet commercially available, but organizations are working towards commercial products.

Sample size needed to compare performance of two languages

A humungous organization wants to minimise one or more of: program development time/cost, coding mistakes made, maintenance time/cost, and has decided to use one of the existing languages X or Y.

To make an informed decision, it is necessary to collect the required data on time/cost/mistakes by monitoring the development process, and recording the appropriate information.

The variability of developer performance, and language/problem interaction means that it is necessary to monitor multiple development teams and multiple language/problem pairs, using statistical techniques to detect any language driven implementation performance differences.

How many development teams need to be monitored to reliably detect a performance difference driven by language used, given the variability of the major factors involved in the process?

If we assume that implementation times, for the same program, have a normal distribution (it might lean towards lognormal, but the maths is horrible), then there is a known formula. Three values need to be specified, and plugged into this formula: the statistical significance (i.e., the probability of detecting an effect when none is present, say 5%), the statistical power (i.e., the probability of detecting that an effect is present, say 80%), and Cohen’s d; for an overview see section 10.2.

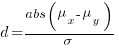

Cohen’s d is the ratio $\frac{\mu_x-\mu_y}{\sigma}$, where $\mu_x$ and $\mu_y$ are the mean values of the quantity being measured for the programs written in the respective languages, and $\sigma$ is the pooled standard deviation.

Say the mean time to implement a program is $\mu$, what is a good estimate for the pooled standard deviation, $\sigma$, of the implementation times?

Having 66% of teams delivering within a factor of two of the mean delivery time is consistent with variation in LOC for the same program and estimation accuracy, and if anything sounds low (to me).

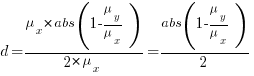

Rewriting the Cohen’s d ratio:
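One way of writing the ratio out, as a sketch under an explicit assumption: take the pooled standard deviation to be roughly equal to the mean implementation time using Y, i.e., $\sigma \approx \mu_y$ (a reading of the factor-of-two spread, not something stated as a formula), with $\mu_x$ and $\mu_y$ the mean implementation times using X and Y. The sign of $d$ does not matter for a two-sided test, so the magnitude is used:

```latex
|d| = \frac{|\mu_x - \mu_y|}{\sigma}
    \approx \frac{|\mu_x - \mu_y|}{\mu_y}
    = \left| \frac{\mu_x}{\mu_y} - 1 \right|
```

With $\mu_x = \mu_y / 2$ this evaluates to $|d| = 0.5$.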

If the implementation time when using language X is half that of using Y, we get $d = 0.5$. Plugging the three values into the pwr.t.test function, in R’s pwr package, we get:

    > library("pwr")
    > pwr.t.test(d=0.5, sig.level=0.05, power=0.8)

         Two-sample t test power calculation

                  n = 63.76561
                  d = 0.5
          sig.level = 0.05
              power = 0.8
        alternative = two.sided

    NOTE: n is number in *each* group

In other words, data from 64 teams using language X and 64 teams using language Y is needed to reliably detect (at the chosen level of significance and power) whether there is a difference in the mean performance (of whatever was measured) when implementing the same project.
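For readers without R to hand, the calculation can be roughly cross-checked using only Python's standard library. This is a sketch of the textbook normal approximation, not what `pwr.t.test` does internally (it solves for n using the t distribution); the function name `approx_n_per_group` is made up for illustration:

```python
import math
from statistics import NormalDist


def approx_n_per_group(d, sig_level=0.05, power=0.8):
    """Normal-approximation sample size per group for a two-sided, two-sample test."""
    z = NormalDist()                          # standard normal distribution
    z_alpha = z.inv_cdf(1 - sig_level / 2)    # two-sided critical value
    z_power = z.inv_cdf(power)                # quantile for the requested power
    return 2 * ((z_alpha + z_power) / d) ** 2


n = approx_n_per_group(0.5)
teams_per_language = math.ceil(n)
print(f"n = {n:.2f}, so about {teams_per_language} teams per language")
```

The approximation comes out roughly one team per group lower than the t-based figure of 63.77, because it ignores the extra uncertainty from estimating the standard deviation; it is good enough for sizing a budget.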

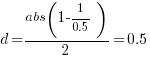

The plot below shows sample size required for a t-test testing for a difference between two means, for a range of X/Y mean performance ratios, with red line showing the commonly used values (listed above) and other colors showing sample sizes for more relaxed acceptance bounds (code):

Unless the performance difference between languages is very large (e.g., a factor of three) the required sample size is going to push measurement costs into many tens of millions (£1 million per team, to develop a realistic application, multiplied by two and then multiplied by sample size).
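The arithmetic in the parentheses, as a small sketch (the function name `measurement_cost` is made up; the £1 million per team and the 64 teams per language are the figures used in this post):

```python
def measurement_cost(teams_per_language, cost_per_team=1_000_000, languages=2):
    """Total cost, in pounds, of funding every team for every language."""
    return teams_per_language * languages * cost_per_team


cost = measurement_cost(64)   # 64 teams per language, two languages
print(f"£{cost:,}")
```

For the commonly used significance and power values this is £128 million for a single problem, before any attempt to cover a range of problems.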

For small programs solving certain kinds of problems, a factor of three, or more, performance difference between languages is not unusual (e.g., me using R for this post, versus using Python). As programs grow, the mundane code becomes more and more dominant, with the special case language performance gains playing an outsized role in story telling.

There have been studies comparing pairs of languages. Unfortunately, most have involved students implementing short problems; one attempted to measure the impact of programming language on coding competition performance (and gets very confused); the largest study I know of compared Fortran and Ada implementations of a satellite ground station support system.

The performance difference detected may be due to the particular problem implemented. The language/problem performance correlation can be solved by implementing a wide range of problems (using 64 teams per language).

A statistically meaningful comparison of the implementation costs of language pairs will take many years and cost many millions. This question is unlikely to ever be answered. Move on.

My view is that, at least for the widely used languages, the implementation/maintenance performance issues are primarily driven by the ecosystem, rather than the language.

2023 in the programming language standards’ world

Two weeks ago I was on a virtual meeting of IST/5, the committee responsible for programming language standards in the UK. IST/5 has a new chairman, Guy Davidson, whose efficiency is very un-standards-like.

It’s been 18 months since I last reported on the programming language standards’ world, what has been going on?

2023 is going to be a bumper year for the publication of revised Standards of long-established programming languages: COBOL, Fortran, C, and C++ (a revised Standard for Ada was published last year).

Yes, COBOL; a new COBOL Standard was published in January. Reports of its death were premature, e.g., my 2014 post suggesting that the latest version would be the last version of the Standard, and the closing of PL22.4, the US Cobol group, in 2017. There has even been progress on the COBOL front end for gcc, which now supports COBOL 85.

The size of the COBOL Standard has leapt from 955 to 1,229 pages (around 200 new pages in the normative text, 100 in the annexes). Comparing the 2014/2023 documents, I could not see any major additions, just lots of small changes spread throughout the document.

Every Standard has a project editor, the person tasked with creating a document that reflects the wishes/votes of its committee; the project editor sends the agreed upon document to ISO to be published as the official ISO Standard. The ISO editors would invariably request that the project editor make tiresome organizational changes to the document, and then add a front page and ISO copyright notice; from time to time an ISO editor took it upon themselves to reformat a document, sometimes completely mangling its contents. The latest diktat from ISO requires that submitted documents use the Cambria font. Why Cambria? What else, other than that it is the font used by the Microsoft Word template promoted by ISO as the standard format for Standards documents.

All project editors have stories to tell about shepherding their document through the ISO editing process. With three Standards (COBOL lives in a disjoint ecosystem) up for publication this year, ISO editorial issues have become a widespread topic of discussion in the bubble that is language standards.

Traditionally, anybody wanting to be actively involved with a language standard in the UK had to find the contact details of the convenor of the corresponding language panel, and then ask to be added to the panel mailing list. My, and others’, understanding was that provided the person was a UK citizen or worked for a UK domiciled company, their application could not be turned down (not that people were/are banging on the door to join). BSI have slowly been computerizing everything, and, as of a few years ago, people can apply to join a panel via a web page; panel members are emailed the CV of applicants and asked if “… applicant’s knowledge would be beneficial to the work programme and panel…”. In the US, people pay an annual fee for membership of a language committee ($1,340/$2,275). Nobody seems to have asked whether the criteria for being accepted as a panel member have changed. Given that BSI had recently rejected somebody’s application to join the C++ panel, the C++ panel convenor accepted the action to find out if the rules have changed.

In December, BSI emailed language panel members asking them to confirm that they were actively participating. One outcome of this review of active panel membership was the disbanding of panels with ‘few’ active members (‘few’ might be one or two, IST/5 members were not sure). The panels that I know to have survived this cull are: Fortran, C, Ada, and C++. I did not receive any email relating to two panels that I thought I was a member of; one or more panel convenors may be appealing their panel being culled.

Some language panels have been moribund for years, being little more than bullet points on the IST/5 agenda (those involved having retired or otherwise moved on).

Programming Languages: History and Fundamentals

Programming Languages: History and Fundamentals by Jean E. Sammet is often cited in discussions of language history, but very rarely read (I appreciate that many oft cited books have not been read by those citing them, but age further reduces the likelihood that anybody has read this book; it was published in 1969). I read this book as an undergraduate, but did not think much of it. For around five years it has been on my list of books to buy, should a second-hand copy become available below £10 (I buy anything vaguely interesting below this price, with most ending up left on trains or the book table of coffee shops).

Thanks to Adam Gashlin the Internet Archive now contains a downloadable copy.

The list of 120 languages covered contains a handful of the 28 languages covered in an article from 1957. Sammet says that of the 120, 20 are already dead or on obsolete computers (i.e., it is unlikely that another compiler will be written), and that about 15 are widely used/implemented.

Today, the book is no longer a discussion of the recent past, but a window into the Cambrian explosion of programming languages that happened in the 1960s (almost everything since then has been a variation on a theme); languages from the 1950s are also included.

How does the material appear to me from a 2022 vantage-point?

The organization of the book reminded me that programming languages were once categorized by application domain, i.e., scientific/engineering users, business users, and string & list processing (i.e., academic users). This division reflected the market segmentation for computer hardware (back then, personal computers were still in the realm of science fiction). Modern programming language books (e.g., Scott’s “Programming Language Pragmatics”) often organize material based on implementation details, e.g., lexical analysis, and scoping rules.

The overview of programming languages given in the first three chapters covers nearly all the basic issues that beginners are taught today, but the emphasis is different (plus typographical differences, such as keyword spelt ‘key word’).

Two major language constructs are missing. Dynamic storage allocation is not discussed (Wirth’s book Algorithms + Data Structures = Programs is seven years in the future, and Kernighan and Ritchie’s The C Programming Language nine years), and Simula gets a paragraph, but no mention of the object-oriented concepts it introduced.

What is a programming language, and what are the distinguishing features that make some of them high-level programming languages?

These questions may sound pointless or obvious today, but people used to spend lots of time arguing over what was, or was not, a high-level language.

Sammet says: “… the first characteristic of a programming language is that the user can write a program without knowing much—if anything—about the physical characteristics of the machine on which the program is to be run.”, and goes on to infer: “… a major characteristic of a programming language is that there must be a reasonable potential of having a source program written in that language run on two computers with different machine codes without rewriting the source program. … In most programming languages, some—but often very little—rewriting of the source program is necessary.”

The reason that some rewriting of the source was likely to be needed is that there were often a lot of small variations between compilers for the same language. Compilers tended to be bespoke, i.e., the Fortran compiler for the X cpu running OS Y was written specifically for that combination. Retargeting an existing compiler to a new cpu or OS was much talked about, but it was more fun to write a new compiler (and anyway, support for new features was needed, and it was simpler to start from scratch; page 149 lists differences in Fortran compilers across IBM machines). It didn’t help that there was also a lot of variation in fundamental quantities such as word length, e.g., 16, 18, 20, 24, 32, 36, 40, 48, 60 bit words; see page 18 of Dictionary of Computer Languages.

Sammet makes the distinction: “One of the prime differences between assembly and higher level languages is that to date the latter do not have the capability of modifying themselves at execution time.”

Sammet then goes on to list the advantages and disadvantages of what she calls higher level languages. Most of the claimed advantages will be familiar to readers: “Ease of Learning”, “Ease of Coding and Understanding”, “Ease of Debugging”, and “Ease of Maintaining and Documenting”. The disadvantages included: “Time Required for Compiling” (the issue here is converting assembler source to object code is much faster than compiling a high-level language), “Inefficient Object Code” (the translation process was often a one-to-one mapping of what was written, e.g., little reuse of register contents), “Difficulties in Debugging Without Learning Machine Language” (symbolic debuggers are still in the future).

Sammet’s observation: “In spite of the fact that higher level languages have been with us for over 10 years, there has been relatively little quantitative or qualitative analysis of their advantages and disadvantages.” is still true 50 years later.

If you enjoy learning about lots of different languages, you will like this book. The discussion of specific languages contains copious examples, which for me brought things to life.

Sites such as the Internet Archive and Bitsavers make the book’s references accessible (there are a few I had not seen before), and offer readers a path to pre-Cambrian times.

Saul Rosen’s 1967 book “Programming Systems and Languages” is sometimes cited in discussions of programming language history. This book is a collection of papers that discuss a variety of languages and the operating systems that support them. Fewer languages are covered, but in more depth, along with lots of implementation details. Again, lots of interesting references.

Programming language similarity based on their traits

A programming language is sometimes described as being similar to another, more widely known, language.

How might language similarity be measured?

Biologists ask a very similar question, and research goes back several hundred years; phenetics (also known as taximetrics) attempts to classify organisms based on overall similarity of observable traits.

One answer to this question is based on distance matrices.

The process starts by flagging the presence/absence of each observed trait. Taking language keywords (or reserved words) as an example, we have (for a subset of C, Fortran, and OCaml):

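| | if | then | function | for | do | dimension | object |
|---|---|---|---|---|---|---|---|
| C | 1 | 1 | 0 | 1 | 1 | 0 | 0 |
| Fortran | 1 | 0 | 1 | 0 | 1 | 1 | 0 |
| OCaml | 1 | 1 | 1 | 1 | 1 | 0 | 1 |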

The distance between these languages is calculated by treating this keyword presence/absence information as an n-dimensional space, with each language occupying a point in this space. The following shows the Euclidean distance between pairs of languages (using the full dataset; code+data):

| | C | Fortran | OCaml |
|---|---|---|---|
| C | 0 | 7.615773 | 8.717798 |
| Fortran | 7.615773 | 0 | 8.831761 |
| OCaml | 8.717798 | 8.831761 | 0 |
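The same calculation can be sketched in a few lines of Python, here applied only to the seven-keyword subset shown in the presence/absence table, so the numbers are much smaller than those from the full dataset (the names `TRAITS`, `euclidean` and `distance` are made up for illustration):

```python
import math

KEYWORDS = ["if", "then", "function", "for", "do", "dimension", "object"]

# Presence/absence rows copied from the seven-keyword subset table.
TRAITS = {
    "C":       [1, 1, 0, 1, 1, 0, 0],
    "Fortran": [1, 0, 1, 0, 1, 1, 0],
    "OCaml":   [1, 1, 1, 1, 1, 0, 1],
}


def euclidean(u, v):
    """Euclidean distance between two equal-length trait vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


# Symmetric distance matrix, stored as a dict of dicts.
distance = {a: {b: euclidean(TRAITS[a], TRAITS[b]) for b in TRAITS}
            for a in TRAITS}

for name, row in distance.items():
    print(name, [round(d, 3) for d in row.values()])
```

With 0/1 traits, the squared distance is simply the number of keywords present in one language but not the other.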

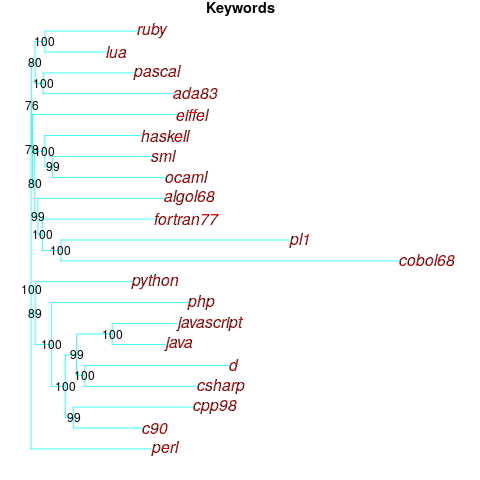

Algorithms are available to map these distance pairs into tree form; for biological organisms this is known as a phylogenetic tree. The plot below shows such a tree derived from the keywords supported by 21 languages (numbers explained below, code+data):
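As a rough illustration of how distance pairs become a tree, the following sketch uses average-linkage agglomerative clustering (UPGMA). This algorithm is chosen here for simplicity and is an assumption; it is not necessarily the one used to produce the plotted tree. The function name and the nested-tuple tree representation are made up:

```python
from itertools import combinations


def average_linkage_tree(names, dist):
    """Repeatedly merge the two closest clusters; return the tree as nested tuples.

    `dist` maps frozenset({a, b}) to the distance between leaf names a and b.
    """
    # Each cluster is (tree, leaves): the nested-tuple tree and its leaf names.
    clusters = [(name, (name,)) for name in names]

    def cluster_distance(c1, c2):
        # Average distance over all leaf pairs drawn from the two clusters.
        pairs = [(a, b) for a in c1[1] for b in c2[1]]
        return sum(dist[frozenset(p)] for p in pairs) / len(pairs)

    while len(clusters) > 1:
        c1, c2 = min(combinations(clusters, 2),
                     key=lambda pair: cluster_distance(*pair))
        clusters.remove(c1)
        clusters.remove(c2)
        clusters.append(((c1[0], c2[0]), c1[1] + c2[1]))
    return clusters[0][0]


# Pairwise distances for C, Fortran and OCaml from the full-dataset matrix.
DIST = {
    frozenset({"C", "Fortran"}): 7.615773,
    frozenset({"C", "OCaml"}): 8.717798,
    frozenset({"Fortran", "OCaml"}): 8.831761,
}

tree = average_linkage_tree(["C", "Fortran", "OCaml"], DIST)
print(tree)
```

For these three languages, C and Fortran are joined first (they are the closest pair), with OCaml attached to that pair afterwards.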

How confident should we be that this distance-based technique produced a robust result? For instance, would a small change to the set of keywords used by a particular language cause it to appear in a different branch of the tree?

The impact of small changes on the generated tree can be estimated using a bootstrap technique. The particular small-change algorithm used to estimate confidence levels for phylogenetic trees is not applicable for language keywords; genetic sequences contain multiple instances of four DNA bases, and can be sampled with replacement, while language keywords are a set of distinct items (i.e., cannot be sampled with replacement).

The bootstrap technique I used was: for each of the 21 languages in the data, add keywords to one language (the number added was 5% of the number of its existing keywords, randomly chosen from the set of all language keywords), calculate the distance matrix and build the corresponding tree, repeat 100 times. The 2,100 generated trees were then compared against the original tree, counting how many times each branch remained the same.

The numbers in the above plot show the percentage of generated trees where the same branching decision was made using the perturbed keyword data. The branching decisions all look very solid.
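The perturb-and-recount loop can be sketched as follows. This is a simplified illustration, not the code behind the plot: it uses three toy keyword sets, always adds at least one keyword (an assumption, needed because 5% of a tiny set rounds to zero), and compares only the closest pair of languages rather than every branch of a tree. All names are hypothetical:

```python
import math
import random
from itertools import combinations

# Toy keyword sets (the seven-keyword subset); the real data covers 21 languages.
KEYWORD_SETS = {
    "C": {"if", "then", "for", "do"},
    "Fortran": {"if", "function", "do", "dimension"},
    "OCaml": {"if", "then", "function", "for", "do", "object"},
}


def closest_pair(keyword_sets):
    """Pair of languages with the smallest Euclidean distance.

    For 0/1 presence vectors the distance is sqrt(size of symmetric difference).
    """
    return min(combinations(sorted(keyword_sets), 2),
               key=lambda p: math.sqrt(len(keyword_sets[p[0]] ^ keyword_sets[p[1]])))


def perturb(keyword_sets, language, rng, fraction=0.05):
    """Copy of keyword_sets with extra keywords added to one language,
    drawn at random from the keywords used by any language."""
    pool = set().union(*keyword_sets.values())
    current = keyword_sets[language]
    candidates = sorted(pool - current)
    n_extra = min(len(candidates), max(1, round(fraction * len(current))))
    perturbed = dict(keyword_sets)
    perturbed[language] = current | set(rng.sample(candidates, n_extra))
    return perturbed


def stability(keyword_sets, repeats=100, seed=1):
    """Percentage of perturbed datasets whose closest pair matches the original."""
    rng = random.Random(seed)
    original = closest_pair(keyword_sets)
    same = total = 0
    for language in keyword_sets:
        for _ in range(repeats):
            total += 1
            same += closest_pair(perturb(keyword_sets, language, rng)) == original
    return 100 * same / total


percent_same = stability(KEYWORD_SETS)
print(f"closest pair unchanged in {percent_same:.0f}% of perturbed datasets")
```

A full version would rebuild the tree for each perturbed dataset and count, branch by branch, how often the same split appears.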

Can this keyword approach to language comparison be applied to all languages?

I think that most languages have some form of keywords. A few languages don’t use keywords (or reserved words), and there are some edge cases. Lisp doesn’t have any reserved words (they are functions), nor technically does PL/1 in that the names of ‘word tokens’ can be defined as variables, and CHILL implementors have to choose between using Cobol or PL/1 syntax (giving CHILL two possible distinct sets of keywords).

To what extent are a language’s keywords representative of the language, compared to other languages?

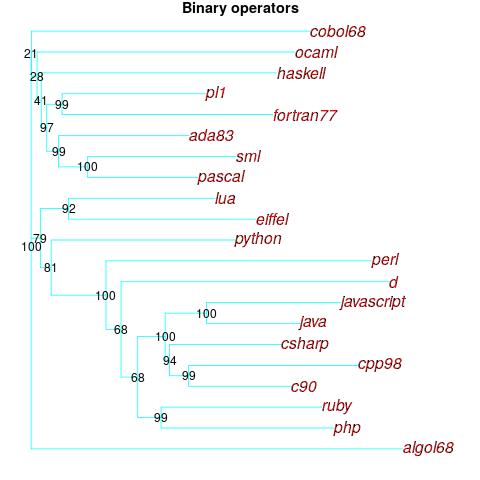

One way to try and answer this question is to apply the distance/tree approach using other language traits; do the resulting trees have the same form as the keyword tree? The plot below shows the tree derived from the characters used to represent binary operators (code+data):

A few of the branching decisions look as if they are likely to change, if there are changes to the keywords used by some languages, e.g., OCaml and Haskell.

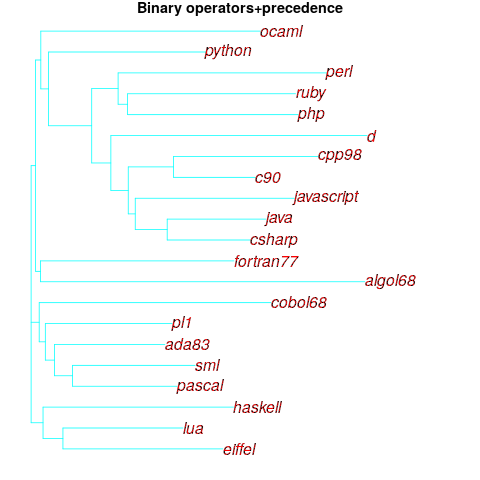

Binary operators don’t just have a character representation, they can also have a precedence and associativity (neither are needed in languages whose expressions are written using prefix or postfix notation).

The plot below shows the tree derived from combining binary operator and the corresponding precedence information (the distance pairs for the two characteristics, for each language, were added together, with precedence given a weight of 20%; see code for details).

No bootstrap percentages appear because I could not come up with a simple technique for handling a combination of traits.

Are binary operators more representative of a language than its keywords? Would a combined keyword/binary operator tree be more representative, or would more traits need to be included?

Does reducing language comparison to a single number produce something useful?

Languages contain a complex collection of interrelated components, and it might be more useful to compare their similarity by discrete components, e.g., expressions, literals, types (and implicit conversions).

What is the purpose of comparing languages?

If it is for promotional purposes, then a measurement based approach is probably out of place.

If the comparison has a source code orientation, weighting items by source code occurrence might produce a more applicable tree.

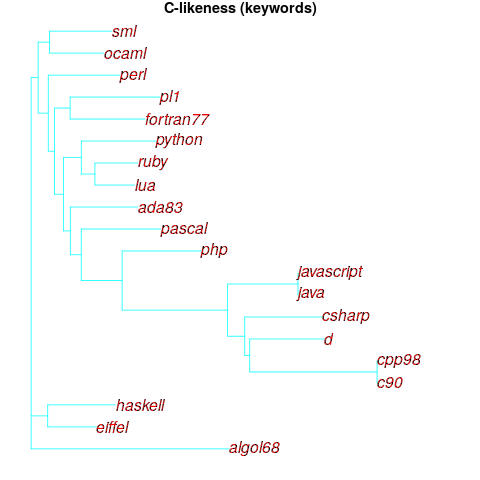

Sometimes one language is used as a reference, against which others are compared, e.g., C-like. How ‘C-like’ are other languages? Taking keywords as our reference-point, comparing languages based on just the keywords they have in common with C, the plot below is the resulting tree:

I had expected less branching, i.e., more languages having the same distance from C.

New languages can be supported by adding a language file containing the appropriate trait information. There is a GitHub repo, prog-lang-traits; send me a pull request to add your language file.

It’s also possible to add support for more language traits.

Creating and evolving a programming language: funding

The funding for artists and designers/implementors of programming languages shares some similarities.

Rich patrons used to sponsor a few talented painters/sculptors/etc, although many artists had no sponsors and worked for little or no money. Designers of programming languages sometimes have a rich patron, in the form of a company looking to gain some commercial advantage, but most language designers have a day job and work on a side project that might have a connection to their job (e.g., researchers).

Why would a rich patron sponsor the creation of an art work/language?

Possible reasons include: Enhancing the patron’s reputation within the culture in which they move (attracting followers, social or commercial), and influencing people’s thinking (to have views that are more in line with those of the patron).

During 2009-2012 it suddenly became fashionable for major tech companies to have their own home-grown corporate language: Go, Rust, Dart and Typescript are some of the languages that achieved a notable level of brand recognition. Microsoft, with its long-standing focus on developers, was ahead of the game, with the introduction of F# in 2005 (and other languages in earlier and later years). The introductions of Swift and Hack in 2014 were driven by solid commercial motives (i.e., control of developers and reduced maintenance costs respectively); Google’s adoption of Kotlin, introduced by a minor patron in 2011, was driven by their losing of the Oracle Java lawsuit.

Less rich patrons also sponsor languages, with the idiosyncratic Ivor Tiefenbrun even sponsoring the creation of a bespoke cpu to speed up the execution of programs written in the company language.

The benefit of having a rich sponsor is the opportunity it provides to continue working on what has been created, evolving it into something new.

Self sponsored individuals and groups also create new languages, with the better known recent examples including Clojure and Julia.

What opportunities are available for initially self sponsored individuals to support themselves, while they continue to work on what has been created?

The growth of the middle class, and its interest in art, provided a means for artists to fund their work by attracting smaller sums from a wider audience.

In the last 10-15 years, some language creators have fostered a community driven approach to evolving and promoting their work. As well as being directly involved in working on the language and its infrastructure, members of a community may also contribute or help raise funds. There has been a tiny trickle of developers leaving their day job to work full time on ‘their’ language.

The term Hedonism driven development is a good description of this kind of community development.

People have been creating new languages since computers were invented, and I don’t expect this desire to create new languages to stop anytime soon. How long might a language community be expected to last?

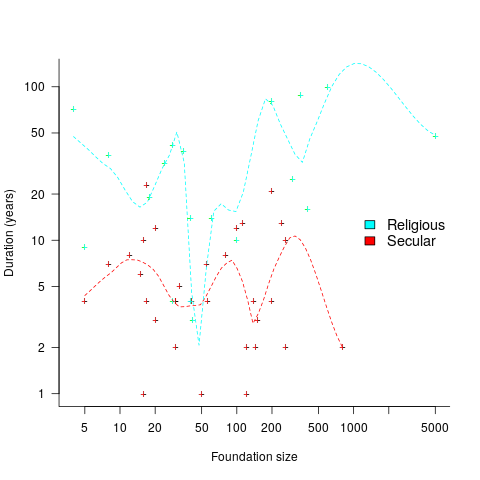

Having lots of commercially important code implemented in a language creates an incentive for that language’s continued existence, e.g., companies paying for support. When little or no commercially important code is available to create an external incentive, a language community will continue to be active for as long as its members invest in it. The plot below shows the lifetime of 32 secular and 19 religious 19th century American utopian communities, based on their size at foundation; lines are fitted loess regression (code+data):

How many self-sustaining language communities are there, and how many might the world’s population support?

My tracking of new language communities is a side effect of the blogs I follow and the few community sites I visit regularly; so a tiny subset of the possibilities. I know of a handful of ‘new’ language communities; with ‘new’ as in not having a Wikipedia page (yet).

One list contains, up until 2005, 7,446 languages. I would not be surprised if this was off by almost an order of magnitude. Wikipedia has a very idiosyncratic and brief timeline of programming languages, and a very incomplete list of programming languages.

I await a future social science PhD thesis for a more thorough analysis of current numbers.

Widely used programming languages: past, present, and future

Programming languages are like pop groups in that they have followers, fans and supporters; new ones are constantly being created and some eventually become widely popular, while those that were once popular slowly fade away or mutate into something else.

Creating a language is a relatively popular activity. Science fiction and fantasy authors have been doing it since before computers existed, e.g., the Elf language Quenya devised by Tolkien, and in the computer age Star Trek’s Klingon. Some very good how-to books have been written on the subject.

As soon as computers became available, people started inventing programming languages.

What have been the major factors influencing the growth to widespread use of new programming languages (I’m ignoring languages that become widespread within application niches)?

Cobol and Fortran became widely used because there was widespread implementation support for them across computer manufacturers, and they did not have to compete with any existing widely used languages. Various niches had one or more languages that were widely used in that niche, e.g., Algol 60 in academia.

To become widely used during the mainframe/minicomputer age, a new language first had to be ported to the computers of the major vendors of the day, whose products sometimes supported multiple, incompatible operating systems. No new languages became widely used, in the sense of across computer vendors. Some new languages were widely used by developers, because they were available on IBM computers; for several decades a large percentage of developers used IBM computers. Based on job adverts, RPG was widely used, but PL/1 not so. The use of RPG declined with the decline of IBM.

The introduction of microcomputers (originally 8-bit, then 16, then 32, and finally 64-bit) opened up an opportunity for new languages to become widely used in that niche (which would eventually grow to be the primary computing platform of its day). This opportunity occurred because compiler vendors for the major languages of the day did not want to cannibalize their existing market (i.e., selling compilers for a lot more than the price of a microcomputer) by selling a much lower priced product on microcomputers.

BASIC became available on practically all microcomputers, or rather some dialect of BASIC that was incompatible with all the other dialects. The availability of BASIC on a vendor’s computer promoted sales of the hardware, and it was not worthwhile for the major vendors to create a version of BASIC that reduced portability costs; the profit was in games.

The dominance of the Microsoft/Intel partnership removed the high cost of porting to lots of platforms (by driving them out of business), but created a major new obstacle to the wide adoption of new languages: Developer choice. There had always been lots of new languages floating around, but people only got to see the subset that were available on the particular hardware they targeted. Once the cpu/OS (essentially) became a monoculture most new languages had to compete for developer attention in one ecosystem.

Pascal was in widespread use for a few years on micros (in the form of Turbo Pascal) and university computers (the source of Wirth’s ETH compiler was freely available for porting), but eventually C won developer mindshare and became the most widely used language. In the early 1990s C++ compiler sales took off, but many developers were writing C with a few C++ constructs scattered about the code (e.g., use of new, rather than malloc/free).

Next, the Internet took off, and opened up an opportunity for new languages to become dominant. This opportunity occurred because Internet related software was being made freely available, and established compiler vendors were not interested in making their products freely available.

There were people willing to invest in creating a good-enough implementation of the language they had invented, and giving it away for free. Luck, plus being in the right place at the right time resulted in PHP and Javascript becoming widely used. Network effects prevent any other language becoming widely used. Compatible dialects of PHP and Javascript may migrate widespread usage to quite different languages over time, e.g., Facebook’s Hack.

Java rode to popularity on the coat-tails of the Internet, and when it looked like security issues would reduce it to niche status, it became the vendor supported language for one of the major smart-phone OSs.

Next, smart-phones took off, but the availability of Open Source compilers closed the opportunity window for new languages to become dominant through lack of interest from existing compiler vendors. Smart-phone vendors wanted to quickly attract developers, which meant throwing their weight behind a language that many developers were already familiar with; Apple went with Objective-C (which evolved to Swift), Google with Java (which evolved to Kotlin, because of the Oracle lawsuit).

Where does Python fit in this grand scheme? I don’t yet have an answer. Or is my world-view wrong to treat Python usage as being as widespread as C/C++/Java?

New programming languages continue to be implemented; I don’t see this ever stopping. Most don’t attract more users than their implementer, but a few become fashionable amongst the young, who are always looking to attach themselves to something new and shiny.

Will a new programming language ever again become widely used?

Like human languages, programming languages experience strong networking effects. Widely used languages continue to be widely used because many companies depend on code written in them, and developers who can use them can obtain jobs. What company wants to risk using a new language, only to find they cannot hire staff who know it? And there are not many people willing to invest in becoming fluent in a language with no immediate job prospects.

Today’s widely used programming languages succeeded in a niche that eventually grew larger than all the other computing ecosystems. The Internet and smart-phones are used by everybody on the planet; there are no bigger ecosystems to provide new languages with a possible route to widespread use. To be widely used a language first has to become fashionable, but from now on, new programming languages that don’t evolve from (i.e., are compatible with) current widely used languages are very unlikely to migrate from fashionable to widely used.

It has always been possible for a proficient developer to dedicate a year+ of effort to create a new language implementation. Adding the polish needed to make it production ready used to take much longer, but these days tool chains such as LLVM supply a lot of the heavy lifting. The problem for almost all language creators/implementers is community building; they are terrible at dealing with other developers.

It’s no surprise that nearly all the new languages that become fashionable originate with language creators who work for a company that happens to feel a need for a new language. Examples include:

- Go was created by Google for internal use, and attracted an outside fan base. Company languages are not new, with IBM’s PL/1 being the poster child (or is there a more modern poster child?). At the moment Go is a trendy language, and this feeds a supply of young developers willing to invest in learning it. Once the trendiness wears off, Google will start to have problems recruiting developers; the reason: being labelled as a Go developer limits job prospects when few other companies use the language. Talk to a manager who has tried to recruit developers to work on applications written in Fortran, Pascal and other once-widely used languages (and even wannabe widely used languages, such as Ada),

- Rust, a vanity project from Mozilla, which they have now cast adrift. Did Rust become fashionable because it arrived at the right time to become the not-Google language? I await a PhD thesis on the topic of the rise and fall of Rust,

- Microsoft’s C# ceased being trendy some years ago. These days I don’t have much contact with developers working in the Microsoft ecosystem, so I don’t know anything about the state of the C# job market.

Every now and again a language creator has the social skills needed to start an active community. Zig caught my attention when I read that its creator, Andrew Kelley, had quit his job to work full-time on Zig. Two and a half years later Zig has its own track at FOSDEM’21.

Will Zig become the next fashionable language, as Rust/Go popularity fades? I’m rooting for Zig because of its name; there are relatively few languages whose name starts with Z, and the start of the alphabet is over-represented with language names. It would be foolish to root for a language because of a belief that it has magical properties (e.g., powerful, readable, maintainable), but the young are foolish.

Changes in the shape of code during the twenties?

At the end of 2009 I made two predictions for the next decade: Chinese and Indian developers having a major impact on the shape of code (ok, still waiting for this to happen), and scripting languages playing a significant role (got that one right, but then they were already playing a large role).

Since this blog has just entered its second decade, I will bring the next decade’s predictions forward a year.

I don’t see any new major customer ecosystems appearing. Ecosystems are the drivers of software development, and the absence of new ecosystems has several consequences, including:

- No major new languages: Creating a language is a vanity endeavor. Vanity projects can take off if they are in the right place at the right time. New ecosystems provide opportunities for new languages to become widely used by being in at the start and growing with the ecosystem. There is another opportunity locus; it is fashionable for companies that see themselves as thought-leaders to have their own language, e.g., Google, Apple, and Mozilla. Invent your language at the right time, while working for a thought-leader company, and your language could become well-known enough to take off.

I don’t see any major new ecosystems appearing and all the likely companies already have their own language.

Any new language also faces the problem of not having a large collection of packages.

- Software will be more thoroughly tested: When an ecosystem is new, the incentives drive early and frequent releases (to build a customer base); software just has to be good enough. Once a product is established, companies can invest in addressing issues that customers find annoying, like faulty behavior; the incentive change results in more testing.

There are other forces at work around testing. Companies are experiencing some very expensive faults (testing may be expensive, but not testing may be more expensive) and automatic test generation is becoming commercially usable (i.e., the cost of some kinds of testing is decreasing).

The evolution of widely used languages.

- I think Fortran and C will have new features added, with relatively little fuss, and will quietly continue to be widely used (to the dismay of the fashionista).

- There is a strong expectation that C++ and Java should continue to evolve:

- I expect the ISO C++ work to implode, because there are too many people pulling in too many directions. It makes sense for the gcc and llvm teams to cooperate in taking C++ in a direction that satisfies developers’ needs, rather than the needs of bored consultants. What are Microsoft’s views? They only have their own compiler for strategic reasons (they make little if any profit selling compilers, compilers are an unnecessary drain on management time; who cares what happens to the language).

- It is going to be interesting watching the impact of Oracle’s move to charging for runtimes. I have no idea what might happen to Java.

In terms of code volume, the future surely has to be scripting languages, and in particular Python, Javascript and PHP. Ten years from now, will there be a widely used, single language? People have been predicting, for many years, that web languages will take over the world; perhaps there will be a sudden switch and I will see that the choice is obvious.

Moore’s law is now dead, which means researchers are going to have to look for completely new techniques for building logic gates. If photonic computers happen, then ternary notation may reappear (it was used in at least one early Russian computer); I’m not holding my breath for this to occur.

Premium mediocrity is software engineering’s demographic

Software engineering is one of the skills needed to write software, but outside of student coursework is rarely an end in itself. Software is written to do something and the person writing the code needs to know about the something.

If enough people are involved in something, a job title gets created by inserting the appropriate application domain name before ‘software engineer’, e.g., the something software engineer; systems software engineering was one of the first recorded uses of ‘software engineering’, ‘embedded software engineer’ is a common usage and more recently ‘research software engineer’ has been trending.

Customers want the software systems they use to fulfill their needs. Implementing a software system involves figuring out what the needs are, how best to implement them using the available resources and producing usable software; all within a given amount of time and money.

How much software engineering knowledge and skill does a something software engineer need? The obvious answer is: enough to get the something done. Ok, how much is needed to get the something done?

There are so many hours in a day: what percentage of available time is best spent learning about software engineering, what percentage learning about the something and what percentage doing rather than learning?

The only data I have for answering this question is my own experience of talking to people, from a wide range of business and application areas, whose job includes writing software. My background is compilers (from C to Cobol) and static analysis; my knowledge of end-user application domains is derived from talking to the developers who were using the compilers or static analysis tools I was working on at the time.

I have always been struck by the minimalist knowledge of most developers, when it comes to the programming language they were using. It took a while, but eventually I accepted the obvious: most developers don’t need to know much about the language they are using to get their job done.

By a process that resembles incentivized trial and error, people learn how to write code that does what they want; the compiler does not complain and the output looks ok. For some languages, I used to be able to work out which books a relatively new developer had used to guide their learning, by matching a book’s example code snippets with the code they had written.

This minimalist knowledge approach to programming languages is cost effective because most code is simple and has a short lifetime; the cost of learning lots of language details does not provide enough benefit to be worthwhile.

I am a minimalist-knowledge Python developer. Why would I spend time learning more about the semantics of Python than I need to?

What are the benefits of being a language expert? Compiler writers get paid to learn the ins and outs of a language and I know a few people who became language experts without being compiler writers (they got hooked on knowing the language). I have found it useful for keeping my code simple (I am not tempted to write complicated code, or use obscure constructs, in the mistaken belief that they are better than the simple stuff); it is also useful for figuring out other people’s complicated or obscure usage (created intentionally or accidentally).

These benefits are not enough to convince me to learn more about Python, the language. I am content to wait until I need to learn more.

I have occasionally taught advanced programming courses, aimed at developers with a few years’ experience working in industry. These courses had to include the word ‘advanced’ in their title, otherwise developers with a few years’ experience would never have signed up; ‘advanced’ is a necessary marketing signal (others who have run such courses report the same behavior). The course contents were essentially a review of basic material, with lots of examples; most of those attending did not know enough to follow real advanced material. The courses were really about uncovering and correcting bad habits that attendees had picked up over time (often, a technique was discovered to fix a problem and then subsequently adopted for more general use).

What about general software engineering skills? A minimalist knowledge approach to software engineering is cost effective because most code does not exist long enough to make it worthwhile investing in reducing future maintenance costs. Yes, it is more expensive for those that survive to become commonly used, but think of all the savings from not investing in those that did not survive. Software engineering decisions should not be driven by survivorship bias.

The first requirement of any commercial software system is to attract paying customers. In a rapidly changing market, being first with a saleable product can be the difference between life and death. Minimizing software engineering effort saves time and money (in the short term). If the product is a success, there will be money to pay for what needs to be done; if the product fails, nobody cares. I have seen a lot of software systems that are a commercial success and a complete software engineering mess; successful, well engineered software is less common (or perhaps they just don’t need me to help them out).

Software engineering mediocrity is not only viable, for most people it’s the outcome of making a cost/benefit decision to invest their learning time in the application domain, not software engineering (or computer language).

Of course, nobody wants to be seen as being mediocre (for some people, mediocre overstates their skill level); their behavior is premium mediocre.

There are a few application areas where software engineering skills are needed, e.g., safety critical software and warehouse scale computing. A few high profile cases are hiding the reality that whatever works is cost effective for most software solutions.

Evidence for 28 possible compilers in 1957

In two earlier posts I discussed the early compilers for languages that are still widely used today and a report from 1963 showing how nothing has changed in programming languages.

The Handbook of Automation, Computation, and Control Volume 2, published in 1959, contains some interesting information. In particular Table 23 (below) is a list of “Automatic Coding Systems” (containing over 110 systems from 1957, of which 54 have a cross in the compiler column):

Columns: Computer, System Name or Acronym, Developed by, Code, M.L., Assem, Inter, Comp, Oper-Date, Indexing, Fl-Pt, Symb., Algeb.

IBM 704 AFAC Allison G.M. C X Sep 57 M2 M 2 X

CAGE General Electric X X Nov 55 M2 M 2

FORC Redstone Arsenal X Jun 57 M2 M 2 X

FORTRAN IBM R X Jan 57 M2 M 2 X

NYAP IBM X Jan 56 M2 M 2

PACT IA Pact Group X Jan 57 M2 M 1

REG-SYMBOLIC Los Alamos X Nov 55 M2 M 1

SAP United Aircraft R X Apr 56 M2 M 2

NYDPP Servo Bur. Corp. X Sep 57 M2 M 2

KOMPILER3 UCRL Livermore X Mar 58 M2 M 2 X

IBM 701 ACOM Allison G.M. C X Dec 54 S1 S 0

BACAIC Boeing Seattle A X X Jul 55 S 1 X

BAP UC Berkeley X X May 57 2

DOUGLAS Douglas SM X May 53 S 1

DUAL Los Alamos X X Mar 53 S 1

607 Los Alamos X Sep 53 1

FLOP Lockheed Calif. X X X Mar 53 S 1

JCS 13 Rand Corp. X Dec 53 1

KOMPILER 2 UCRL Livermore X Oct 55 S2 1 X

NAA ASSEMBLY N. Am. Aviation X

PACT I Pact Group R X Jun 55 S2 1

QUEASY NOTS Inyokern X Jan 55 S

QUICK Douglas ES X Jun 53 S 0

SHACO Los Alamos X Apr 53 S 1

SO 2 IBM X Apr 53 1

SPEEDCODING IBM R X X Apr 53 S1 S 1

IBM 705-1, 2 ACOM Allison G.M. C X Apr 57 S1 0

AUTOCODER IBM R X X X Dec 56 S 2

ELI Equitable Life C X May 57 S1 0

FAIR Eastman Kodak X Jan 57 S 0

PRINT I IBM R X X X Oct 56 S2 S 2

SYMB. ASSEM. IBM X Jan 56 S 1

SOHIO Std. Oil of Ohio X X X May 56 S1 S 1

FORTRAN IBM-Guide A X Nov 58 S2 S 2 X

IT Std. Oil of Ohio C X S2 S 1 X

AFAC Allison G.M. C X S2 S 2 X

IBM 705-3 FORTRAN IBM-Guide A X Dec 58 M2 M 2 X

AUTOCODER IBM A X X Sep 58 S 2

IBM 702 AUTOCODER IBM X X X Apr 55 S 1

ASSEMBLY IBM X Jun 54 1

SCRIPT G. E. Hanford R X X X X Jul 55 S1 S 1

IBM 709 FORTRAN IBM A X Jan 59 M2 M 2 X

SCAT IBM-Share R X X Nov 58 M2 M 2

IBM 650 ADES II Naval Ord. Lab X Feb 56 S2 S 1 X

BACAIC Boeing Seattle C X X X Aug 56 S 1 X

BALITAC M.I.T. X X X Jan 56 S1 2

BELL L1 Bell Tel. Labs X X Aug 55 S1 S 0

BELL L2,L3 Bell Tel. Labs X X Sep 55 S1 S 0

DRUCO I IBM X Sep 54 S 0

EASE II Allison G.M. X X Sep 56 S2 S 2

ELI Equitable Life C X May 57 S1 0

ESCAPE Curtiss-Wright X X X Jan 57 S1 S 2

FLAIR Lockheed MSD, Ga. X X Feb 55 S1 S 0

FOR TRANSIT IBM-Carnegie Tech. A X Oct 57 S2 S 2 X

IT Carnegie Tech. C X Feb 57 S2 S 1 X

MITILAC M.I.T. X X Jul 55 S1 S 2

OMNICODE G. E. Hanford X X Dec 56 S1 S 2

RELATIVE Allison G.M. X Aug 55 S1 S 1

SIR IBM X May 56 S 2

SOAP I IBM X Nov 55 2

SOAP II IBM R X Nov 56 M M 2

SPEED CODING Redstone Arsenal X X Sep 55 S1 S 0

SPUR Boeing Wichita X X X Aug 56 M S 1

FORTRAN (650T) IBM A X Jan 59 M2 M 2

Sperry Rand 1103A COMPILER I Boeing Seattle X X May 57 S 1 X

FAP Lockheed MSD X X Oct 56 S1 S 0

MISHAP Lockheed MSD X Oct 56 M1 S 1

RAWOOP-SNAP Ramo-Wooldridge X X Jun 57 M1 M 1

TRANS-USE Holloman A.F.B. X Nov 56 M1 S 2

USE Ramo-Wooldridge R X X Feb 57 M1 M 2

IT Carn. Tech.-R-W C X Dec 57 S2 S 1 X

UNICODE R Rand St. Paul R X Jan 59 S2 M 2 X

Sperry Rand 1103 CHIP Wright A.D.C. X X Feb 56 S1 S 0

FLIP/SPUR Convair San Diego X X Jun 55 S1 S 0

RAWOOP Ramo-Wooldridge R X Mar 55 S1 1

SNAP Ramo-Wooldridge R X X Aug 55 S1 S 1

Sperry Rand Univac I and II A0 Remington Rand X X X May 52 S1 S 1

A1 Remington Rand X X X Jan 53 S1 S 1

A2 Remington Rand X X X Aug 53 S1 S 1

A3,ARITHMATIC Remington Rand C X X X Apr 56 S1 S 1

AT3,MATHMATIC Remington Rand C X X Jun 56 S1 S 2 X

B0,FLOWMATIC Remington Rand A X X X Dec 56 S2 S 2

BIOR Remington Rand X X X Apr 55 1

GP Remington Rand R X X X Jan 57 S2 S 1

MJS (UNIVAC I) UCRL Livermore X X Jun 56 1

NYU,OMNIFAX New York Univ. X Feb 54 S 1

RELCODE Remington Rand X X Apr 56 1

SHORT CODE Remington Rand X X Feb 51 S 1

X-I Remington Rand C X X Jan 56 1

IT Case Institute C X S2 S 1 X

MATRIX MATH Franklin Inst. X Jan 58

Sperry Rand File Compo ABC R Rand St. Paul Jun 58

Sperry Rand Larc K5 UCRL Livermore X X M2 M 2 X

SAIL UCRL Livermore X M2 M 2

Burroughs Datatron 201, 205 DATACODEI Burroughs X Aug 57 MS1 S 1

DUMBO Babcock and Wilcox X X

IT Purdue Univ. A X Jul 57 S2 S 1 X

SAC Electrodata X X Aug 56 M 1

UGLIAC United Gas Corp. X Dec 56 S 0

Dow Chemical X

STAR Electrodata X

Burroughs UDEC III UDECIN-I Burroughs X 57 M/S S 1

UDECOM-3 Burroughs X 57 M S 1

M.I.T. Whirlwind ALGEBRAIC M.I.T. R X S2 S 1 X

COMPREHENSIVE M.I.T. X X X Nov 52 S1 S 1

SUMMER SESSION M.I.T. X Jun 53 S1 S 1

Midac EASIAC Univ. of Michigan X X Aug 54 S1 S

MAGIC Univ. of Michigan X X X Jan 54 S1 S

Datamatic ABC I Datamatic Corp. X

Ferranti TRANSCODE Univ. of Toronto R X X X Aug 54 M1 S

Illiac DEC INPUT Univ. of Illinois R X Sep 52 S1 S

Johnniac EASY FOX Rand Corp. R X Oct 55 S

Norc NORC COMPILER Naval Ord. Lab X X Aug 55 M2 M

Seac BASE 00 Natl. Bur. Stds. X X

UNIV. CODE Moore School X Apr 55

Chart Symbols used:

Code: R = Recommended for this computer, sometimes only for heavy usage. C = Common language for more than one computer. A = System is both recommended and has common language.

Indexing: M = Actual Index registers or B boxes in machine hardware. S = Index registers simulated in synthetic language of system. 1 = Limited form of indexing, either stepped unidirectionally or by one word only, or having certain registers applicable to only certain variables, or not compound (by combination of contents of registers). 2 = General form, any variable may be indexed by any one or combination of registers which may be freely incremented or decremented by any amount.

Floating point: M = Inherent in machine hardware. S = Simulated in language.

Symbolism: 0 = None. 1 = Limited, either regional, relative or exactly computable. 2 = Fully descriptive English word or symbol combination which is descriptive of the variable or the assigned storage.

Algebraic: A single continuous algebraic formula statement may be made. Processor has mechanisms for applying associative and commutative laws to form operative program.

M.L. = Machine language. Assem. = Assemblers. Inter. = Interpreters. Compl. = Compilers.

Are the compilers really compilers as we know them today, or is this terminology that has not yet settled down? The computer terminology chapter refers readers interested in Assembler, Compiler and Interpreter to the entry for Routine:

“Routine. A set of instructions arranged in proper sequence to cause a computer to perform a desired operation or series of operations, such as the solution of a mathematical problem.

…

Compiler (compiling routine), an executive routine which, before the desired computation is started, translates a program expressed in pseudo-code into machine code (or into another pseudo-code for further translation by an interpreter).

…

Assemble, to integrate the subroutines (supplied, selected, or generated) into the main routine, i.e., to adapt, to specialize to the task at hand by means of preset parameters; to orient, to change relative and symbolic addresses to absolute form; to incorporate, to place in storage.

…

Interpreter (interpretive routine), an executive routine which, as the computation progresses, translates a stored program expressed in some machine-like pseudo-code into machine code and performs the indicated operations, by means of subroutines, as they are translated. …”

The definition of “Assemble” sounds more like a link-load than an assembler.

When the coding system has a cross in both the assembler and compiler column, I suspect we are dealing with what would be called an assembler today. There are 28 crosses in the Compiler column that do not have a corresponding entry in the assembler column; does this mean there were 28 compilers in existence in 1957? I can imagine many of the languages being very simple (the fashionability of creating programming languages was already being called out in 1963), so producing a compiler for them would be feasible.
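The counting rule used above (a cross in the Compiler column and none in the Assembler column) is easy to state as code. What follows is only a minimal sketch: the `CodingSystem` record, the `compiler_only` helper and the four placeholder rows are all hypothetical, and the flags are illustrative values, not transcribed from Table 23.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CodingSystem:
    """One row of a Table 23 style listing (hypothetical record layout)."""
    name: str
    assembler: bool  # cross in the Assem. column
    compiler: bool   # cross in the Comp. column


def compiler_only(systems):
    """Return the systems flagged as compilers that have no assembler flag."""
    return [s for s in systems if s.compiler and not s.assembler]


# Placeholder rows: names and flags are made up for illustration,
# they are NOT taken from the handbook's table.
sample = [
    CodingSystem("SYSTEM-A", assembler=False, compiler=True),
    CodingSystem("SYSTEM-B", assembler=True, compiler=True),
    CodingSystem("SYSTEM-C", assembler=True, compiler=False),
    CodingSystem("SYSTEM-D", assembler=False, compiler=False),
]

candidates = compiler_only(sample)
print(len(candidates), [s.name for s in candidates])
```

Applying the same filter to a faithful transcription of the table would be one way to check the count of 28, assuming the crosses can be reliably assigned to their columns.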

The citation given for Table 23 contains a few typos. I think the correct reference is: Bemer, Robert W. “The Status of Automatic Programming for Scientific Problems.” Proceedings of the Fourth Annual Computer Applications Symposium, 107-117. Armour Research Foundation, Illinois Institute of Technology, Oct. 24-25, 1957.
