Archive

Analysis of Cost Performance Index for 338 projects

Project are estimated using a variety of resources. For those working at the sharp end, time is the pervasive resource. From the business perspective, the primary resource focus is on money; spending money to develop software that will make/save money.

Cost estimation data is much rarer than time estimation data (which itself is very thin on the ground).

The paper “An empirical study on a single company’s cost estimations of 338 software projects” (no public pdf currently available) by Christian Schürhoff, Stefan Hanenberg (who kindly sent me a copy of the data), and Volker Gruhn immediately caught my attention. What I am calling the Adesso dataset contains 4,713 rows relating to 338 fixed-price software projects implemented by Adesso SE (a German software and consulting company) between 2011 and the middle of 2016.

Cost estimation data is so very rare because of its commercial sensitivity. This paper deals with the commercial sensitivity issue by not releasing actual cost data, but by releasing data on a ratio of costs; the Cost Performance Index (CPI):

where:  are the actual costs (i.e., money spent) up to the current time, and

are the actual costs (i.e., money spent) up to the current time, and  is the earned value (a marketing term for the costs estimated for the planned work that has actually been completed up to the current time).

is the earned value (a marketing term for the costs estimated for the planned work that has actually been completed up to the current time).

if  , then more was spent than estimated (i.e., project is behind schedule or was underestimated), while if

, then more was spent than estimated (i.e., project is behind schedule or was underestimated), while if  , then less was spent than estimated (i.e., project is ahead of schedule or was overestimated).

, then less was spent than estimated (i.e., project is ahead of schedule or was overestimated).

The progress of a project’s implementation, in monetary terms, can be tracked by regularly measuring its CPI.

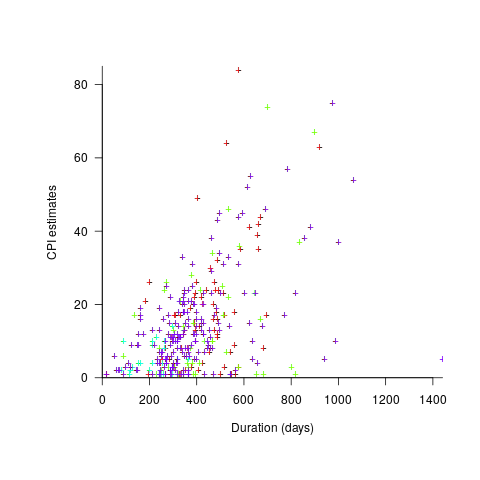

The Adesso dataset lists final values for each project (number of days being the most interesting), and each project’s CPI at various percent completed points. The plot below shows the number of CPI estimates for each project, against project duration; the assigned project numbers clustered into four bands and four colors are used to show projects in each band (code+data):

Presumably, projects that made only a handful of CPI estimates used other metrics to monitor project progress.

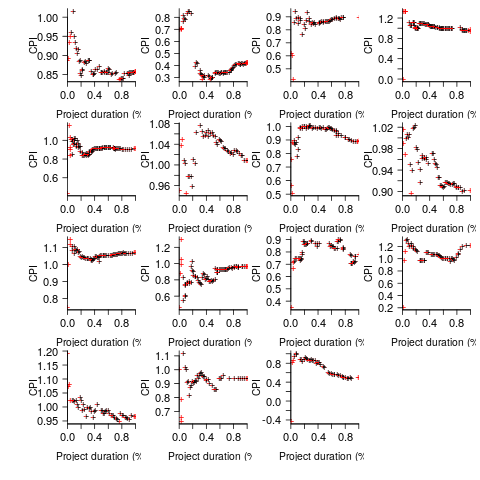

What are the patterns of change in a project’s CPI during its implementation? The plot below shows every CPI for each of 15 projects, with at least 44 CPI estimates, during implementation (code+data):

A commonly occurring theme, that will be familiar to those who have worked on projects, is that large changes usually occur at the start of the project, and then things settle down.

To continue as a going concern, a commercial company needs to make a profit. Underestimating a project may result in its implementation losing money. Losing money on some projects is not a problem, provided that the loses are cancelled out by overestimated projects making more money than planned.

While the mean CPI for the Adesso projects is 1.02 (standard deviation of 0.3), projects vary in size (and therefore costs). The data does not include project man-hours, but it does include project duration. The weighted mean, using duration as a proxy for man-hours, is 0.96 (standard deviation 0.3).

Companies cannot have long sequences of underestimated projects, creditors and shareholders will eventually call a halt. The Adesso dataset does not include any date information, so it is not possible to estimate the average CPI over shorter durations, e.g., one year.

I don’t have any practical experience of tracking project progress using earned value or CPI, and have only read theory papers on the subject (many essentially say that earned value is a great metric and everybody ought to be using it). Tips and suggestions welcome.

Pricing by quantity of source code

Software tool vendors have traditionally licensed their software on a per-seat basis, e.g., the cost increases with the number of concurrent users. Per-seat licensing works well when there is substantial user interaction, because the usage time is long enough for concurrent usage to build up. When a tool can be run non-interactively in the cloud, its use is effectively instantaneous. For instance, a tool that checks source code for suspicious constructs. Charging by lines of code processed is a pricing model used by some tool vendors.

Charging by lines of code processed creates an incentive to reduce the number of lines. This incentive was once very common, when screens supporting 24 lines of 80 characters were considered a luxury, or the BASIC interpreter limited programs to 1023 lines, or a hobby computer used a TV for its screen (a ‘tiny’ CRT screen, not a big flat one).

It’s easy enough to splice adjacent lines together, and halve the cost. Well, ease of splicing depends on programming language; various edge cases have to be handled (somebody is bound to write a tool that does a good job).

How does the tool vendor respond to a (potential) halving of their revenue?

Blindly splicing pairs of lines creates some easily detectable patterns in the generated source. In fact, some of these patterns are likely to be flagged as suspicious, e.g., if (x) a=1;b=2; (did the developer forget to bracket the two statements with { }).

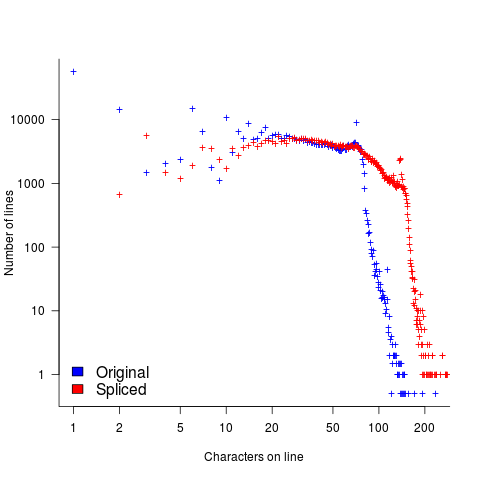

The plot below shows the number of lines in gcc 2.95 containing a given number of characters (left, including indentation), and the same count after even-numbered lines (with leading whitespace removed) have been appended to odd-numbered lines (code+data, this version of gcc was using in my C book):

The obvious change is the introduction of a third straight’ish line segment (the increase in the offset of the sharp decline might be explained away as a consequence of developers using wider windows). By only slicing the ‘right’ pairs of lines together, the obvious patterns won’t be present.

Using lines of codes for pricing has the advantage of being easy to explain to management, the people who sign off the expense, who might not know much about source code. There are other metrics that are much harder for developers to game. Counting tokens is the obvious one, but has developer perception issues: Brackets, both round and curly. In the grand scheme of things, the use/non-use of brackets where they are optional has a minor impact on the token count, but brackets have an oversized presence in developer’s psyche.

Counting identifiers avoids the brackets issue, along with other developer perceptions associated with punctuation tokens, e.g., a null statement in an else arm.

If the amount charged is low enough, social pressure comes into play. Would you want to work for a company that penny pinches to save such a small amount of money?

As a former tool vendor, I’m strongly in favour of tool vendors making a healthy profit.

Creating an effective static analysis requires paying lots of attention to lots of details, which is very time-consuming. There are lots of not particularly good Open source tools out there; the implementers did all the interesting stuff, and then moved on. I know of several groups who got together to build tools for Java when it started to take-off in the mid-90s. When they went to market, they quickly found out that Java developers expected their tools to be free, and would not pay for claimed better versions. By making good enough Java tools freely available, Sun killed the commercial market for sales of Java tools (some companies used their own tools as a unique component of their consulting or service offerings).

Could vendors charge by the number of problems found in the code? This would create an incentive for them to report trivial issues, or be overly pessimistic about flagging issues that could occur (rather than will occur).

Why try selling a tool, why not offer a service selling issues found in code?

Back in the day a living could be made by offering a go-faster service, i.e., turn up at a company and reduce the usage cost of a company’s applications, or reducing the turn-around time (e.g., getting the daily management numbers to appear in less than 24-hours). This was back when mainframes ruled the computing world, and usage costs could be eye-watering.

Some companies offer bug-bounties to the first person reporting a serious vulnerability. These public offers are only viable when the source is publicly available.

There are companies who offer a code review service. Having people review code is very expensive; tools are good at finding certain kinds of problem, and investing in tools makes sense for companies looking to reduce review turn-around time, along with checking for more issues.

When will Microsoft stop bothering to sell compilers?

The amount of money Microsoft make from selling compilers is peanuts compared to the profits from their Office and OS products (they may even lose money), and this will never change.

In the 80s, when the IBM PC was first introduced and began to take over the computing world, the Microsoft C compiler was not the market leader and not the favorite among developers who had a choice (large companies preferred to buy from large companies and so Microsoft C did well in the corporate market).

The developer market back then was not large and companies selling compilers soon found themselves selling into a saturated market, and many disappeared.

C++ came along and compiler companies jumped on this bandwagon, giving them a new product to sell for a few years.

As an OS vendor, Microsoft needed its own compiler to appear credible to developers. The libraries (the one part of the compiler suite that everybody liked) came along for free, courtesy of being an OS vendor. The IDE, Visual Studio, has grown on developers over time and has gotten a lot better. Visual C++ became the dominant Windows compiler because it was the last man standing.

Like all compilers, Visual C++ supports its own way of doing things in various parts of the language. Use of compiler language foibles are the mechanism by which application developers build porting cost barriers, to other compilers, into their code. Any large application built using Visual C++ will need a lot of effort to port to another compiler, a benefit for Microsoft.

The porting cost generated by compiler differences is a two-way street. Porting applications from another compiler to Visual C++ could be just as expensive as porting from Visual C++.

Microsoft can remove the cost of porting to Windows by supporting llvm within Visual Studio (I don’t ever see them supporting gcc for licensing reasons), plus command line support so that existing make files work and at the end of last year they sort of did just that (they bolted the llvm C/C++ front end onto the code generators for Visual C++; they obviously did not want to cause embarrassment by making it easy for developers to compare the performance of generated code ;-).

There is a large potential downside for Microsoft supporting llvm, it provides an in-your-face opportunity for current Visual C++ users to try another compiler (I suspect a goodly number have never touched any other C+ compiler). The problem with existing Visual C++ user accessing other compilers is not about code quality (Microsoft compilers have never been known for generating the fastest code), it is about making it easy for developers to learn how to make their code portable to other compilers.

Why would a company want to support a version of its product that compiles with Visual C++ abd a version that compiles with llvm? In the short term expediency, but in the long term it makes sense to use a single compiler.

Microsoft has ported it’s Office products to non-Windows platforms. Was Visual C++ involved? Using Visual C++ would have meant extending it to support the language extensions that occur in the headers files present on other platforms. There are advantages to using the compiler used to build the OS and I suspect this is what the Office team did (I don’t have any inside knowledge).

Is the only long term customer of Visual C++ the Windows development group? I wonder how hard it would be to tweak Windows+llvm so that one could build the other?

The writing is on the wall for Visual C++; how long does it have? Five years, 10?

I would not be surprised if there are a few very large customers, with many millions of lines of code they do not want to touch, who would put up the money to keep the development group on life support. Visual studio could be around 50 years from now, kept alive by the need to compile huge dinosaurs that are not quite important enough or cause enough grief to warrant rewriting. Perhaps it will end up being the oldest commercial compiler still in production use.

Recent Comments