
Predicting the future with data+logistic regression

Predicting the peak of data fitted by a logistic equation is attracting a lot of attention at the moment. Let’s see how well we can predict the final size of a software system, in lines of code, using logistic regression (code+data).

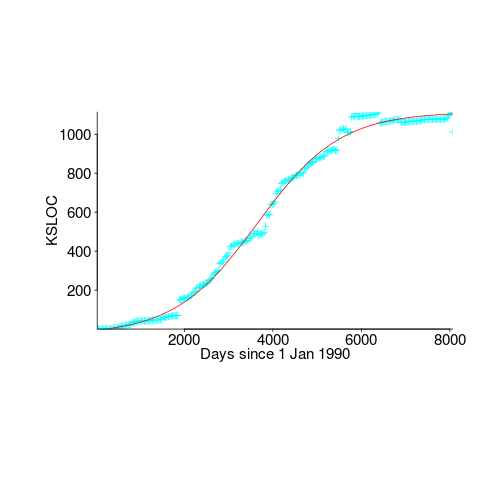

First up is the size of the GNU C library. This is not really a good test, since the peak (or rather a peak) has been reached.

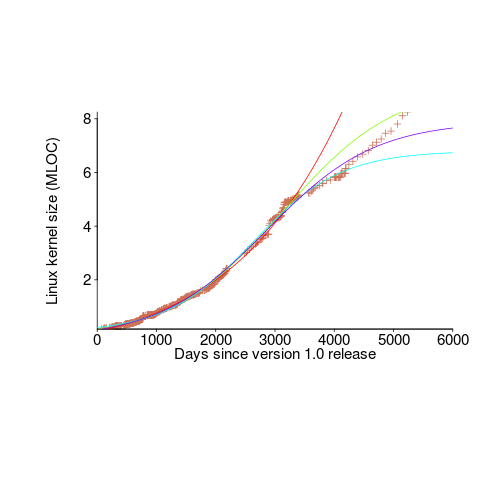

We need a system that has not yet reached an easily recognizable peak. The Linux kernel has been under development for many years, and lots of LOC counts are available. The plot below shows a logistic equation fitted to the kernel data, assuming that the only data available ran up to day 2,900, 3,650, 4,200, or 5,000+. Can you tell which fitted line corresponds to which number of days?

The underlying ‘problem’ is that we are telling the fitting software to fit a particular equation; the software does what it has been told to do, and fits a logistic equation (in this case).
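A minimal sketch of this effect, using only the Python standard library. The data is synthetic (the ceiling, growth rate and midpoint are invented, not kernel measurements), and the fitting method is a simple stand-in: for each candidate ceiling `K` the logistic is linearized and fitted by least squares, which is not necessarily how the plot was produced. The point is that the fitter returns a logistic curve whatever the cutoff day; the loop prints the ceiling it settles on for each cutoff.

```python
import math
import random

def logistic(t, K, r, t0):
    """Logistic curve that approaches the ceiling K as t grows."""
    return K / (1.0 + math.exp(-r * (t - t0)))

def fit_logistic(days, loc, K_candidates):
    """Grid search over the ceiling K.

    For a fixed K, ln(K/y - 1) = -r*(t - t0) is a straight line in t,
    so r and t0 come from ordinary least squares. The K whose curve has
    the smallest squared error on the original scale wins.
    """
    n = len(days)
    mean_t = sum(days) / n
    sxx = sum((t - mean_t) ** 2 for t in days)
    best = None
    for K in K_candidates:
        if K <= max(loc):              # ceiling must exceed every observation
            continue
        z = [math.log(K / y - 1.0) for y in loc]
        mean_z = sum(z) / n
        slope = sum((t - mean_t) * (v - mean_z) for t, v in zip(days, z)) / sxx
        r = -slope
        if r <= 0:                     # not a growth curve, skip
            continue
        t0 = (mean_z - slope * mean_t) / r
        sse = sum((logistic(t, K, r, t0) - y) ** 2 for t, y in zip(days, loc))
        if best is None or sse < best[0]:
            best = (sse, K, r, t0)
    if best is None:
        raise ValueError("no candidate ceiling fits the data")
    return best[1], best[2], best[3]

# Invented 'true' process plus 3% multiplicative noise (assumption, not real data).
TRUE_K, TRUE_R, TRUE_T0 = 15_000_000, 0.001, 4000
random.seed(1)
all_days = list(range(0, 5001, 50))
noisy_loc = [logistic(t, TRUE_K, TRUE_R, TRUE_T0) * (1 + random.gauss(0, 0.03))
             for t in all_days]
candidates = range(5_000_000, 60_000_001, 1_000_000)

for cutoff in (2900, 3650, 4200, 5000):
    days = [t for t in all_days if t <= cutoff]
    K, r, t0 = fit_logistic(days, noisy_loc[:len(days)], candidates)
    print(f"data up to day {cutoff}: estimated ceiling {K:,} lines")
```

Every cutoff produces a tidy logistic curve with its own ceiling; nothing in the output says whether a logistic was the right equation to fit in the first place.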

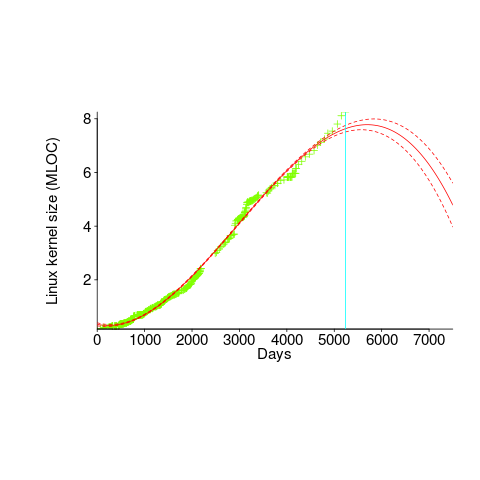

A cubic polynomial is also a great fit to the existing kernel data (red line to the left of the blue line), and this fitted equation can be extended into the future (to the right of the blue line); dotted lines are 95% confidence bounds. Do any readers believe the future size of the Linux kernel predicted by this cubic model?

Predicting the future requires lots of data on the underlying processes that drive events. Modeling events is an iterative process. Build a model, check against reality, adjust model, rinse and repeat.

If the COVID-19 experience trains people to be suspicious of future predictions made by models, it will have done something positive.

Time taken to compile a source file

How long will it take to compile a source file?

When computers were a lot slower than they are today, this question was of general interest. Job scheduling is more effective when reliable runtime estimates are available, and developers want to know if there is enough time to get a coffee before the compile finishes.

An embarrassing fact about compile time performance used to be that a large percentage of compile time was spent doing lexical analysis [“The cost of lexical analysis”, I cannot find an online copy]. Why was this embarrassing? Compiler writers like to boast about all the fancy optimizations their compiler does; but doing fancy stuff consumes lots of resources, so why were compilers spending so much of their time doing simple things like lexical analysis? The reality was that fancy compiler optimizations were not commercially viable until developer computers contained tens of megabytes of memory, i.e., very few pre-1990 compilers did any real optimization (people are still fussing over lexer performance).

An analysis of the data in Captain Dennis Miller’s Master’s thesis (late Rome period) finds that compile time is proportional to the square root of the number of tokens in the source (code+data); more complicated models are a slightly better fit. Where did square root come from? I expected a linear relationship, but would be willing to go with log. The measurements are from Ada compilers in the mid 1980s. I know several people who worked on Ada compilers during that time, and they were implementing the latest fancy optimizations (Ada was going to be the next big thing and the venture capital was flowing; big companies, with big computers, were going to be paying lots of money to use Ada, but then microcomputers came along). I think that the square root is driven by OS resource limitations: the compilers are using lots of memory and a noticeable amount of time is spent swapping.
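One common way to check for a square root relationship is to fit time = a × tokens^b on log-log axes and look at the exponent b: 0.5 means square root, 1 means linear. The sketch below does this with the standard library only. The measurements are invented (generated from an exact square root law) and the function name is mine; the analysis of the thesis data may well have been done differently.

```python
import math

def fit_power_law(tokens, seconds):
    """Least-squares fit of log(seconds) = log(a) + b*log(tokens).

    Returns (a, b) for the model seconds = a * tokens**b.
    """
    xs = [math.log(t) for t in tokens]
    ys = [math.log(s) for s in seconds]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    b = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
         / sum((x - mean_x) ** 2 for x in xs))
    a = math.exp(mean_y - b * mean_x)
    return a, b

# Invented measurements following an exact square root law (not the thesis data).
tokens = [100, 400, 1600, 6400, 25600]
seconds = [0.3 * math.sqrt(t) for t in tokens]

a, b = fit_power_law(tokens, seconds)
print(f"seconds = {a:.3f} * tokens^{b:.3f}")
```

Under a square root law, a file with four times as many tokens takes twice as long to compile; under the linear relationship I expected, it would take four times as long.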

So computers got a lot faster and people lost interest in estimates of how long it would take to compile individual files. I have not seen any interest in predicting how long it would take to compile whole projects (just complaints about how long it takes). There has been some work on progress indicators, updated as compilation progresses, which is a step in the right direction. Perhaps somebody has recorded compile time information and thrown machine learning at it; I usually ignore machine learning papers applied to software engineering and perhaps I have missed something. Pointers to project compile time prediction work welcome.

Then along came just-in-time compilation. Now people want to estimate how long it will take to generate machine code from some intermediate form that is being interpreted.

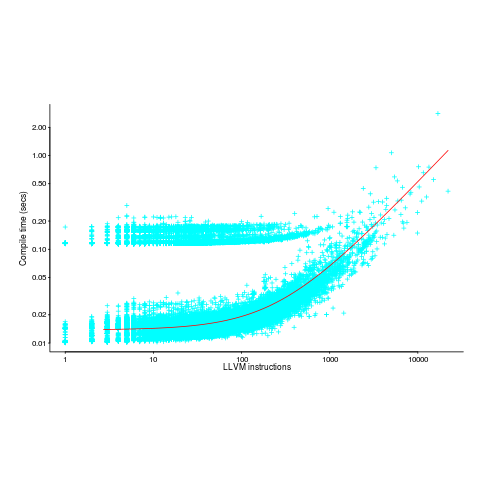

The plot below (thanks to Rafael Auler for kindly supplying the data from his paper) shows the time taken to generate code from functions containing a given number of LLVM instructions (an intermediate code), at optimization level O3. The red line is a regression fit to one of the ‘arms’ and shows constant time for fewer than 100’ish instructions and then a linear relationship. I have no idea why the time is roughly constant for a large number of functions.
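The ‘constant, then linear’ shape of the red line can be written as a hinge model: time = base + slope × max(0, instructions − breakpoint). The sketch below fits one by trying a range of breakpoints and using least squares for the other two parameters. It is an illustration only: the data points are invented, the axes are treated as untransformed (which may not match the plot), and this is not necessarily how the red line was fitted.

```python
def hinge(size, breakpoint):
    """Zero up to the breakpoint, then grows linearly."""
    return max(0, size - breakpoint)

def fit_hinge(sizes, times, breakpoints):
    """Fit time = base + slope * hinge(size, breakpoint).

    Each candidate breakpoint gets an ordinary least-squares fit of
    base and slope; the breakpoint with the smallest squared error wins.
    """
    n = len(sizes)
    mean_t = sum(times) / n
    best = None
    for bp in breakpoints:
        h = [hinge(s, bp) for s in sizes]
        mean_h = sum(h) / n
        shh = sum((v - mean_h) ** 2 for v in h)
        if shh == 0:                   # every point is below this breakpoint
            continue
        slope = sum((v - mean_h) * (t - mean_t) for v, t in zip(h, times)) / shh
        base = mean_t - slope * mean_h
        sse = sum((base + slope * v - t) ** 2 for v, t in zip(h, times))
        if best is None or sse < best[0]:
            best = (sse, bp, base, slope)
    if best is None:
        raise ValueError("no usable breakpoint")
    return best[1], best[2], best[3]

# Invented data: 2 ms flat up to 100 instructions, then 0.01 ms per instruction.
sizes = [10, 20, 50, 80, 100, 150, 200, 400, 800]
times = [2.0 + 0.01 * hinge(s, 100) for s in sizes]

bp, base, slope = fit_hinge(sizes, times, range(50, 301, 10))
print(f"breakpoint={bp} instructions, base={base:.2f} ms, slope={slope:.3f} ms/instruction")
```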

There is a lot of variation for functions containing the same number of instructions. This is to be expected when lots of different optimizations are being tried; sometimes a function will contain lots of the kind of code that a particular optimization spends a lot of time processing, and sometimes the code will not contain anything interesting (i.e., no optimizations are found).

Predictive Modeling: 15th COW workshop

I was at a very interesting workshop on Predictive Modeling and Search Based Software Engineering on Monday/Tuesday this week and am going to say something about the talks that interested me. The talks were recorded and the videos will appear on the web site in a few weeks. The CREST Open Workshop (COW) runs roughly once a month and the group leader, Mark Harman, is always on the lookout for speakers; do let him know if you are in the area.

- Tim Menzies talked about how models built from one data set did well on that data set but often not nearly as well on another (i.e., local vs global applicability of models). Academic papers usually fail to point out that any results might not be applicable outside of the limited domain examined; in fact they often give the impression of being generally applicable.

Me: Industry likes global solutions because it makes life simpler and because local data is often not available. It is a serious problem if, for existing methods, data on one part of a company’s software development activity is of limited use in predicting something about a different development activity in the same company and completely useless at predicting things at a different company.

- Yuriy Brun talked about something that is so obviously a good idea it is hard to believe that it had not been done years ago. The idea is to have your development environment be aware of what changes other software developers have made to their local copies of source files you also have checked out from version control. You are warned as soon as your local copy conflicts with somebody else’s local copy, i.e., a conflict would occur if you both checked in your local copies to the central repository. This warning has the potential to save lots of time by having developers talk to each other about resolving the conflict before doing any more work that depends on the conflicting change.

Crystal is a plug-in for Eclipse that implements this functionality, and Visual Studio support is expected in a couple of releases’ time.

I have previously written about how multi-core processors will change software development tools and I think this idea falls into that category.

- Martin Shepperd presented a very worrying finding. An analysis of the results published in 18 papers dealing with fault prediction found that the best predictor (over 60%) of agreement between results in different papers was co-authorship. That is, when somebody co-authored a paper with another person, any other papers they published were more likely to agree with other results published by that person than with results published by somebody they had not co-authored a paper with. This suggests that each separate group of authors is doing something different that significantly affects their results; this might be differences in software packages being used, differences in configuration options or tuning parameters, or something else.

It might be expected that agreement between results would depend on the techniques used, but Shepperd et al.’s analysis found this kind of dependency to be very small.

An effect is occurring that is not documented in the published papers; this is not how things are supposed to be. There was lots of interest in obtaining the raw data to replicate the analysis.

- Camilo Fitzgerald talked about predicting various kinds of feature request ‘failures’ and presented initial results based on data mined from various open source projects; possible ‘failures’ included a new feature being added and later removed, and a significant delay (e.g., 1 year) in implementing a requested feature. I have previously written about empirical software engineering only being a few years old and this research is a great example of how whole new areas of research are being opened up by the availability of huge amounts of data on open source projects.

One hint for PhD students: It is no good doing very interesting work if you don’t keep your web page up to date so people can find out more about it.

I talked to people who found other presentations very interesting. They might have failed to catch my eye because my interest in, or knowledge of, the subject is low, or because I did not understand their presentation (a few gave no background or rationale and almost instantly lost me); sometimes the talks during coffee were much more informative.
