Archive

The first computer I owned

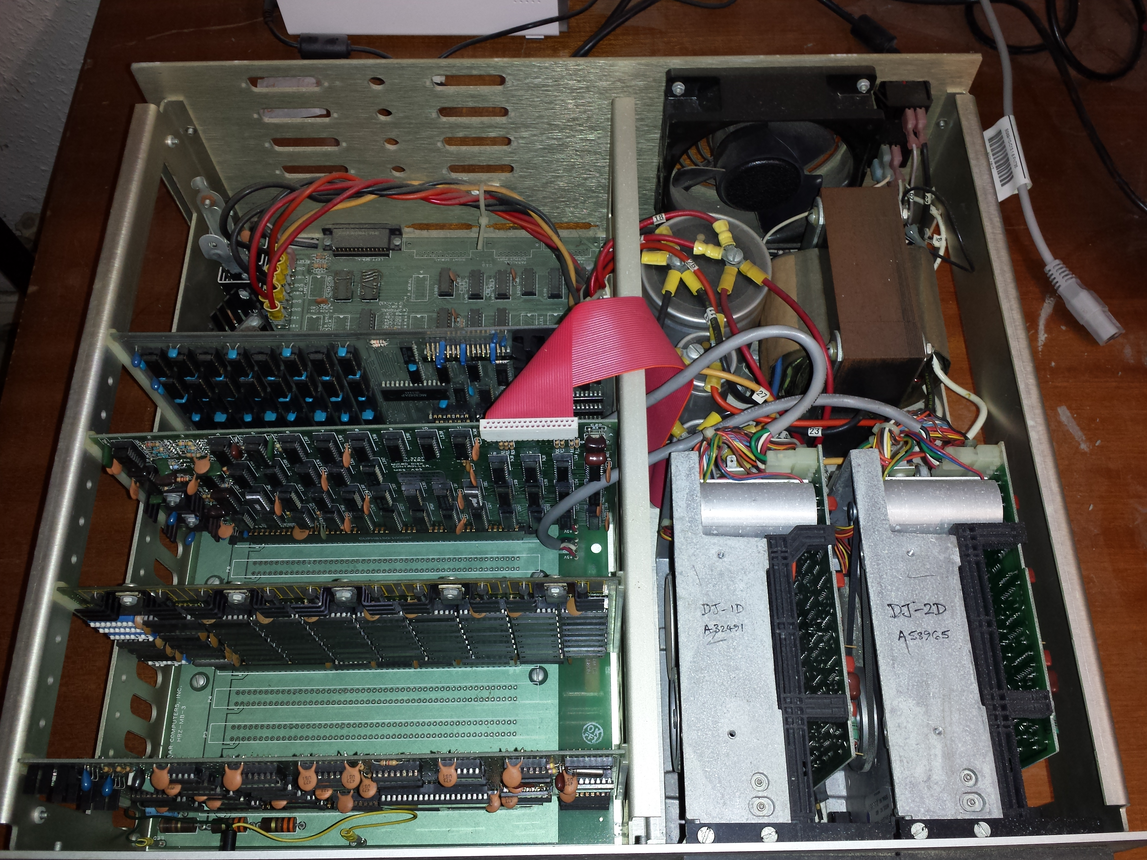

The first computer I owned was a North Star Horizon. I bought it in kit form, which meant bags of capacitors, resistors, transistors, chips, printed circuit boards, etc, along with the circuit diagrams for each board. These all had to be soldered in the right holes, the chips socketed (no surface mount soldering for such a low volume system), and wires connected. I was amazed when the system booted the first time I powered it up; debugging with the very basic equipment I had would have been a nightmare. The only missing component was the power supply transformer, and a trip to the London-based supplier sorted that out. I saved a months’ salary by building the kit (which cost me 4-months salary, and I was one of the highest paid people in my circle).

The few individuals who bought a computer in the late 1970s bought either a Horizon or a Commodore Pet (which was more expensive, but came with an integrated monitor and keyboard). Computer ownership really started to take off when the BBC micro came along at the end of 1981, and could be bought for less than a months’ salary (at least for a white-collar worker).

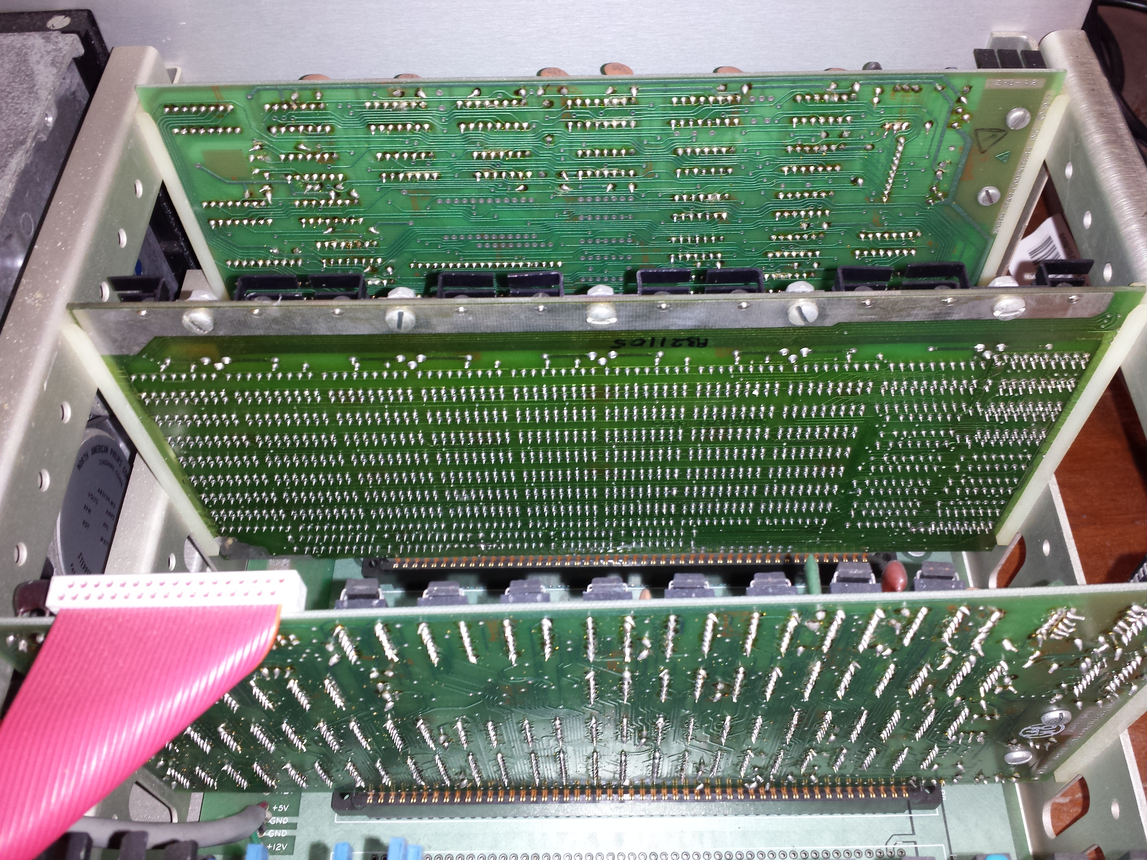

My Horizon contained a Z80A clocking at 4MHz, 32K of RAM, and two 5 1/4-inch floppy drives (each holding 360K; the Wikipedia article says the drives held 90K, mine {according to the labels on the floppies, MD 525-10} are 40-track, 10-sector, double density). I later bought another 32K of memory; the system ROM was at 56K, and contained 4K of code, various tricks allowed the 4K above 60K to be used (the consistent quality of the soldering on one of the boards below identifies the non-hand built board).

The OS that came with the system was CP/M, renamed to CP/M-80 when the Intel 8086 came along, and will be familiar to anybody used to working with early versions of MS-DOS.

As a fan of Pascal, my development environment of choice was UCSD Pascal. The C compiler of choice was BDS C.

Horizon owners are total computer people 🙂 An emulator, running under Linux and capable of running Horizon disk images, is available for those wanting a taste of being a Horizon owner. I didn’t see any mention of audio emulation in the documentation; clicks and whirls from the floppy drive were a good way of monitoring compile progress without needing to look at the screen (not content with using our Horizon’s at home, another Horizon owner and I implemented a Horizon emulator in Fortran, running on the University’s Prime computers). I wonder how many Nobel-prize winners did their calculations on a Horizon?

The Horizon spec needs to be appreciated in the context of its time. When I worked in application support at the University of Surrey, users had a default file allocation of around 100K’ish (memory is foggy). So being able to store stuff on a 360K floppy, which could be purchased in boxes of 10, was a big deal. The mainframe/minicomputers of the day were available with single-digit megabytes, but many previous generation systems had under 100K of RAM. There were lots of programs out there still running in 64K. In terms of cpu power, nearly all existing systems were multi-user, and a less powerful, single-user, cpu beats sharing a more powerful cpu with 10-100 people.

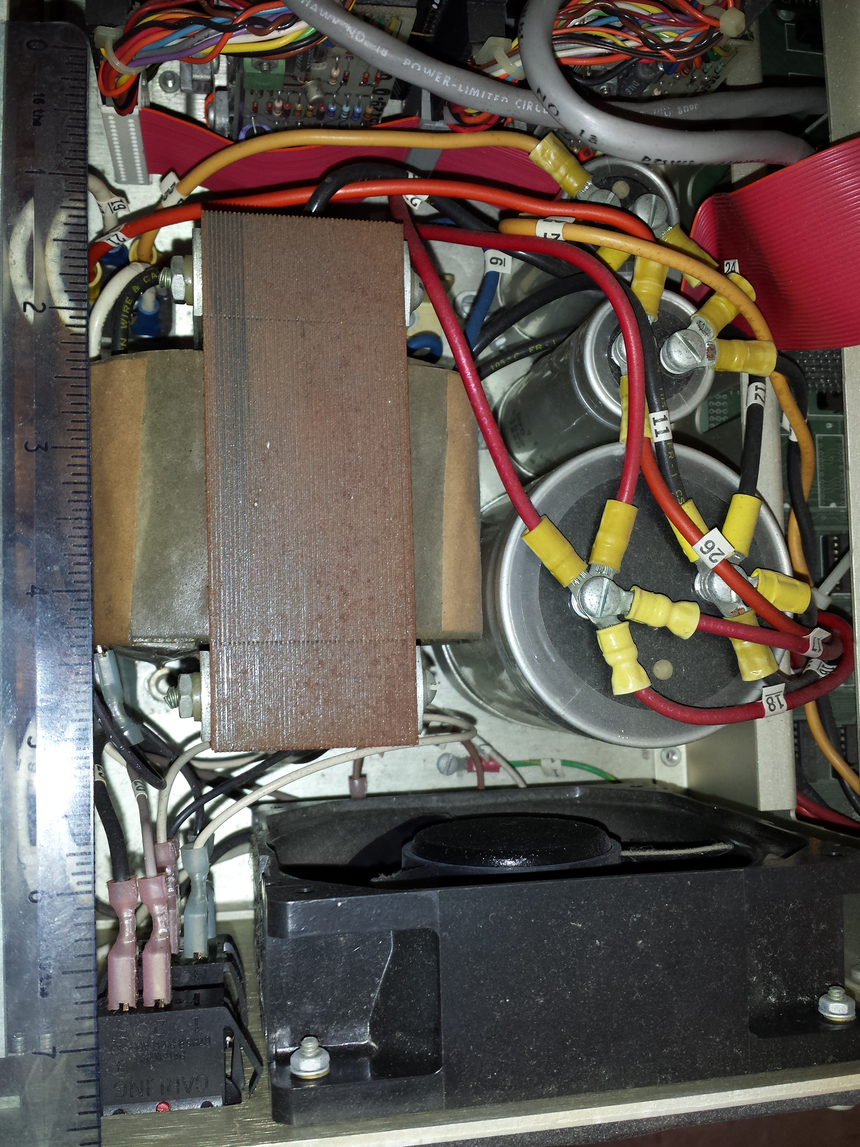

In terms of sheer weight, visual appearance and electrical clout, the Horizon power supply far exceeds those seen in today’s computers, which look tame by comparison (two of those capacitors are 4-inches tall):

My Horizon has been sitting in the garage for 32-years, and tucked away in unused rooms for years before that. The main problem with finding out whether it still works is finding a device to connect to the 25-pin serial port. I have an old PC with a 9-pin serial port, but I have spent enough of my life fiddling around with serial-port cables and Kermit to be content trying a simpler approach. I connect the power supply and switched it on. There was a loud crack and a flash on the disk-controller board; probably a tantalum capacitor giving up the ghost (easy enough to replace). The primary floppy drive did spin up and shutdown after some seconds (as expected), but the internal floppy engagement arm (probably not its real name) does not swing free when I open the bay door (so I cannot insert a floppy).

I am hoping to find a home for it in a computer museum, and have emailed the two closest museums. If these museums are not interested, the first person to knock on my door can take it away, along with manuals and floppies.

Update: This North Star can now be seen at the Retro Computer Museum.

First language taught to undergraduates in the 1990s

The average new graduate is likely to do more programming during the first month of a software engineering job, than they did during a year as an undergraduate. Programming courses for undergraduates is really about filtering out those who cannot code.

Long, long ago, when I had some connection to undergraduate hiring, around 70-80% of those interviewed for a programming job could not write a simple 10-20 line program; I’m told that this is still true today. Fluency in any language (computer or human) takes practice, and the typical undergraduate gets very little practice (there is no reason why they should, there are lots of activities on offer to students and programming fluency is not needed to get a degree).

There is lots of academic discussion around which language students should learn first, and what languages they should be exposed to. I have always been baffled by the idea that there was much to be gained by spending time teaching students multiple languages, when most of them barely grasp the primary course language. When I was at school the idea behind the trendy new maths curriculum was to teach concepts, rather than rote learning (such as algebra; yes, rote learning of the rules of algebra); the concept of number-base was considered to be a worthwhile concept and us kids were taught this concept by having the class convert values back and forth, such as base-10 numbers to base-5 (base-2 was rarely used in examples). Those of us who were good at maths instantly figured it out, while everybody else was completely confused (including some teachers).

My view is that there is no major teaching/learning impact on the choice of first language; it is all about academic fashion and marketing to students. Those who have the ability to program will just pick it up, and everybody else will flounder and do their best to stay away from it.

Richard Reid was interested in knowing which languages were being used to teach introductory programming to computer science and information systems majors. Starting in 1992, he contacted universities roughly twice a year, asking about the language(s) used to teach introductory programming. The Reid list (as it became known), was regularly updated until Reid retired in 1999 (the average number of universities included in the list was over 400); one of Reid’s ex-students, Frances VanScoy, took over until 2006.

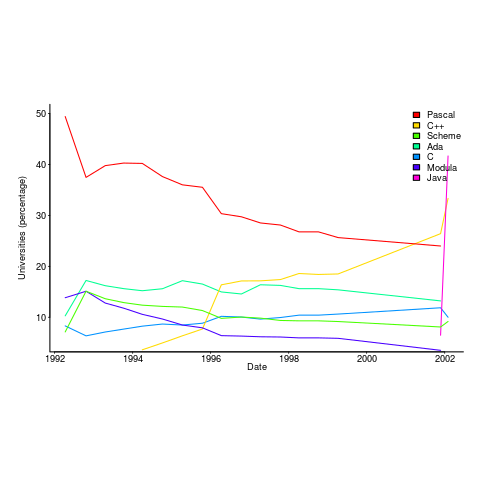

The plot below is from 1992 to 2002, and shows languages in the top with more than 3% usage in any year (code+data):

Looking at the list again reminded me how widespread Pascal was as a teaching language. Modula-2 was the language that Niklaus Wirth designed as the successor of Pascal, and Ada was intended to be the grown up Pascal.

While there is plenty of discussion about which language to teach first, doing this teaching is a low status activity (there is more fun to be had with the material taught to the final year students). One consequence is lack of any real incentive for spending time changing the course (e.g., using a new language). The Open University continued teaching Pascal for years, because material had been printed and had to be used up.

C++ took a while to take-off because of its association with C (which was very out of fashion in academia), and Java was still too new to risk exposing to impressionable first-years.

A count of the total number of languages listed, between 1992 and 2002, contains a few that might not be familiar to readers.

Ada Ada/Pascal Beta Blue C

1087 1 10 3 667

C/Java C/Scheme C++ C++/Pascal Eiffel

1 1 910 1 29

Fortran Haskell HyperTalk ISETL ISETL/C

133 12 2 30 1

Java Java/Haskell Miranda ML ML/Java

107 1 48 16 1

Modula-2 Modula-3 Oberon Oberon-2 ObjPascal

727 24 26 7 22

Orwell Pascal Pascal/C Prolog Scheme

12 2269 1 12 752

Scheme/ML Scheme/Turing Simula Smalltalk SML

1 1 14 33 88

Turing Visual-Basic

71 3 |

I had never heard of Orwell, a vanity language foisted on Oxford Mathematics and Computation students. It used to be common for someone in computing departments to foist their vanity language on students; it enabled them to claim the language was being used and stoked their ego. Is there some law that enables students to sue for damages?

The 1990s was still in the shadow of the 1980s fashion for functional programming (which came back into fashion a few years ago). Miranda was an attempt to commercialize a functional language compiler, with Haskell being an open source reaction.

I was surprised that Turing was so widely taught. More to do with the stature of where it came from (university of Toronto), than anything else.

Fortran was my first language, and is still widely used where high performance floating-point is required.

ISETL is a very interesting language from the 1960s that never really attracted much attention outside of New York. I suspect that Blue is BlueJ, a Java IDE targeting novices.

Writing language standards is a cottage industry

In the beginning programming language standards were written by one country’s National Standards body (e.g., ANSI did C/Cobol/Fortran for the USA and BSI did Pascal for the UK) and other countries were free to write their own version, adopt the existing work or do nothing (I don’t know of any country writing their own version, a few countries sometimes stuck their own front page on an existing document and the majority did nothing; update 4 Dec 2012, thanks to David Muxworthy for pointing out that around 1974 the UK, US, Japan and ECMA were all independently developing a standard for BASIC, by 1982 this had evolved to just ANSI and ECMA).

The UK people who created the Pascal Standard wanted the rest of the world (i.e., the US) to adopt it and the way to do this was to have it adopted as an ISO Standard. The experience of making this happen convinced the folk at BSI that in future language standards should be produced as an international effort within ISO (those pesky Americans wanted changes made to the document before they would vote for it).

During the creation of the first C Standard various people from Europe joined the ANSI committee, X3J11, so they could take part. Initially the US members were not receptive to the European request for a mechanism to handle keyboards that did not contain certain characters (e.g., left/right square brackets) but responded promptly on hearing that those (pesky) Europeans planned to publish an ISO C Standard that would contain those changes to the ANSI Standard needed to support trigraphs; the published ANSI Standard included support for trigraphs. The C ANSI committee were very receptive to the idea of future work being done at the ISO level; Bill Plauger/Tom Plum did a lot of good work to ensure it happened.

The C++ language came along and long story short an ISO committee was set up to create an ISO Standard for it, then Java came along and the Java Study Group failed to become an ISO committee and then various nonspecific language committees happened.

A look at the SC22 web site shows that ISO Standards exist for Forth and ECMAscript (it has not yet been updated to include Ruby) with no corresponding ISO committees. What is going on?

One could be cynical and say that special interests are getting a document of their choosing accepted by ECMA and then abusing the ISO fast track procedure to sidestep the need to setup an international committee that has the authority to create a document of its choosing. The reality is that unless a language is very widely used by lots of people (e.g., in the top five or so most commonly used languages) there are unlikely to be enough people (or employers) willing and able to commit the time and money needed to be actively involved in an ISO Standard committee.

Once a documented has been fast tracked to become ISO Standard any updates to it are supposed to be carried out under ISO rules (i.e., an ISO committee). In practice this is not happening with ECMAscript which continues to be very active (I don’t know what is happening with Forth or how the Ruby people plan to handle any updates), holding bi-monthly meetings; over the years they have fast tracked two revisions to the original fast tracked document (the UK did raise the issue during balloting but nothing came of it, I don’t think anybody really cares).

Would moving the ECMAscript development work from ECMA to ISO make a worthwhile difference? There might be a few people out there who would attend an ISO meeting who are not currently attending ECMA meetings (to join ECMA companies with five or less employees pay an annual fee of 3,500 Swiss francs {about the same number of US dollars} and larger companies pay a lot more) but I suspect the number would not be large enough to make up for the extra hassle of running an ISO committee (e.g., longer ISO balloting timescales).

Production of programming language standards is really a cottage industry that relies on friends in high places (e.g., companies with an existing membership of ECMA or connections into the local country standards’ body) for them to appear on the international stage.

What I know of Dennis Ritchie’s involvement with C

News that Dennis Ritchie died last weekend surfaced today. This private man was involved in many ground breaking developments; I know something about one of the languages he designed, C, so I will write about that. Ritchie has written about the development of C language.

Like many language designers the book he wrote “The C Programming Language” (coauthored with Brian Kernighan in 1978) was the definitive reference for users; universally known as K&R. The rapid growth in C’s popularity led to lots of compilers being written, exposing the multiple ways it was possible to interpret some of the wording in K&R.

In 1983 ANSI set up a committee, X3J11, to create a standard for C. With one exception Ritchie was happy to keep out of the fray of standardization; on only one occasion did he feel strongly enough to step in and express an opinion and the noalias keyword disappeared from the draft (the restrict keyword surfaced in C99 as a different kind of beast).

A major contribution to the success of the C Standard was the publication of an “ANSI Standard” version of K&R (there was a red “ANSI C” stamp on the front cover and the text made use of updated constructs like function prototypes and enumeration types), its second edition in 1988. Fans of the C Standard could, and did, claim that K&R C and ANSI C were the same language (anybody using the original K&R was clearly not keeping up with the times).

Ritchie publicly admitted to making one mistake in the design of C. He thinks that the precedence of the & and | binary operators should have been greater than the == operator. I can see his point, but an experiment I ran a few years ago suggested it is amount of experience using a set of operator precedence rules that is the primary contributor to developer knowledge of the subject.

Some language designers stick with their language, enhancing it over the years (e.g., Stroustrup with C++), while others move on to other languages (e.g., Wirth with Pascal, Modula-2 and Oberon). Ritchie had plenty of other interesting projects to spend his time on and took neither approach. As far as I can tell he made little or no direct contribution to C99. As head of the research department that created Plan 9 he must have had some input to the non-Standard features of their C compiler (e.g., no support for #if and support for unnamed structures).

While the modern C world may not be affected by his passing, his ability to find simple solutions to complicated problems will be a loss to the projects he was currently working on.

Memory capacity and commercial compiler development

When I started out in the compiler business in the 80s many commercial compilers were originally written by one person. A very good person who dedicated himself (I have never heard of a woman doing this) to the job (i.e., minimal social life) could produce a commercially viable product for a non-huge language (e.g., Fortran, Pascal, C, etc and not C++, Ada, etc) in 12-18 months. Companies who decide to develop a compiler in-house tend to use a team of people and take longer because that is how they work, and they don’t want to depend on one person and anyway such a person might not be available to them.

Commercially viable compiler development stayed within the realm of an individual effort until sometime in the early 90s. What put the single individual out of business was the growth in computer memory capacity into the hundreds of megabytes and beyond. Compilers have to be able to run within the limits of developer machines; you won’t sell many compilers that require 100M of memory if most of your customers don’t have machines with 100M+ of memory.

Code optimizers eat memory and this prevented many optimizations that had been known about for years appearing in commercial products. Once the machines used for software development commonly contained 100M+ of memory compiler vendors could start to incorporate these optimizations into their products.

Improvements in processor speed also helped. But developers are usually willing to let the compiler take a long time to optimize the code going into a final build, provided development compiles run at a reasonable speed.

The increase in memory capacity created the opportunity for compilers to improve and when some did improve they made it harder for others to compete. Suddenly an individual had to spend that 12-18 months, plus many more months implementing fancy optimizations; developing a commercially viable compiler outgrew the realm of individual effort.

Some people claim that the open source model was the primary driver in killing off commercial C compiler development. I disagree. My experience of licensing compiler source was that companies preferred a commercial model they were familiar with and reacted strongly against the idea of having to make available any changes they made to the code of an open source program. GCC (and recently llvm) succeeded because many talented people contributed fancy optimizations and these could be incorporated because developer machines contained lots of memory. If storage had not dramatically increased gcc would probably not be the market leader it is today.

Criteria for knowing a language

What does it mean for somebody to claim to know a computer language? In the commercial world it means the person is claiming to be capable of fluently (i.e., only using knowledge contained in their head and without having to unduly ponder) reading, and writing code in some generally accepted style applicable to that language. The academic world generally sets a much lower standard of competence (perhaps because most of its inhabitants leave before any significant expertise is acquired). If I had a penny for every recent graduate who claimed to know a language and was incapable of writing a program that read in a list of integers and printed their sum (I know companies that set tougher problems but they do not seem to have higher failure rates), I would be a rich man.

One experiment asked 21 postgraduate and academic staff which of the following individuals they would regard as knowing Java:

The results were:

_ NO YES

A 21 0

B 18 3

C 16 5

D 8 13

E 0 21

These answers reflect the environment from which the subjects were drawn. When I wrote compilers for a living I did not consider that anybody knew a language unless they had written a compiler for it, a point of view echoed by other compiler writers I knew.

I’m not sure that commercial developers would be happy with answer (E), in fact they could probably expand (E) into five separate questions that tested the degree to which a person was able to combine various elements of the language to create a meaningful whole. In the commercial world stage (E) is where people are expected to start.

The criteria used to decide whether somebody knows a language depends on which group of people you talk to; academics, professional developers and compiler writers each have their own in-group standards. In a sense the question is irrelevant, a small amount of language knowledge applied well can be used to do a reasonable job of creating a program for most applications.

Recent Comments