Archive

Compiler validation used to be a big thing

Compiler validation used to be a big thing; a NIST quarterly validated products list could run to nearly 150 pages and list nearly 1,000 products (not all were compilers).

Why did compiler validation stop being a thing?

Running a compiler validation service (NIST was also involved with POSIX, graphics, and computer security protocols validation) costs money. If there are enough people willing to pay (NIST charged for the validation service), the service pays for itself.

The 1990s was a period of consolidation: lots of computer manufacturers went out of business, and Micro Focus grew to dominate the Cobol compiler business. The number of companies willing to pay for validation fell below the number needed to maintain the service; the service was terminated in 1998.

The source code of the Cobol, Fortran and SQL + others tests that vendors had to pass (to appear for 12 months in the validated products list) is still available; the C validation suite costs money. But passing these tests, then paying NIST’s fee for somebody to turn up and watch the compiler pass the tests, no longer gets your product’s name in lights (or on the validated products list).

At the time, those involved lamented the demise of compiler validation. However, compiler validation was only needed because many vendors failed to implement parts of the language standard, or implemented them differently. In many ways, reducing the number of vendors is a more effective means of ensuring consistent compiler behavior. Compiler monoculture may spell doom for those in the compiler business (and language standards), but is desirable from the developers’ perspective.

How do we know whether today’s compilers implement the requirements contained in the corresponding ISO language standard? You could argue that this is a pointless question, i.e., gcc and llvm are the language standard; but let’s pretend this is not the case.

Fuzzing is good for testing code generation. Checking language semantics still requires expert human effort, and lots of it. People have to extract the requirements contained in the language specification, and write code that checks whether the required behavior is implemented. As far as I know, there are only commercial groups doing this, i.e., nothing in the open source world; pointers welcome.

The Ockham Sound Analysis Criteria

Yesterday a headline on a tool vendor blog caught my eye “… meets NIST high assurance standards”. What is this high assurance standard that I had not heard about before?

It turns out to be the Ockham Sound Analysis Criteria (which is not a standard, but is sort of connected to assurance at some level). I have been following the NIST group (now known as SAMATE) behind this work since their first meeting (the only one I have attended); their Static Analysis Tool Exposition (SATE) work is a great idea, but I imagine it has been an uphill battle convincing tool vendors to publicly expose the strengths and weaknesses of their tools.

Passing the Ockham Sound Analysis Criteria requires that a static analysis tool detect “…a minimum of 60% of buggy sites OR of non-buggy sites.”, with no false-positives (which I take to mean that no incorrect warnings are generated, i.e., the tool cannot incorrectly say “there is a fault here” or “there are no faults here”).
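My reading of the pass condition can be written down as a small check. This is only a sketch of that reading, not SAMATE's actual procedure; the function name `meets_ockham`, the dictionary layout and the site labels are all made up for illustration.

```python
def meets_ockham(truth, claims, threshold=0.6):
    """Return True if a tool's claims pass under the reading described above.

    truth:  dict mapping site -> True if the site is buggy, False if not
            (hypothetical ground truth for a test suite).
    claims: dict mapping site -> True ("there is a fault here") or
            False ("there are no faults here"); sites the tool says
            nothing about are simply absent.
    """
    # No incorrect claims allowed: one wrong verdict fails the tool.
    if any(truth[site] != verdict for site, verdict in claims.items()):
        return False

    buggy = [site for site, is_buggy in truth.items() if is_buggy]
    clean = [site for site, is_buggy in truth.items() if not is_buggy]

    buggy_found = sum(1 for site in buggy if claims.get(site) is True)
    clean_found = sum(1 for site in clean if claims.get(site) is False)

    # At least 60% of buggy sites OR at least 60% of non-buggy sites.
    buggy_ok = bool(buggy) and buggy_found / len(buggy) >= threshold
    clean_ok = bool(clean) and clean_found / len(clean) >= threshold
    return buggy_ok or clean_ok


truth = {"a": True, "b": True, "c": False, "d": False, "e": False}
print(meets_ockham(truth, {"c": False, "d": False}))             # 2 of 3 non-buggy sites, all correct
print(meets_ockham(truth, {"c": False, "d": False, "a": False})) # one wrong claim about site "a"
```

Note that a tool which stays silent about every site it is unsure of can still pass, provided the claims it does make are all correct and cover enough sites.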

The obvious low cost tool implementation is to pattern detect the known problems in the known test suite (called the Juliet test suite) and output warnings about them. The only way for SAMATE to stop companies doing this (or at least tuning their tools to pass the suite) is to regularly change the test cases used.

I think I understand the rationale behind the no false-positive requirement (SAMATE are using the marketing term “sound”). NIST want static analysis tools to be usable by people who don’t know anything about software; a strange idea I know, but the Nuclear Regulatory Commission have wanted to do this in the past.

Not being willing to accept false-positives kills innovation. New analysis techniques invariably start out being unreliable and improve over time.

I suspect that few vendors will have any interest in claiming to meet the Ockham Sound Analysis Criteria (apart from the ones that pattern match on the tests to satisfy some contract requirement). There is too much downside (new tests could put a vendor in the position of having to make a big investment just to continue to meet the criteria) for almost no upside (does anybody make purchase decisions based on these criteria?).

I think that the tool vendor (TrustInSoft) found they could make the claim and, being relatively new in the tools market, thought it might mean something to customers (I doubt it will, as their sales people are probably finding out). Of course what customers really want tool vendors to tell them is that their code does not contain any problems.

Trying to sell analysis tools to the Nuclear Regulatory Commission

Over the last few days there has been an interesting, and in places somewhat worrying, discussion going on in the Safety Critical mailing list about the US Nuclear Regulatory Commission. I thought I would tell my somewhat worrying story about dealing with the NRC.

In 1996 the NRC posted a request for information for a tool that I thought my company stood a reasonable chance of being able to meet (“NRC examines source code in nuclear power plant safety systems during the licensing process. NRC is interested in finding commercially available tools that can locate and provide information about the following programming practices…”). I responded, answered the questions on the form I received and was short listed to make a presentation to the NRC.

The presentation took place at the offices of the National Institute of Standards and Technology, the government agency helping out with the software expertise.

From our brief email exchanges I had guessed that nobody at the NRC/NIST end knew much about C or static analysis. A typical potential customer occurrence that I was familiar with handling.

Turned up, four or so people from NRC+one(?) from NIST, gave a brief overview and showed how the tool detected the constructs they were interested in, based on test cases I had written after reading their requirements (they had not written any but did give me some code that they happened to have, that was, well, code they happened to have; a typical potential customer occurrence that I was familiar with handling).

Why did the tool produce all those messages? Well, those are the constructs you want flagged. A typical potential customer occurrence that I was familiar with handling.

Does any information have to be given to the tool, such as where to find header files (I knew that they had already seen a presentation from another tool vendor, these managers who appeared to know nothing about software development had obviously picked up this question from that presentation)? Yes, but it is very easy to configure this information… A typical potential customer occurrence that I was familiar with handling.

I asked how they planned to use the tool and what I had to do to show them that this tool met their requirements.

We want one of our inspectors to be able to turn up at a reactor site and check their source code. The inspector should not need to know anything about software development and so the tool must be able to run automatically without any options being given and the output must be understandable to the inspector. Not a typical potential customer occurrence and I had no idea about how to handle it (I did notice that my mouth was open and had to make a conscious effort to keep it closed).

No, I would not get to see their final report and in fact I never heard from them again (did they find any tool vendor who did not stare at them in disbelief?)

The trip was not a complete waste of time; a few months earlier I had been at a Java study group meeting (an ISO project that ultimately failed to convince Sun to standardize Java through the ISO process) with some NIST folk who worked in the same building and I got to chat with them again.

A few hours later I realised that perhaps the question I should have asked was “What kind of software are people writing at nuclear facilities that needs an inspector to turn up and check?”

Abramowitz and Stegun mark II

Like me, I imagine many readers have owned a copy of Handbook of Mathematical Functions (or to use its better known name, “Abramowitz and Stegun”, after its two editors). Some time ago I heard that an updated handbook was being created; time passed and last year the “NIST Handbook of Mathematical Functions” was published. The companion web site has been slowly evolving over the years.

I did not hear anybody raving about the updated handbook and it was priced at more than twice that of the original (which was in the public domain and thus open to Dover to print a low cost edition {and others to make available online}; NIST are claiming copyright over the updated version, which is published by Cambridge University Press), so I did not rush out to buy a copy.

I recently placed a large order with Amazon US and was tempted by a temporary price reduction to buy the NIST handbook (tip for Europeans: it is often possible to make big savings by ordering from amazon.com, which seems to ship from Germany and arrives a few days later than orders placed with amazon.co.uk).

Summary recommendation:

- Should somebody who has the original handbook buy the update? Probably not.

- If somebody had a choice of either, which should they pick? I would go for the original handbook.

The major differences between the handbooks are that the substantial number of precomputed tables of values of functions are not included in the update and there are 12 new chapters covering subjects not included (or not given much prominence) in the original. A not so important difference is the switch from black&white to color in the update; this works well in the online version (on the CD shipped with the book) but works poorly in print form. If a book is intended to be printed, its color usage needs to be optimized for reflected light, which has different characteristics than the transmitted light of a display.

The argument for removing the tables of values is that software packages can now be used to obtain these. In practice I rarely use the tables of values for this purpose; I use the tables to find the range of function input values that will generate a given range of output values, or to see how output values change with changes in input values. For me omitting these tables in the update was a big mistake; ok, the number of significant digits could have been reduced (to say five) to save some paper. The new chapters often contain various tables of numbers, though they are not extensive, but a conscious decision seems to have been made to remove tables from existing chapters.
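For readers without the printed tables, the way I use them can be approximated in a few lines. This is a rough sketch, not a replacement for the handbook's tables; `tabulate` and `inputs_for_output_range` are names invented here, and the error function is just a convenient example.

```python
import math


def tabulate(func, start, stop, step_count, digits=5):
    """Build (x, f(x)) rows on an evenly spaced grid.

    Values are rounded to `digits` significant digits, mimicking a
    printed table with reduced precision.
    """
    rows = []
    for i in range(step_count + 1):
        x = start + (stop - start) * i / step_count
        rows.append((x, float(f"{func(x):.{digits}g}")))
    return rows


def inputs_for_output_range(rows, low, high):
    """Return (smallest x, largest x) among tabulated rows whose value
    lies in [low, high], or None if no row qualifies.

    For a non-monotonic function the rows in between may not all
    qualify; scanning the rows themselves shows that at a glance.
    """
    hits = [x for x, y in rows if low <= y <= high]
    return (min(hits), max(hits)) if hits else None


# erf tabulated from 0 to 2 in steps of 0.1.
table = tabulate(math.erf, 0.0, 2.0, 20)
for x, y in table:
    print(f"{x:4.1f}  {y}")
print(inputs_for_output_range(table, 0.5, 0.9))
```

Printing the whole table, rather than only the answer, is the point: running an eye down a column shows how quickly the output changes with the input.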

From a user interface point of view I don’t like the glossy paper used in the update, presumably caused by the switch to color which does not work well in the printed version; the angle of the page has to be constantly shifted to reduce glare from overhead lights and the handbook is noticeably heavier even though the page count is down by around 20% (886 vs 1030, excluding index which is substantially improved in the update).

The original has lots of tables, matte pages that don’t glare and is surprisingly light for such a big book. Time will tell whether I find the new chapters useful.

C compiler validation is 21 today!

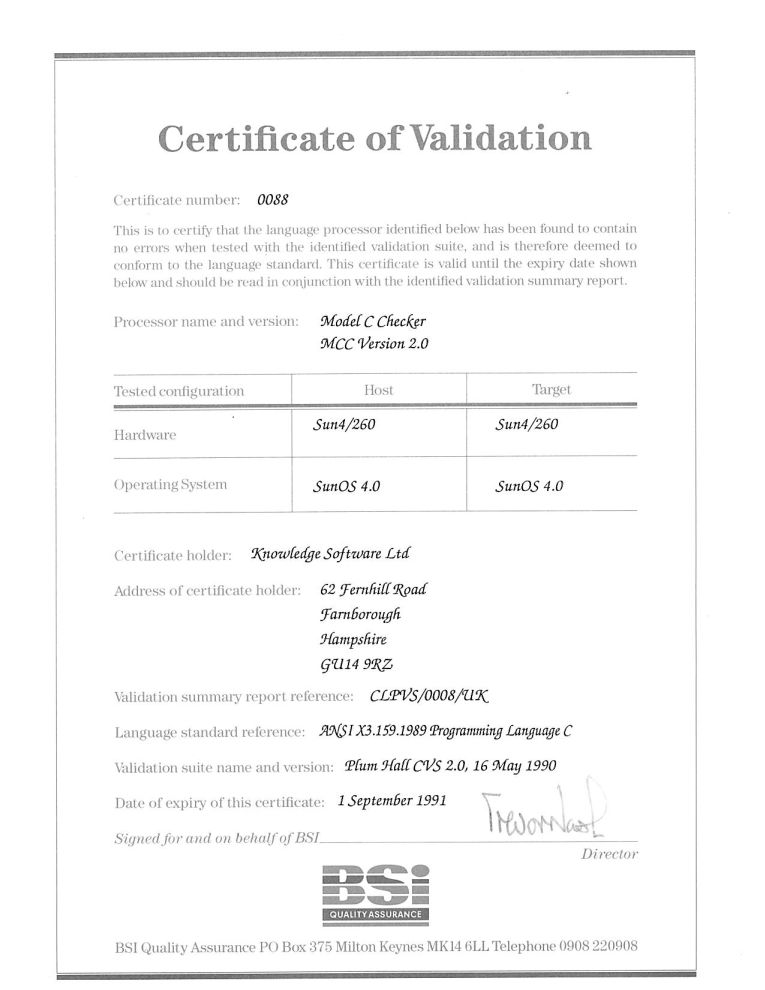

Today, 1 September 2011, is the 21st anniversary of the first formally validated C compilers. The three ‘equal first’ validated compilers were the Model Implementation C Checker from Knowledge Software, Topspeed C from JPI (run by the people who created Turbo Pascal) and the INMOS C compiler (derived from the Norcroft C compiler written by Alan Mycroft+others, the author of the longest response document seen during the review of the C89 draft standard).

Back in the day the British Standards Institution testing group run by John Souter were the world leaders in compiler validation and were very proactive in adding support for a new language. NIST, the equivalent US body, did not offer such a service until a few years later. Those companies in a position to have their compilers validated (i.e., the compiler passed the validation suite) were pressing BSI to be first; the ‘who is first’ issue was resolved by giving all certificates the same date (the actual validation process of a person from BSI, Neil Martin now Director of Test in the Winterop Team at Microsoft, turning up to ‘witness’ the compiler passing the tests happened several weeks earlier).

Testing C compilers was different from other language compilers in that sufficient demand existed to support commercial production and maintenance of test suites (the production of validation suites for previous language compilers had been government funded). After a review of the available test suites BSI chose to use the Plum Hall suite; after a similar review NIST chose to use the Perennial suite (I got involved in trying to figure out for NIST how well this suite covered the requirements contained in the C Standard).

For a while C compiler validation was big business (as in big fish, very small pond). But the compiler validation market is dependent on there being lots of compilers, which requires market fragmentation and to a lesser extent lots of different OSs and hardware platforms (each needing a separate validation). The 1990s saw market consolidation, gcc becoming good enough for commercial use and a shift of developer mind share to C++. Dwindling revenue resulted in BSI’s compiler validation group being shut down after a few years and NIST’s followed in 1998.

Is compiler validation relevant today? When the first C Standard was published a lot of compilers in common use had some significant behavioural differences compared to what the Standard specified. Over time these compilers have either disappeared or been upgraded (a potential customer once asked me the benefits I saw in them licensing the Knowledge Software front end and the reply to one of my responses, “you can tell your customers that the compiler is standards compliant”, was that this was not a benefit as they had been claiming this for years). Improvements in Intel’s x86 processor also had a hand in improving compiler conformance to the Standard; the various memory models used by the x86 processor were a huge headache for compiler writers, whose products often behaved very differently under different memory models; the arrival of the Pentium with its flat 32-bit address space meant this issue disappeared over time.

These days I suspect that the major compilers targeting platforms where portability is expected (portability is often not a big expectation in the embedded world) are sufficiently compatible that developers are willing to overlook small differences with the Standard. Differences in third party libraries, GUIs and other frameworks have been the big headache for many years now.

Would the ‘platform portability’ compilers, that’s probably gcc, Microsoft, products using EDG’s front end, and perhaps llvm in the coming years, pass the latest version of the Plum Hall and Perennial suites?

- The gcc team do not have access to either company’s suite. The gcc regression tests are a poor substitute for a proper compiler validation suite (even though they cost many thousands of dollars, commercial compiler writers often buy both companies’ products because they are good value for money as a testing resource {the Fortran 78 validation suite source gives some idea of how much work is actually involved}). I would expect gcc to fail some of the tests but have no idea how many or how serious the failures would be.

- Microsoft have said they don’t have plans to support C99 (it took a lot of prodding to get them interested in formally validating against C90).

- I think the llvm team are in the same position as gcc, but perhaps somebody at Apple has access to one or more of the commercial suites (I don’t know).

- EDG are into standards conformance and I would expect them to pass both suites.

The certificate is printed on high quality, slightly yellow paper; the template wording is in a subdued gray ink while the customer information is in a very bold black ink. I don’t know whether this is to make life difficult for counterfeiters, but I could not get any half decent photographs and the color scanner had to be switched to black&white.

Validation was good for one year and I saw no worthwhile benefit in paying BSI £5,000 to renew for another year. Few people knew about the one year rule and I did not enlighten them. In the Ada compiler market the one year rule was a major problem, but let’s leave that for another time.
