
Time taken to compile a source file

How long will it take to compile a source file?

When computers were a lot slower than they are today, this question was of general interest. Job scheduling is more effective when reliable runtime estimates are available, and developers want to know if there is enough time to get a coffee before the compile finishes.

An embarrassing fact about compile time performance used to be that a large percentage of compile time was spent doing lexical analysis [“The cost of lexical analysis”, I cannot find an online copy]. Why was this embarrassing? Compiler writers like to boast about all the fancy optimizations their compiler does, but doing fancy stuff consumes lots of resources, so why were compilers spending so much of their time doing simple things like lexical analysis? The reality was that fancy compiler optimizations were not commercially viable until developer computers contained tens of megabytes of memory, i.e., very few pre-1990 compilers did any real optimization (people are still fussing over lexer performance).

An analysis of the data in Captain Dennis Miller’s Master's thesis (late Rome period) finds that compile time is proportional to the square root of the number of tokens in the source (code+data); more complicated models are a slightly better fit. Where did square root come from? I expected a linear relationship, but would be willing to go with log. The measurements are from Ada compilers in the mid 1980s. I know several people who worked on Ada compilers during that time, and they were implementing the latest fancy optimizations (Ada was going to be the next big thing and the venture capital was flowing; big companies with big computers were going to pay lots of money to use Ada, but then microcomputers came along). I think that the square root is driven by OS resource limitations: the compilers are using lots of memory and a noticeable amount of time is spent swapping.
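One way to compare a square-root model with a linear one is to fit each as a one-parameter least-squares model with no intercept, then compare the residual sums of squares. The sketch below assumes that approach; it is not necessarily the analysis applied to Miller's data, and the function names `fit_no_intercept` and `rss_no_intercept` are hypothetical. The caller passes the already-transformed predictor, e.g. `sqrt(tokens[i])` for the square-root model or `tokens[i]` for the linear model.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper: least-squares slope for the no-intercept model
   y = a * g, where g[i] is the already-transformed predictor.
   Minimizing sum (y_i - a*g_i)^2 gives a = sum(g*y) / sum(g*g). */
double fit_no_intercept(const double *g, const double *y, size_t count)
{
    assert(count > 0);
    double gy = 0.0, gg = 0.0;
    for (size_t i = 0; i < count; i++) {
        gy += g[i] * y[i];
        gg += g[i] * g[i];
    }
    assert(gg > 0.0);
    return gy / gg;
}

/* Hypothetical helper: residual sum of squares of y = a * g.
   With one parameter per model, the smaller value marks the better fit. */
double rss_no_intercept(const double *g, const double *y, double a, size_t count)
{
    double sum = 0.0;
    for (size_t i = 0; i < count; i++) {
        double r = y[i] - a * g[i];
        sum += r * r;
    }
    return sum;
}
```

Because both models have a single parameter, comparing their residual sums of squares is a like-for-like test; a log model could be compared the same way by passing `log(tokens[i])` as the predictor.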

So computers got a lot faster and people lost interest in estimates of how long it would take to compile individual files. I have not seen any interest in predicting how long it would take to compile whole projects (just complaints about how long it takes). There has been some work on progress indicators, updated as compilation progresses, which is a step in the right direction. Perhaps somebody has recorded compile time information and thrown machine learning at it; I usually ignore machine learning papers applied to software engineering and perhaps I have missed something. Pointers to project compile time prediction work welcome.

Then along came just-in-time compilation. Now people want to estimate how long it will take to generate machine code from some intermediate form that is being interpreted.

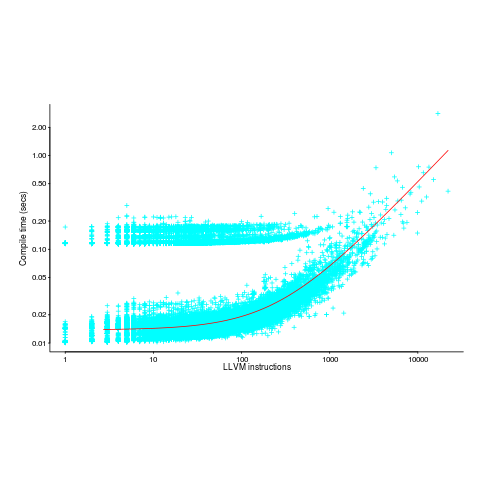

The plot below (thanks to Rafael Auler for kindly supplying the data from his paper) shows the time taken to generate code from functions containing a given number of LLVM instructions (an intermediate code), at optimization level O3. The red line is a regression fit to one of the ‘arms’ and shows constant time for less than 100’ish instructions and then a linear relationship. I have no idea why the time is roughly constant for a large number of functions.
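The shape described for the red line, flat up to a knee and then linear, is a broken-stick (hinge) model. A minimal sketch of that shape, assuming a hypothetical function `predict_codegen_time` whose parameters `base`, `knee` and `slope` stand in for whatever values a regression would estimate; only the 100'ish knee comes from the plot, and this is not the regression used to draw it:

```c
#include <assert.h>

/* Hypothetical broken-stick model of code generation time:
   constant at `base` below `knee` instructions, then rising by
   `slope` per additional instruction. Units follow the data. */
double predict_codegen_time(double n_instr, double base,
                            double knee, double slope)
{
    assert(knee > 0.0 && slope >= 0.0);
    if (n_instr < knee)
        return base;                        /* flat region */
    return base + slope * (n_instr - knee); /* linear region */
}
```

Measuring the linear part from the knee keeps the two pieces joined, so predicted time never jumps as a function grows past the knee.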

There is a lot of variation for functions containing the same number of instructions. This is to be expected when lots of different optimizations are being tried; sometimes a function will contain lots of the kind of code that a particular optimization spends a lot of time processing, and sometimes the code will not contain anything interesting (i.e., no optimizations are found).

Fibonacci and JIT compilers

To maximize a compiler’s ability to generate high quality code, many programming language standards do not specify the order in which the operands of a binary operator are evaluated. Most of the time the order of operand evaluation does not matter, because all orders produce the same final result. For instance, in x + y the value of x may be obtained first, followed by the value of y, and the two values added; alternatively, the value of y may be obtained first, followed by the value of x. Optimizers make use of local context information (e.g., holding one of the values in a register so it is immediately available for use in the evaluation of multiple instances of x) to work out which order produces the highest quality code.

If one of the operands is a function call that modifies the value of the other operand the result may depend on the order of evaluation. For instance, in:

```c
int x;

int set_x(void)
{
    x=1;
    return 1;
}

int two_values(void)
{
    x=0;
    return x + set_x();
}
```

A left-to-right evaluation order of the return expression in two_values produces the value 1, while a right-to-left evaluation order produces the value 2. Every now and again developers accidentally write code that does something like this, and are surprised to see program behavior change when they use different compiler options, a new version of the compiler or a different compiler (all things that can result in the order of evaluation changing for a given expression).
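Splitting the operands of the return expression in `two_values` into separate statements forces one order or the other, because each full statement is sequenced before the next. The sketch below uses a hypothetical helper `two_values_ordered`, whose flag selects the order, to reproduce both values deterministically:

```c
#include <assert.h>

int x;

int set_x(void) { x = 1; return 1; }

/* Hypothetical helper: evaluate x + set_x() in an explicit order.
   Each statement is sequenced before the next, so the result no
   longer depends on the compiler's choice of operand order. */
int two_values_ordered(int set_x_first)
{
    int lhs, rhs;

    x = 0;
    if (set_x_first) {
        rhs = set_x();   /* x becomes 1 before it is read */
        lhs = x;
    } else {
        lhs = x;         /* x is still 0 when read */
        rhs = set_x();
    }
    assert(x == 1);      /* set_x() has run in both orders */
    return lhs + rhs;    /* 0 + 1 or 1 + 1 */
}
```

Writing code this way is also the usual fix when the order matters: the program then has one well-defined result regardless of compiler, options or JIT.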

It is generally assumed that two calls to two_values within the same program will always produce the same answer, i.e., the decision on which order of evaluation to use is made at compile time and never changes while a program is running. Neither the C++ nor the C Standard requires that the evaluation order be fixed prior to program execution, and use of a just-in-time compiler could result in the value returned by two_values alternating between 1 and 2. Some languages (e.g., Java) for which JIT compilers are expected to be used specify the exact order in which operands have to be evaluated.

One possible way of finding out whether a JIT compiler is being used for your C/C++ program is to test the values returned by two calls to two_values. A JIT compiler may, but is not required to, return different values on each call. Of course, a decide-prior-to-execution C/C++ compiler does have the option of optimizing the following function to return true or false, on the basis that the standard permits this behavior, but I would be very surprised to see this occur in practice:

```c
bool Schrödinger(void)
{
    return two_values() == two_values();
}
```

The runtime variability offered by JIT compilers makes it possible to write a program whose output can be any value between 1 and $F_n$ (the $n$'th Fibonacci number, where $n$ is read from the input):

```c
#include <stdio.h>

int x;

int set_x(int ret_val) { x=1; return ret_val; }

/* Returns 1 or 2, depending on operand evaluation order */
int two_unspec(void) { x=0; return x + set_x(1); }

/* Returns val or val-1, depending on operand evaluation order */
int add_zero(int val) { x=0; return val - x + set_x(0); }

int fib(int fib_num)
{
    if (fib_num > 3)
        return fib(fib_num-2) + add_zero(fib(fib_num-1));
    else if (fib_num == 3)
        return two_unspec();
    else
        return 1;
}

int main(void)
{
    int n;
    if (scanf("%d", &n) != 1)
        return 1;
    printf("Fibonacci %d = %d\n", n, fib(n));
    return 0;
}
```

The C-semantics tool will ‘execute’ this program and produce a list of all the possible outputs (a PhD project under active development; the upper limit is currently around the 6th Fibonacci number, which requires 11 hours and produces a 50G log file).

A decide-prior-to-execution compiler has a maximum choice of four possible outputs for a given input value and for a given executable the output produced by different input values will be perfectly correlated.
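Both claims, the 1 to $F_n$ range and the four fixed-order outputs, can be checked by modelling the program directly rather than running a JIT compiler. The sketch below is an assumption-laden model, not the C-semantics tool: it represents a set of possible values as a 64-bit mask (so it only handles n ≤ 10, since F_11 = 89), lets every call to `two_unspec` and `add_zero` choose its own order in the per-call (JIT-like) case, and fixes one choice per expression in the decide-prior-to-execution case. All function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Set of possible values: bit v is set when v is a possible value.
   64 bits means values must stay <= 63, hence n <= 10 (F_10 = 55). */
typedef uint64_t valset;

#define BIT(v) ((valset)1 << (v))

/* { a + b : a in s, b in t }; the n <= 10 limit keeps every sum <= 63. */
static valset set_add(valset s, valset t)
{
    valset r = 0;
    for (int a = 0; a < 64; a++)
        if (s & BIT(a))
            r |= t << a;
    return r;
}

/* add_zero(v) returns v (x read first) or v-1 (set_x called first). */
static valset add_zero_set(valset s)
{
    return s | (s >> 1);
}

/* Per-call model (JIT-like): every call may pick its own order. */
static valset fib_outputs_per_call(int n)
{
    assert(n >= 1 && n <= 10);
    if (n > 3)
        return set_add(fib_outputs_per_call(n - 2),
                       add_zero_set(fib_outputs_per_call(n - 1)));
    if (n == 3)
        return BIT(1) | BIT(2);          /* two_unspec(): 1 or 2 */
    return BIT(1);
}

/* Fixed model: one order per expression, chosen before execution.
   two_unspec_val is 1 or 2; add_zero_sub is 0 or 1. */
static int fib_fixed(int n, int two_unspec_val, int add_zero_sub)
{
    if (n > 3)
        return fib_fixed(n - 2, two_unspec_val, add_zero_sub)
             + fib_fixed(n - 1, two_unspec_val, add_zero_sub) - add_zero_sub;
    if (n == 3)
        return two_unspec_val;
    return 1;
}

/* Union of the outputs of the four possible fixed-order compilations. */
static valset fib_outputs_fixed(int n)
{
    assert(n >= 1 && n <= 10);
    valset r = 0;
    for (int u = 1; u <= 2; u++)
        for (int s = 0; s <= 1; s++)
            r |= BIT(fib_fixed(n, u, s));
    return r;
}

static int count_values(valset s)
{
    int c = 0;
    for (; s != 0; s >>= 1)
        c += (int)(s & 1);
    return c;
}
```

For n = 10 the per-call model yields every value from 1 to 55, while the four fixed-order compilations yield only 1, 22, 34 and 55.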
