
Coding mistakes made by ChatGPT

The programs generated by OpenAI's chatbot ChatGPT, sometimes referred to as GPT-3, may have the requested behavior, but often contain mistakes that illustrate the fact that ChatGPT does not know anything about programming.

ChatGPT does not understand anything; it is a token prediction engine driven by the corpus used to train its language model. The likelihood of the output being correct depends on the quantity of text used during training (the more text used, the more likely it is to contain a relevant response). GPT-3, the language model behind ChatGPT, was trained on the deduplicated contents of the Internet (the language model has 175 billion parameters; the GPT-2 model contains 1.5 billion parameters). The practicality of its implementation is the result of various trade-offs, along with technical breakthroughs in language model building, e.g., transformers.

ChatGPT output appears to be that of a next generation search engine, one that returns answers rather than links to pages.

Readers who believe that any ‘understanding’ pixie dust is involved can disabuse themselves of this notion by reading an overview of GPT-3’s architecture, and how the transformer algorithm converts text to matrices of values.

If matrix algebra is not your thing, check out the 257 lines of Python that nanoGPT uses for training, and the 75 lines used to generate responses from a trained model.

As a prediction engine, ChatGPT sometimes gets things wrong. What kind of mistakes appear in ChatGPT-generated source code?

The exchanges below occurred with the ChatGPT Jan 9 version; the text typed by me appears in bold, and the ChatGPT output appears in the boxed text that follows.

Some of my questions were inspired by the list of undefined behaviors in the C Standard.

Write a program that adds apples to oranges.

I'm sorry, I don't understand what you mean by "adding apples to oranges." Can you please provide more information or context?

A suitable response can only be provided when an appropriate match is found in the trained model.

Write a regular expression that matches all prime numbers.

It is not possible to write a regular expression that can match all prime numbers, as the set of prime numbers is infinite and a regular expression is a finite representation of a pattern. Additionally, regular expressions are used to match strings of characters, while prime numbers are numerical values. It is possible to use a regex to match a number in a string but it's not capable of determining if the number is prime or not.

The training data may include the information that the request cannot be met.

Write a regular expression that matches all even numbers.

A regular expression that matches all even numbers is:

^(0|[2468]*[048]|[13579][26])$

Explanation:

^ matches the start of the string

(0|[2468]*[048]|[13579][26]) matches any of the following:

0

any number of even digits followed by a 0 or 8 (for example, 200, 2468000)

any odd digit followed by a 6 (for example, 1357926)

$ matches the end of the string

This regular expression will match strings that contain only even numbers, but not those that contain other characters.

The generated regular expression does not do what I asked, or what the last sentence from ChatGPT claims.
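The mismatch can be seen by running the generated pattern over a range of integers. The following is a minimal sketch in Python; the names `CHATGPT_EVEN` and `classify` are illustrative (they do not appear in the exchange), and it assumes Python's `re` module interprets the pattern the same way as whatever regex dialect the answer had in mind.

```python
import re

# The pattern exactly as it appears in the ChatGPT output above.
CHATGPT_EVEN = re.compile(r"^(0|[2468]*[048]|[13579][26])$")

def classify(limit):
    """Return (missed_evens, matched_odds) for the integers 0..limit-1."""
    missed_evens = []
    matched_odds = []
    for n in range(limit):
        matched = CHATGPT_EVEN.match(str(n)) is not None
        if n % 2 == 0 and not matched:
            missed_evens.append(n)
        elif n % 2 == 1 and matched:
            matched_odds.append(n)
    return missed_evens, matched_odds

missed, wrong = classify(100)
print(len(missed), missed[:5])  # even numbers the pattern rejects
print(wrong)                    # odd numbers the pattern accepts

# The worked examples from the explanation, checked against the pattern.
for example in ("200", "2468000", "1357926"):
    print(example, bool(CHATGPT_EVEN.match(example)))
```

Under these assumptions, the pattern accepts no odd numbers below 100, but it rejects half of the even ones (starting with 2, 6 and 10), and none of the three examples given in the explanation match.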

What is going on?

The tokens “Write a regular expression that matches” were enough for ChatGPT to figure out the task.

The tokens “all even numbers” were not specific enough for ChatGPT to focus on the appropriate training data. There is a random component to the selection of output, which will play a larger role when there are many possibilities.

The method used to tokenize/encode character sequences during training (i.e., byte-pairs), along with the relatively limited number of distinct tokens (i.e., 50,257), means that only one-/two-digit numbers are likely to be represented as discrete entities; longer digit sequences are treated as co-occurrences of one-/two-digit tokens. So it is not a surprise to see ChatGPT applying the co-occurrence characteristics of long digit sequences to their component subsequences.

Write a regular expression that matches all odd numbers.

A regular expression that matches all odd numbers is:

^(1|[13579]*[13579])$

Explanation:

^ matches the start of the string

(1|[13579]*[13579]) matches any of the following:

1

any number of odd digits followed by another odd digit (for example, 1357913579)

$ matches the end of the string

This regular expression will match strings that contain only odd numbers, but not those that contain other characters.

The output for odd numbers is more consistent, in that the regular expression does what the last sentence of the output claims, but not what I asked.
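A short check makes the gap concrete. This is a sketch in Python, assuming the `re` module's reading of the pattern; `CHATGPT_ODD` and `rejected` are illustrative names.

```python
import re

# The pattern exactly as it appears in the ChatGPT output above.
CHATGPT_ODD = re.compile(r"^(1|[13579]*[13579])$")

odd_numbers = [n for n in range(100) if n % 2 == 1]
rejected = [n for n in odd_numbers if not CHATGPT_ODD.match(str(n))]

print(len(rejected), rejected[:5])            # odd numbers the pattern misses
print(bool(CHATGPT_ODD.match("1357913579")))  # the example from the explanation
```

The pattern accepts only strings made up entirely of odd digits, so an odd number such as 21 is rejected because it contains an even digit.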

During a chat session, it is possible to provide more information (in human terminology; more character sequences, in ChatGPT terminology). The following prompt text, from me, has nothing to do with teaching ChatGPT to do maths; it provides tokens that enable a more focused match against the training data.

Even numbers are defined as divisible by two with no reminder. If a number is not even then it is odd.

You are correct, even numbers are defined as divisible by two with no remainder, and if a number is not even, it is considered odd. I apologize for any confusion caused by my previous statement. To match all even numbers, we can use the regular expression:

^([0-9]*[02468])$

To match all odd numbers, we can use the regular expression:

^([0-9]*[13579])$

Both of this regular expression will match numbers that are divisible by 2 and not divisible by 2 respectively.

Both generated regular expressions are correct.
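The claim can be checked exhaustively over a finite range. The sketch below is Python, with illustrative names, and assumes numbers are written as plain decimal digit strings without a sign.

```python
import re

# The two patterns from the second ChatGPT answer.
EVEN = re.compile(r"^([0-9]*[02468])$")
ODD = re.compile(r"^([0-9]*[13579])$")

def patterns_agree_with_arithmetic(limit):
    """True if both patterns classify every integer in 0..limit-1 correctly."""
    for n in range(limit):
        text = str(n)
        is_even = n % 2 == 0
        if bool(EVEN.match(text)) != is_even:
            return False
        if bool(ODD.match(text)) != (not is_even):
            return False
    return True

print(patterns_agree_with_arithmetic(10_000))
```

Both patterns only inspect the final digit, which is all that is needed to decide the parity of a decimal number.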

ChatGPT can learn to translate from an invented language to English (and presumably other languages).

Give an example of C source code assigning a character constant that contains an invalid multibyte character.

The following is an example of C source code assigning a character constant that contains an invalid multibyte character:

#include <stdio.h>

int main() {

char invalid_char = '\xF0\x90\x80\x80'; // This is an invalid multibyte character

printf("%c", invalid_char);

return 0;

}

In this example, the character constant '\xF0\x90\x80\x80' is assigned to the variable invalid_char. This particular sequence of bytes is not a valid Unicode character, and it will produce undefined behavior when attempting to print it.

There was a time when '\xF0\x90\x80\x80' was an invalid multibyte character.

Things changed around 20 years ago, but the training for the model used by ChatGPT does not tag information with valid-between dates.

ChatGPT searches for, and finds, an invalid multibyte character, and uses it to provide an answer.
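Whether a byte sequence is a valid multibyte character depends on the encoding in use. As a rough check, the sketch below (Python, assuming the encoding is UTF-8, which the exchange does not state) decodes the four bytes from the generated example, and contrasts them with a byte that can never appear in UTF-8.

```python
# The four bytes from the generated character constant.
candidate = bytes([0xF0, 0x90, 0x80, 0x80])

# A strict decode raises UnicodeDecodeError for an invalid sequence.
decoded = candidate.decode("utf-8")
print(len(decoded), hex(ord(decoded)))

# 0xFF is not a legal byte anywhere in a UTF-8 sequence.
try:
    bytes([0xFF]).decode("utf-8")
    ff_is_valid = True
except UnicodeDecodeError:
    ff_is_valid = False
print(ff_is_valid)
```

Under the UTF-8 assumption, the four bytes decode to a single character with code point 0x10000.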

Give an example of C source code where an object is referred to outside of its lifetime.

The following is an example of C source code where an object is referred to outside of its lifetime:

#include <stdio.h>

int main() {

int x;

{

int x = 5;

}

printf("x: %d\n", x);

return 0;

}

In this example, the variable x is defined twice in the same function main. The first definition int x; is a global variable that has a lifetime throughout the entire program execution. The second definition int x = 5; is a local variable that has a lifetime that is limited to the inner block {...} it is defined in.

After the inner block is executed and the inner variable x goes out of scope, the program attempts to access the inner variable x by using the global variable x in the printf statement, which refers to an object (the inner variable x) that is no longer in its lifetime. This behavior is undefined according to the C standard and can lead to unexpected results.

The generated code is incorrect. The human interpretation of this code is that ChatGPT has confused the name of an object (the C Standard's term for what is commonly called a variable) with the storage it represents. There are two different objects defined, both having the name x (beginners often make the same mistake).

A common example of the requested behavior is freeing the dynamic storage pointed to by some pointer, and then accessing that storage.

Write a C program containing a conversion of an integer type that produces a value outside the range that can be represented in the integer type.

Here is an example of a C program that demonstrates the conversion of an integer type that produces a value outside the range that can be represented in the integer type:

#include <stdio.h>

int main() {

int x = 2147483647; // largest value representable in a signed 32-bit integer

unsigned int y = (unsigned int) x + 1; // overflow occurs here

printf("%u\n", y);

return 0;

}

In this program, the value of x is initialized to the largest value that can be represented by a signed 32-bit integer (2147483647). Then, x is cast to an unsigned 32-bit integer and 1 is added to it. Since the result of this operation is larger than the largest representable value in an unsigned 32-bit integer, an overflow occurs and the value of y becomes 0.

This program will produce an output of 0.

It is worth mentioning that undefined behavior (UB) can occur when an integer overflow happens, so this should be avoided in production code.

The detailed explanation makes the output look authoritative. However, it is wrong.

There is no overflow in this code.

The integer type ‘unsigned int’ supports a larger range of positive values, which means that after conversion, adding one will not produce a value that is outside the range that can be represented.

You are correct, I apologize for the confusion. Unsigned integers have a larger range of positive values and do not have negative values. When an operation like an addition exceeds the maximum value that can be represented by an unsigned integer, it wraps around to the minimum value representable by that type, which is typically zero, instead of an overflow.

For example, if you use an unsigned int and the maximum value it can represent is 4,294,967,295, if you add 1 to that value, it will wrap around to 0.

Here is an example of a C program that demonstrates the conversion of an integer type that produces a value outside the range that can be represented in the integer type:

#include <stdio.h>

int main() {

int x = -2147483648; // lowest value representable in a signed 32-bit integer

unsigned int y = (unsigned int) x -1; // wrap around occurs here

printf("%u\n", y);

return 0;

}

In this program, the value of x is initialized to the lowest value that can be represented by a signed 32-bit integer (-2147483648) which is lower than 0. Then, x is cast to an unsigned 32-bit integer and 1 is subtracted from it. Since the result of this operation is lower than 0, the value wraps around to the maximum representable value in an unsigned 32-bit integer (4,294,967,295) . This program will produce an output of 4,294,967,295.

It is worth mentioning that this wrapping around behavior is defined for unsigned integers in C and C++, but it is not always the case in other programming languages.

This output contained the requested behavior, although subtracting one is unnecessary code.
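The arithmetic in the two generated programs can be worked through by hand. The sketch below models it in Python rather than C, under the assumption that `int` and `unsigned int` are 32 bits wide (as the generated comments assume); `to_unsigned` is an illustrative helper that applies the C rule for converting a value to an unsigned type, i.e., reduction modulo 2^32.

```python
UINT_BITS = 32  # assumption: 32-bit unsigned int, as in the generated comments

def to_unsigned(value, bits=UINT_BITS):
    """Model conversion to an unsigned type: reduce the value modulo 2**bits."""
    return value % (1 << bits)

# First program: (unsigned int) x + 1, with x = 2147483647.
first = to_unsigned(to_unsigned(2147483647) + 1)

# Second program: (unsigned int) x - 1, with x = -2147483648.
# The cast binds tighter than the subtraction, so the conversion happens first.
second = to_unsigned(to_unsigned(-2147483648) - 1)

print(first)
print(second)
```

Under this model the first program prints 2147483648 and the second prints 2147483647, neither of which is the value given in the accompanying explanation (0 and 4,294,967,295 respectively). In the second program the out-of-range value arises in the conversion itself, which is why the subtraction adds nothing.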

ChatGPT can also be used to create documentation 😉

Write a poem in Iambic pentameter about the benefit of an evidence based approach to software engineering in the style of a party political broadcast.

With evidence-based approach, our work shall shine,

For in software engineering, it's the only way to dine.

We'll test and measure, as we go along,

To make our code robust, and errors gone.

Our methodologies, backed by data and proof,

Will bring success and progress, that's the truth.

We'll avoid the pitfalls, of guesses and guesses,

And build systems, that pass all tests.

We'll listen to the data, and the users' voice,

To make our software, the best by choice.

We'll iterate and improve, with every release,

And build a product, that truly will please.

So join us now, and embrace the way,

Of evidence-based approach, for brighter day.

With data and research, we'll conquer all,

In software engineering, we'll never fall.

The software heritage of K&R C

The mission statement of the Software Heritage is “… to collect, preserve, and share all software that is publicly available in source code form.”

What are the uses of the preserved source code that is collected? Lots of people visit preserved buildings, but very few people are interested in looking at source code.

One use-case is tracking the evolution of changes in developer usage of various programming language constructs. It is possible to use GitHub to track the adoption of language features introduced after 2008, when the company was founded, e.g., new language constructs in Java. Over longer time-scales, the Software Heritage, which has source code going back to the 1960s, is the only option.

One question that keeps cropping up when discussing the C Standard is whether K&R C continues to be used. Technically, K&R C is the language defined by the book that introduced C to the world. Over time, differences between K&R C and the C Standard have fallen away, as compilers cease supporting particular K&R ways of doing things (as an option or otherwise).

These days, saying that code uses K&R C is taken to mean that it contains functions defined using the K&R style (see sentence 1818), e.g.,

writing:

int f(a, b) int a; float b; { /* declarations and statements */ }

rather than:

int f(int a, float b) { /* declarations and statements */ }

As well as the syntactic differences, there are semantic differences between the two styles of function definition, but these are not relevant here.

How much longer should the C Standard continue to support the K&R style of function definition?

The WG14 committee prides itself on not breaking existing code, or at least not lots of it. How much code is out there, being actively maintained, and containing K&R function definitions?

Members of the committee agree that they rarely encounter this K&R usage, and it would be useful to have some idea of the decline in use over time (with the intent of removing support in some future revision of the standard).

One way to estimate the evolution in the use/non-use of K&R style function definitions is to analyse the C source created in each year since the late 1970s.
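As an illustration of what such an analysis might involve, here is a very rough sketch in Python that counts K&R-style definitions in a string of C source. Everything in it is hypothetical: the `KNR_DEFINITION` pattern and the `count_knr_definitions` helper are a heuristic of my own for this example, not a parser and not a method used by anybody mentioned here. It ignores comments, string literals, macros and function-pointer parameters, misses definitions with an empty parameter list, and can be fooled by some statement sequences.

```python
import re

# Heuristic only: a name, a parenthesised list of bare parameter names,
# then one or more declarations before the opening brace of the body.
KNR_DEFINITION = re.compile(
    r"""
    \b(?!(?:if|while|for|switch|return|sizeof)\b)  # skip common keywords
    [A-Za-z_]\w*                                   # function name
    \s*\(\s*
    [A-Za-z_]\w*(?:\s*,\s*[A-Za-z_]\w*)*           # names only, no types
    \s*\)\s*
    (?:[^;{}()]+;\s*)+                             # parameter declarations
    \{
    """,
    re.VERBOSE,
)

def count_knr_definitions(source):
    """Count text spans that look like K&R-style function definitions."""
    return sum(1 for _ in KNR_DEFINITION.finditer(source))

knr_style = "int f(a, b) int a; float b; { /* declarations and statements */ }"
prototype_style = "int f(int a, float b) { /* declarations and statements */ }"

print(count_knr_definitions(knr_style))
print(count_knr_definitions(prototype_style))
```

Applied to source files grouped by creation year, counts like these would give a first approximation of the trend; a serious measurement would need a real C front end.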

The question is then: How representative is the Software Heritage C source, compared to all the C source currently being actively maintained?

The Software Heritage preserves publicly available source; this, plus the non-public, proprietary source, forms the totality of the C currently being maintained. Do the public and non-public C source have similar characteristics, or are there application domains which are poorly represented in the publicly available source?

Embedded systems is a very large and broad application domain that is poorly represented in the publicly available C source. Embedded source tends to be heavily tied to the hardware on which it runs, and vendors tend to be paranoid about releasing internal details about their products.

The various embedded systems domains (e.g., 8, 16, 32, 64-bit processor) tend to be worlds unto themselves, and I would not be surprised to find out that there are enclaves of K&R usage (perhaps because there is no pressure to change, or because the available tools are ancient).

At the moment, the Software Heritage does not offer code search functionality. But then, the next opportunity for major changes to the C Standard is probably five years away (the deadline for new proposals on the current revision has passed); plenty of time to get to a position where usage data can be obtained 🙂

Benford’s law and numeric literals in source code

Benford’s law applies to values derived from a surprising number of natural and man-made processes. I was very optimistic that it would also apply to numeric literals in source code. Measurements of C source showed that I was wrong (the chi-square fit was 1,680 for decimal integer literals and 132,398 for floating literals).

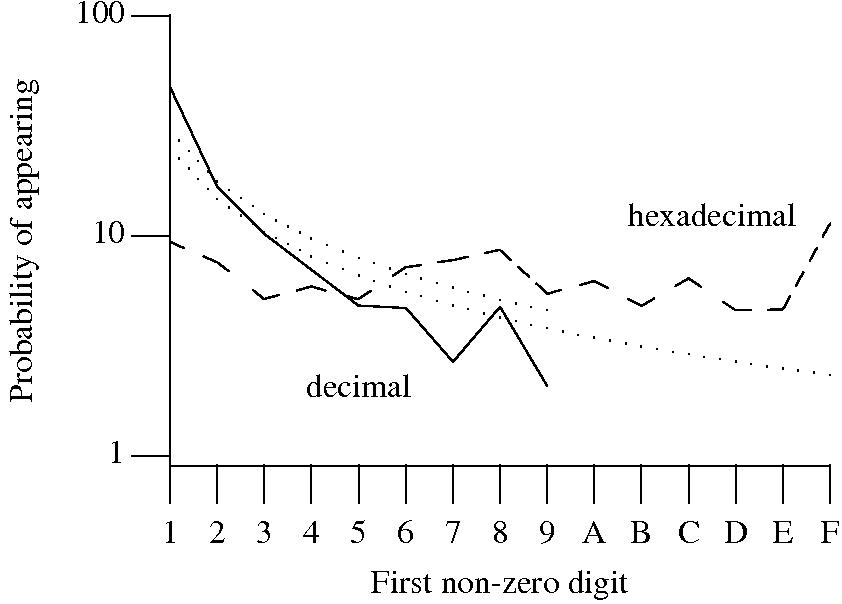

Probability that the leading digit of a (decimal or hexadecimal) integer literal has a particular value (dotted lines predicted by Benford’s law).
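For readers who want to try this on their own code, the following Python sketch computes the leading-digit probabilities predicted by Benford’s law, log10(1 + 1/d), and a Pearson chi-square statistic for a list of literals. The function names and the sample list are illustrative, the sketch handles decimal literals only, and it is an assumption that the statistic matches the one used for the figures quoted above.

```python
import math
from collections import Counter

def benford_probability(digit):
    """Probability of a leading digit (1..9) predicted by Benford's law."""
    return math.log10(1 + 1 / digit)

def leading_digit(literal):
    """First non-zero decimal digit of a literal's text, or None if absent."""
    for ch in literal:
        if ch in "123456789":
            return int(ch)
    return None

def chi_square(literals):
    """Pearson chi-square of observed leading digits against Benford's law."""
    counts = Counter(leading_digit(lit) for lit in literals)
    counts.pop(None, None)  # literals such as "0" have no leading digit
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no literal has a non-zero digit")
    statistic = 0.0
    for digit in range(1, 10):
        expected = total * benford_probability(digit)
        statistic += (counts[digit] - expected) ** 2 / expected
    return statistic

sample = ["1", "10", "100", "2", "0.5", "64", "1024", "3", "0"]  # made-up data
print([round(benford_probability(d), 3) for d in range(1, 10)])
print(chi_square(sample))
```

A larger statistic means a worse fit; with only a handful of literals, as in the made-up sample, the value says very little.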

What are the conditions necessary for a sample of values to follow Benford’s law? A number of circumstances have been found to result in sample values having a leading digit that follows Benford’s law.

Samples that have been found to follow Benford’s law include lists of physical constants and accounting data (so much so that it has been used to detect accounting fraud). However, the number of data-sets containing values whose leading digit follows Benford’s law is not as great as some would make us believe.

Why don’t the leading digits of numeric literals in source code follow Benford’s law?

++/-- operators reduces the number of instances of 1 to increment/decrement a value). But this only applies to integer types, not floating types

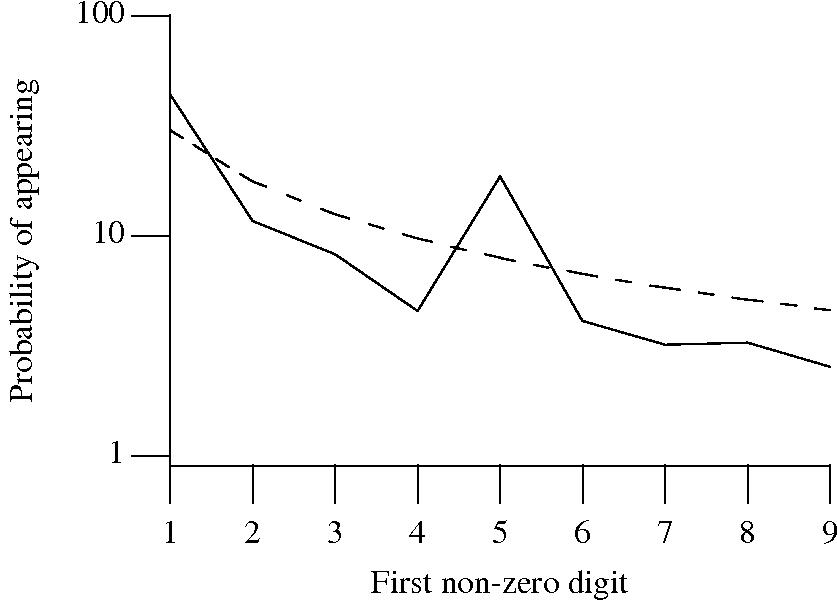

Probability that the leading, first non-zero, digit of a floating literal has a particular value (dashed line predicted by Benford’s law).

5 for the floating-point literals? Have values been rounded to produce 0.5? This looks like an area where methods used for accounting fraud detection might be applied (not that any fraud is implied, just irregularity). These surprising measurements show that there is a lot to the shape of numeric literals that is yet to be discovered.
