Archive

2021 in the programming language standards’ world

Last Tuesday I was on a Webex call (the British Standards Institute’s use of Webex for conference calls predates COVID 19) for a meeting of IST/5, the committee responsible for programming language standards in the UK.

There have been two developments whose effect, I think, will be to hasten the decline of the relevance of ISO standards in the programming language world (to the point that they are ignored by compiler vendors).

- People have been talking about switching to online meetings for years, and every now and again someone has dialed-in to the conference call phone system provided by conference organizers. COVID has made online meetings the norm (language working groups have replaced face-to-face meetings with online meetings). People are looking forward to having face-to-face meetings again, but there is talk of online attendance playing a much larger role in the future.

The cost of attending a meeting in person is the perennial reason given for people not playing an active role in language standards (and I imagine other standards). Online attendance significantly reduces the cost, and an increase in the number of people ‘attending’ meetings is to be expected if committees agree to significant online attendance.

While many people think that making it possible for more people to be involved, by reducing the cost, is a good idea, I think it is a bad idea. The rationale for the creation of standards is economic; customer costs are reduced by reducing

diversityincompatibilities across the same kind of product., e.g., all standard conforming compilers are consistent in their handling of the same construct (undefined behavior may be consistently different). When attending meetings is costly, those with a significant economic interest tend to form the bulk of those attending meetings. Every now and again somebody turns up for a drive-by-shooting, i.e., they turn up for a day to present a paper on their pet issue and are never seen again.Lowering the barrier to entry (i.e., cost) is going to increase the number of drive-by shootings. The cost of this spray of pet-issue papers falls on the regular attendees, who will have to spend time dealing with enthusiastic, single issue, newbies,

- The International Organization for Standardization (ISO is the abbreviation of the French title) has embraced the use of inclusive terminology. The ISO directives specifying the Principles and rules for the structure and drafting of ISO and IEC documents, have been updated by the addition of a new clause: 8.6 Inclusive terminology, which says:

“Whenever possible, inclusive terminology shall be used to describe technical capabilities and relationships. Insensitive, archaic and non-inclusive terms shall be avoided. For the purposes of this principle, “inclusive terminology” means terminology perceived or likely to be perceived as welcoming by everyone, regardless of their sex, gender, race, colour, religion, etc.

New documents shall be developed using inclusive terminology. As feasible, existing and legacy documents shall be updated to identify and replace non-inclusive terms with alternatives that are more descriptive and tailored to the technical capability or relationship.”

The US Standards body, has released the document INCITS inclusive terminology guidelines. Section 5 covers identifying negative terms, and Section 6 deals with “Migration from terms with negative connotations”. Annex A provides examples of terms with negative connotations, preceded by text in bright red “CONTENT WARNING: The following list contains material that may be harmful or

traumatizing to some audiences.”“Error” sounds like a very negative word to me, but it’s not in the annex. One of the words listed in the annex is “dummy”. One member pointed out that ‘dummy’ appears 794 times in the current Fortran standard, (586 times in ‘dummy argument’).

Replacing words with negative connotations leads to frustration and distorted perceptions of what is being communicated.

I think there will be zero real world impact from the use of inclusive terminology in ISO standards, for the simple reason that terminology in ISO standards usually has zero real world impact (based on my experience of the use of terminology in ISO language standards). But the use of inclusive terminology does provide a new opportunity for virtue signalling by members of standards’ committees.

While use of inclusive terminology in ISO standards is unlikely to have any real world impact, the need to deal with suggested changes of terminology, and new terminology, will consume committee time. Most committee members tend to a rather pragmatic, but it only takes one or two people to keep a discussion going and going.

Over time, compiler vendors are going to become disenchanted with the increased workload, and the endless discussions relating to pet-issues and inclusive terminology. Given that there are so few industrial strength compilers for any language, the world no longer needs formally agreed language standards; the behavior that implementations have to support is controlled by the huge volume of existing code. Eventually, compiler vendors will sever the cord to the ISO standards process, and outside of the SC22 bubble nobody will notice.

2019 in the programming language standards’ world

Last Tuesday I was at the British Standards Institute for a meeting of IST/5, the committee responsible for programming language standards in the UK.

There has been progress on a few issues discussed last year, and one interesting point came up.

It is starting to look as if there might be another iteration of the Cobol Standard. A handful of people, in various countries, have started to nibble around the edges of various new (in the Cobol sense) features. No, the INCITS Cobol committee (the people who used to do all the heavy lifting) has not been reformed; the work now appears to be driven by people who cannot let go of their involvement in Cobol standards.

ISO/IEC 23360-1:2006, the ISO version of the Linux Base Standard, has been updated and we were asked for a UK position on the document being published. Abstain seemed to be the only sensible option.

Our WG20 representative reported that the ongoing debate over pile of poo emoji has crossed the chasm (he did not exactly phrase it like that). Vendors want to have the freedom to specify code-points for use with their own emoji, e.g., pineapple emoji. The heady days, of a few short years ago, when an encoding for all the world’s character symbols seemed possible, have become a distant memory (the number of unhandled logographs on ancient pots and clay tablets was declining rapidly). Who could have predicted that the dream of a complete encoding of the symbols used by all the world’s languages would be dashed by pile of poo emoji?

The interesting news is from WG9. The document intended to become the Ada20 standard was due to enter the voting process in June, i.e., the committee considered it done. At the end of April the main Ada compiler vendor asked for the schedule to be slipped by a year or two, to enable them to get some implementation experience with the new features; oops. I have been predicting that in the future language ‘standards’ will be decided by the main compiler vendors, and the future is finally starting to arrive. What is the incentive for the GNAT compiler people to pay any attention to proposals written by a bunch of non-customers (ok, some of them might work for customers)? One answer is that Ada users tend to be large bureaucratic organizations (e.g., the DOD), who like to follow standards, and might fund GNAT to implement the new document (perhaps this delay by GNAT is all about funding, or lack thereof).

Right on cue, C++ users have started to notice that C++20’s added support for a system header with the name version, which conflicts with much existing practice of using a file called version to contain versioning information; a problem if the header search path used the compiler includes a project’s top-level directory (which is where the versioning file version often sits). So the WG21 committee decides on what it thinks is a good idea, implementors implement it, and users complain; implementors now have a good reason to not follow a requirement in the standard, to keep users happy. Will WG21 be apologetic, or get all high and mighty; we will have to wait and see.

2018 in the programming language standards’ world

I am sitting in the room, at the British Standards Institution, where today’s meeting of IST/5, the committee responsible for programming languages, has just adjourned (it’s close to where I have to be in a few hours).

BSI have downsized us, they no longer provide a committee secretary to take minutes and provide a point of contact. Somebody from a service pool responds (or not) to emails. I did not blink first to our chair’s request for somebody to take the minutes 🙂

What interesting things came up?

It transpires that reports of the death of Cobol standards work may be premature. There are a few people working on ‘new’ features, e.g., support for JSON. This work is happening at the ISO level, rather than the national level in the US (where the real work on the Cobol standard used to be done, before being handed on to the ISO). Is this just a couple of people pushing a few pet ideas or will it turn into something more substantial? We will have to wait and see.

The Unicode consortium (a vendor consortium) are continuing to propose new pile of poo emoji and WG20 (an ISO committee) were doing what they can to stay sane.

Work on the Prolog standard, now seems to be concentrated in Austria. Prolog was the language to be associated with, if you were on the 1980s AI bandwagon (and the Japanese were going to take over the world unless we did something about it, e.g., spend money); this time around, it’s machine learning. With one dominant open source implementation and one commercial vendor (cannot think of any others), standards work is a relic of past glories.

In pre-internet times there was an incentive to kill off committees that were past their sell-by date; it cost money to send out mailings and document storage occupied shelf space. In an electronic world there is no incentive to spend time killing off such committees, might as well wait until those involved retire or die.

WG23 (programming language vulnerabilities) reported lots of interest in their work from people involved in the C++ standard, and for some reason the C++ committee people in the room started glancing at me. I was a good boy, and did not mention bored consultants.

It looks like ISO/IEC 23360-1:2006, the ISO version of the Linux Base Standard is going to be updated to reflect LBS 5.0; something that was not certain few years ago.

2017 in the programming language standards’ world

Yesterday I was at the British Standards Institution for a meeting of IST/5, the committee responsible for programming languages.

The amount of management control over those wanting to get to the meeting room, from outside the building, has increased. There is now a sensor activated sliding door between the car-park and side-walk from the rear of the building to the front, and there are now two receptions; the ground floor reception gets visitors a pass to the first floor, where a pass to the fifth floor is obtained from another reception (I was totally confused by being told to go to the first floor, which housed the canteen last time I was there, and still does, the second reception is perched just inside the automatic barriers to the canteen {these barriers are also new; the food is reasonable, but not free}).

Visitors are supposed to show proof that they are attending a meeting, such as a meeting calling notice or an agenda. I have always managed to look sufficiently important/knowledgeable/harmless to get in without showing any such documents. I was asked to show them this time, perhaps my image is slipping, but my obvious bafflement at the new setup rescued me.

Why does BSI do this? My theory is that it’s all about image, BSI is the UK’s standard setting body and as such has to be seen to follow these standards. There is probably some security standard for rules to follow to prevent people sneaking into buildings. It could be argued that the name British Standards is enough to put anybody off wanting to enter the building in the first place, but this does not sound like a good rationale for BSI to give. Instead, we have lots of sliding doors/gates, multiple receptions (I suspect this has more to do with a building management cat fight over reception costs), lifts with no buttons ‘inside’ for selecting floors, and proof of reasons to be in the building.

There are also new chairs in the open spaces. The chairs have very high backs and side-baffles that surround the head area, excellent for having secret conversations and in-tune with all the security. These open areas are an image of what people in the 1970s thought the future would look like (BSI is a traditional organization after all).

So what happened in the meeting?

Cobol standard’s work becomes even more dead. PL22.4, the US Cobol group is no more (there were insufficient people willing to pay membership fees, so the group was closed down).

People are continuing to work on Fortran (still the language of choice for supercomputer Apps), Ada (some new people have started attending meetings and support for @ is still being fought over), C, Internationalization (all about character sets these days). Unprompted somebody pointed out that the UK C++ panel seemed to be attracting lots of people from the financial industry (I was very professional and did not relay my theory that it’s all about bored consultants wanting an outlet for their creative urges).

SC22, the ISO committee responsible for programming languages, is meeting at BSI next month, and our chairman asked if any of us planned to attend. The chair’s response, to my request to sell the meeting to us, was that his vocabulary was not up to the task; a two-day management meeting (no technical discussions permitted at this level) on programming languages is that exciting (and they are setting up a special reception so that visitors don’t have to go to the first floor to get a pass to attend a meeting on the ground floor).

2015 in the programming language standard’s world

Last Tuesday I was at the British Standards Institute for a meeting of IST/5, the programming languages committee.

My suggestion that the Cobol 2014 standard may be the last revision of that language appears to be coming true; there has been a steep decline in membership of the US Cobol committee (this is where all the work is done, with the rest of the world joining this committee or rubber stamping what comes out of it), and nobody has expressed interest in being involved in new work items.

Fortran appears to be going strong, with a revised standard planned for 2017.

In October C++ are rectifying the fact that they have not meet in Hawaii for three years. In fairness I ought to point out that the Fortran committee, when hosted by INCITS/PL22.3, regularly hold meetings in Las Vegas (I’m told its because the hotel rooms are cheap; Nevada is where US underground atom bomb tests were located and lots of super-computers executing programs written in Fortran were involved, or perhaps readers can think of an alternative explanation that does not invoke secret government organizations).

I found out that PL/1 is still an ISO Standard.

Work on the C and Ada standards continues.

Prolog has a new convenor, Ulrich Neumerkel. There was a meeting during April in Dresden, Germany but no minutes have been published. Did anybody attend?

ISO/IEC 23360-1:2006, the ISO version of the Linux Base Standard is almost 10 years behind the specification published by the organization who actually does the work. Some voices have expressed an interest in updating the ISO document. What does ISO’s version of the Linux Standard Base have to do with the committee responsible for programming languages?

Well, a long time ago in a galaxy far away, or the late 1980s in London, some people decided to set up a committee that specified O/S related library functions callable from C programs. SC22, programming languages, was the existing ISO committee having the closest fit with this new working group; initially it produced a specification that went under the name POSIX. Jump forward 15 years and Linux was the big POSIX success story (ok, the Linux people might see things differently) and dare I suggest that one of the motivations for creating ISO/IEC 23360-1:2006 might have been to bask in the reflected success of Linux. I understand the motivation of people involved in the standard’s process for wanting to published an update that reflects the current state of play (seriously out of date standards degrade the brand), but I don’t see why the Linux Foundation would be interested in going through the hassle of making this happen (unless they are having a mid-life crisis and are seeking approval of their work from an authority figure). Watch this blog for a 2016 status update.

Will programming languages now have to follow ISO fast-track rules?

A while back I wrote about how updated versions of ECMAscript (i.e., the Standard for Javascript) had twice been fast tracked to replace an existing ISO Standard, however the ISO rules require that once a document becomes one of its standards all future work be done using the ISO process (i.e., you only supposed to get the one original fast track and then you have to get at least half a dozen countries to say they will actively participate in ongoing work). Thirteen years after I asked why it was being allowed to happen (as I recall I only raised the issue because I thought I had misunderstood the rules, not because I had a burning desire to enforce them) the issue has suddenly sprung to life (we are talking Standard’s world ‘sudden’ here), with a question being raised at the last SC22 meeting and a more detailed one being prepared by BSI for the next meeting (they occur once per year).

The Elephant in the room here is ISO/IEC 29500:2008, not a programming language but Microsoft’s Office Open XML; there was quite a bit of fuss when this was fast tracked.

If the ISO rules on one-time only use of the fast track process was limited to programming languages I imagine the bureaucrats in Geneva would probably never get to hear about it (SC22 would probably conclude that there was not enough interest in the various documents outside of the submitting country to form an active ISO working group; so leave well alone).

ISO sells over 19,000 standards and has better things to do than spend time on the goings on in an unfashionable part of the galaxy, unless, that is, it has the potential to generate lots of fuss that undermines credibility.

Will Microsoft try to fast track an updated version of ISO 29500? I don’t even know if they are updating it. The possibility that ISO 29500 might be updated and submitted for fast track will make it hard for SC22 to agree to any future fast-track updates to existing ISO Standards it is responsible for.

The following is a list of documents that have been fast tracked to become an ISO Standard:

ECMAScript: ECMA-262 (1st edn) = ISO 16262:1998 ECMA-262 (3rd edn) = ISO 16262:1999 ECMA-262 (5th edn) = ISO 16262:2011 C#: ECMA-334 (2nd edn) = ISO 23270:2003 ECMA-334 (4th edn) = ISO 23270:2006 CLI: ECMA-335 (2nd edn) = ISO 23271:2003 ECMA-335 (6th edn) = ISO 23271:2012 ECMA standards fast-tracked to ISO and not yet revised: ECMA-149 PCTE part 1 = ISO 13719-1:1998 ECMA-158 PCTE part 2 = ISO 13719-2:1998 ECMA-162 PCTE part 3 = ISO 13719-3:1998 ECMA-230 PCTE IDL binding = ISO 13719-4:1998 ECMA-367 EIFFEL = ISO 25436:2006 ECMA-372 C++/CLI -> DIS 26926; failed DIS ballot and project cancelled Replaced rather than revised under JTC1 rules: CHILL (from CCITT): ISO standards 9496:1989, 9496:1995, 9496:1998, 9496:2003 MUMPS/M (from Mass Gen Hospital/ANSI): ISO standards 11756:1992, 11756:1999 Non-ECMA documents fast-tracked through ISO and not yet revised: FORTH (from FORTH Inc): ISO 15145:1997 JEFF (from J consortium): ISO 20970:2002 Ruby (from Japanese Industrial Standards Committee): ISO/IEC 30170:2012

Writing language standards is a cottage industry

In the beginning programming language standards were written by one country’s National Standards body (e.g., ANSI did C/Cobol/Fortran for the USA and BSI did Pascal for the UK) and other countries were free to write their own version, adopt the existing work or do nothing (I don’t know of any country writing their own version, a few countries sometimes stuck their own front page on an existing document and the majority did nothing; update 4 Dec 2012, thanks to David Muxworthy for pointing out that around 1974 the UK, US, Japan and ECMA were all independently developing a standard for BASIC, by 1982 this had evolved to just ANSI and ECMA).

The UK people who created the Pascal Standard wanted the rest of the world (i.e., the US) to adopt it and the way to do this was to have it adopted as an ISO Standard. The experience of making this happen convinced the folk at BSI that in future language standards should be produced as an international effort within ISO (those pesky Americans wanted changes made to the document before they would vote for it).

During the creation of the first C Standard various people from Europe joined the ANSI committee, X3J11, so they could take part. Initially the US members were not receptive to the European request for a mechanism to handle keyboards that did not contain certain characters (e.g., left/right square brackets) but responded promptly on hearing that those (pesky) Europeans planned to publish an ISO C Standard that would contain those changes to the ANSI Standard needed to support trigraphs; the published ANSI Standard included support for trigraphs. The C ANSI committee were very receptive to the idea of future work being done at the ISO level; Bill Plauger/Tom Plum did a lot of good work to ensure it happened.

The C++ language came along and long story short an ISO committee was set up to create an ISO Standard for it, then Java came along and the Java Study Group failed to become an ISO committee and then various nonspecific language committees happened.

A look at the SC22 web site shows that ISO Standards exist for Forth and ECMAscript (it has not yet been updated to include Ruby) with no corresponding ISO committees. What is going on?

One could be cynical and say that special interests are getting a document of their choosing accepted by ECMA and then abusing the ISO fast track procedure to sidestep the need to setup an international committee that has the authority to create a document of its choosing. The reality is that unless a language is very widely used by lots of people (e.g., in the top five or so most commonly used languages) there are unlikely to be enough people (or employers) willing and able to commit the time and money needed to be actively involved in an ISO Standard committee.

Once a documented has been fast tracked to become ISO Standard any updates to it are supposed to be carried out under ISO rules (i.e., an ISO committee). In practice this is not happening with ECMAscript which continues to be very active (I don’t know what is happening with Forth or how the Ruby people plan to handle any updates), holding bi-monthly meetings; over the years they have fast tracked two revisions to the original fast tracked document (the UK did raise the issue during balloting but nothing came of it, I don’t think anybody really cares).

Would moving the ECMAscript development work from ECMA to ISO make a worthwhile difference? There might be a few people out there who would attend an ISO meeting who are not currently attending ECMA meetings (to join ECMA companies with five or less employees pay an annual fee of 3,500 Swiss francs {about the same number of US dollars} and larger companies pay a lot more) but I suspect the number would not be large enough to make up for the extra hassle of running an ISO committee (e.g., longer ISO balloting timescales).

Production of programming language standards is really a cottage industry that relies on friends in high places (e.g., companies with an existing membership of ECMA or connections into the local country standards’ body) for them to appear on the international stage.

February 2012 news in the programming language standard’s world

Yesterday I was at the British Standards Institute for a meeting of the programming languages committee. Some highlights and commentary:

- The first Technical Corrigendum (bug fixes, 47 of them) for Fortran 2008 was approved.

- The Lisp Standard working group was shutdown, through long standing lack of people interested in taking part; this happened at the last SC22 meeting, the UK does not have such sole authority.

- WG14 (C Standard) has requested permission to start a new work item to create a new annex to the standard containing a Secure Coding Standard. Isn’t this the area of expertise of WG23 (Language vulnerabilities)? Well, yes; but when the US Department of Homeland Security is throwing money at cyber security increasing the number of standards’ groups working on the topic creates more billable hours for consultants.

- WG21 (C++ Standard) had 73 people at their five day meeting last week (ok, it was in Hawaii). Having just published a 1,300+ page Standard which no compiler yet comes close to implementing they are going full steam ahead creating new features for a revised standard they aim to publish in 2017. Does the “Hear about the upcoming features in C++” blogging/speaker circuit/consulting gravy train have that much life left in it? We will see.

The BSI building has new lifts (elevators in the US). To recap, lifts used to work by pressing a button to indicate a desire to change floors, a lift would arrive, once inside one or more people needed press buttons specifying destination floor(s). Now the destination floor has to be specified in advance, a lift arrives and by the time you have figured out there are no buttons to press on the inside of the lift the doors open at the desired floor. What programming language most closely mimics this new behavior?

Mimicking most languages of the last twenty years the ground floor is zero (I could not find any way to enter a G). This rules out a few languages, such as Fortran and R.

A lift might be thought of as a function that can be called to change floors. The floor has to be specified in advance and cannot be changed once in the lift, partial specialization of functions and also the lambda calculus springs to mind.

In a language I just invented:

// The lift specified a maximum of 8 people lift = function(p_1, p_2="", p_3="", p_4="", p_5="", p_6="", p_7="", p_8="") {...} // Meeting was on the fifth floor first_passenger_5th_floor = function lift(5); second_passenger_4th_floor = function first_passenger_fifth_floor(4); |

the body of the function second_passenger_4th_floor is a copy of the body of lift with all the instances of p_1 and p_2 replaced by the 5 and 4 respectively.

Few languages have this kind of functionality. The one that most obviously springs to mind is Lisp (partial specialization of function templates in C++ does not count because they are templates that are still in need of an instantiation). So the ghost of the Lisp working group lives on at BSI in their lifts.

C compiler validation is 21 today!

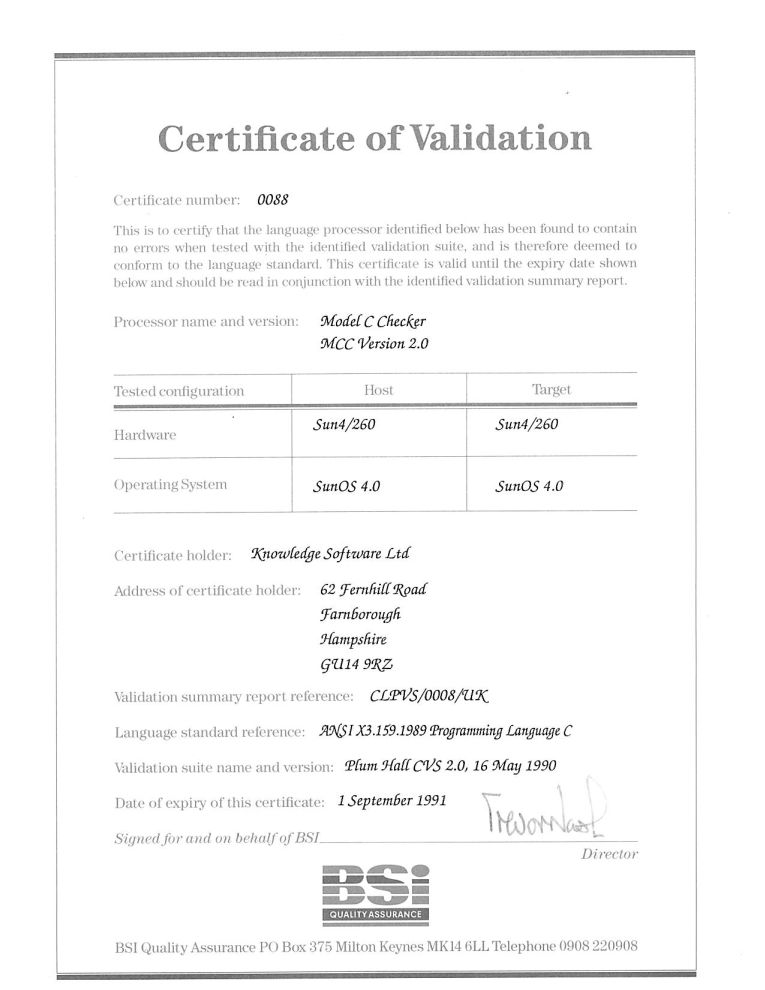

Today, 1 September 2011, is the 21th anniversary of the first formally validated C compilers. The three ‘equal first’ validated compilers were the Model Implementation C Checker from Knowledge Software, Topspeed C from JPI (run by the people who created Turbo Pascal) and the INMOS C compiler (derived from the Norcroft C compiler written by Alan Mycroft+others, the author of the longest response document seen during the review of the C89 draft standard).

Back in the day the British Standards Institution testing group run by John Souter were the world leaders in compiler validation and were very proactive in adding support for a new language. NIST, the equivalent US body, did not offer such a service until a few years later. Those companies in a position to have their compilers validated (i.e., the compiler passed the validation suite) were pressing BSI to be first; the ‘who is first’ issue was resolved by giving all certificates the same date (the actual validation process of a person from BSI, Neil Martin now Director of Test in the Winterop Team at Microsoft, turning up to ‘witness’ the compiler passing the tests happened several weeks earlier).

Testing C compilers was different from other language compilers in that sufficient demand existed to support commercial production and maintenance of test suites (the production of validation suites for previous language compilers had been government funded). After a review of the available test suites BSI chose to use the Plum Hall suite; after a similar review NIST chose to use the Perennial suite (I got involved in trying to figure out for NIST how well this suite covered the requirements contained in the C Standard).

For a while C compiler validation was big business (as in big fish, very small pond). But the compiler validation market is dependent on there being lots of compilers, which requires market fragmentation and to a lesser extent lots of different OSs and hardware platforms (each needing a separate validation). The 1990s saw market consolidation, gcc becoming good enough for commercial use and a shift of developer mind share to C++. Dwindling revenue resulted in BSI’s compiler validation group being shut down after a few years and NIST’s followed in 1998.

Is compiler validation relevant today? When the first C Standard was published a lot of compilers in common use had some significant behavioural differences compared to what the Standard specified. Over time these compilers have either disappeared or been upgraded (a potential customer once asked me the benefits I saw in them licensing the Knowledge Software front end and the reply to one of my responses, “you can tell your customers that the compiler is standard’s compliant”, was that this was not a benefit as they had been claiming this for years). Improvements in Intel’s x86 processor also had a hand in improving compiler Standard’s conformance; the various memory models used by the x86 processor was a huge headache for compiler writers whose products often behaved very differently under different memory models; the arrival of the Pentium with its flat 32-bit address space meant this issue disappeared over time.

These days I suspect that the major compilers targeting platforms where portability is expected (portability is often not a big expectation in the embedded world) are sufficiently compatible that developers are willing to overlook small differences with the Standard. Differences in third party libraries, GUIs and other frameworks have been the big headache for many years now.

Would the ‘platform portability’ compilers, that’s probably gcc, Microsoft, products using EDG’s front end, and perhaps llvm in the coming years, pass the latest version of the PlumHall and Perennial suites?

- The gcc team do not have access to either company’s suite. The gcc regression tests are a poor substitute for a proper compiler validation suite (even though they cost many thousands of dollars commercial compiler writers often buy both companies products because they are good value for money as a testing resource {the Fortran 78 validation suite source gives some idea of how much work is actually involved). I would expect gcc to fail some of the tests but have no idea how many or serious the failures would be.

- Microsoft have said they don’t have plans to support C99 (it took a lot of prodding to get them interested in formally validating against C90).

- I think the llvm team are in the same position as gcc, but perhaps somebody at Apple has access to one or more of the commercial suites (I don’t know).

- EDG are into standard’s conformance and I would expect them to pass both suites.

The certificate is printed on high quality, slightly yellow paper; the template wording is in a subdued gray ink while the customer information is in a very bold black ink. I don’t know whether this is to make life difficult for counterfeiters, but I could not get any half decent photographs and the color scanner had to be switched to black&white.

Validation was good for one year and I saw no worthwhile benefit in paying BSI £5,000 to renew for another year. Few people knew about the one year rule and I did not enlighten them. In the Ada compiler market the one year rule was a major problem, but lets leave that for another time.

Recent Comments