Archive

Array bound checking in C: the early products

Tools to support array bound checking in C have been around for almost as long as the language itself.

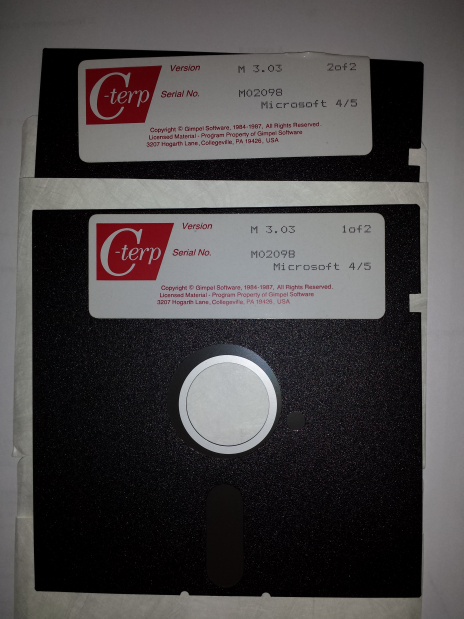

I recently came across product disks for C-terp; at 360k per 5 1/4 inch floppy, that is a C compiler, library and interpreter shipped on 720k of storage (the 3.5 inch floppies with 720k and then 1.44M came along later; Microsoft 4/5 is the version of MS-DOS supported). This was Gimpel Software’s first product in 1984.

The Model Implementation C checker work was done in the late 1980s and validated in 1991.

Purify from Pure Software was a well-known (at the time) checking tool in the Unix world, first available in 1991. There were a few other vendors producing tools on the back of Purify’s success.

Richard Jones (no relation) added bounds checking to gcc for his PhD. This work was done in 1995.

As a fan of bounds checking (finding bugs early saves lots of time) I was always on the lookout for C checking tools. I would be interested to hear about any bounds checking tools that predated Gimpel’s C-terp; as far as I know it was the first such commercially available tool.

Hedonism: The future economics of compiler development

In the past developers paid for compilers, then gcc came along (followed by llvm) and now a few companies pay money to have a bunch of people maintain/enhance/support a new cpu; developers get a free compiler. What will happen once the number of companies paying money shrinks below some critical value?

The current social structure has an authority figure (ISO committees for C and C++, individuals or companies for other languages) specifying the language, gcc/llvm people implementing the specification and everybody else going along for the ride.

Looking after an industrial strength compiler is hard work and requires lots of know-how; hobbyists have little chance of competing against those paid to work full time. But as the number of companies paying for support declines, the number of people working full-time on the compilers will shrink. Compiler support will become a part-time job or hobby.

What are the incentives for a bunch of people to get together and spend their own time maintaining a compiler? One very powerful incentive is being able to decide what new language features the compiler will support. Why spend several years going to ISO meetings arguing with everybody about whether the next version of the standard should support your beloved language construct, when it is quicker and easier to add it directly to the compiler (if somebody else wants their construct implemented, let them write the code)?

The C++ committee is populated by bored consultants looking for an outlet for their creative urges (the production of 1,600 page documents and six extension projects is driven by the 100+ people who attend meetings; a lot fewer people would produce a simpler C++). What is the incentive for those 100+ (many highly skilled) people to attend meetings, if the compilers are not going to support the specification they produce? Cut out the middle man (ISO) and organize to support direct implementation in the compiler (one downside is not getting to go to Hawaii).

The future of compiler development is groups of like-minded hedonists (in the sense of agreeing what features should be in a language) supporting a compiler for the bragging rights of having created/designed the language features used by hundreds of thousands of developers.

Average maintenance/development cost ratio is less than one

Part of the (incorrect) folklore of software engineering is that more money is spent on maintaining an application than was spent on the original development.

Bossavit’s The Leprechauns of Software Engineering does an excellent job of showing that the probable source of this folklore did not base their analysis on any cost data (I’m not going to add to an already unwarranted number of citations by listing the source).

I have some data, actually two data sets, each measuring a different part of the problem, i.e., 1) system lifetime and 2) maintenance/development costs. Both sets of measurements apply to IBM mainframe software, so a degree of relatedness can be claimed.

Analyzing this data suggests that the average maintenance/development cost ratio, for IBM applications, is around 0.81 (code+data). The data also provides a possible explanation for the existing folklore in terms of survivorship bias, i.e., most applications do not survive very long (and have a maintenance/development cost ratio much less than one), while a few survive a long time (and have a maintenance/development cost ratio much greater than one).

At any moment around 79% of applications currently being maintained will have a maintenance/development cost ratio greater than one, 68% a ratio greater than two and 51% a ratio greater than five.
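The survivorship effect can be illustrated with a small sketch. The lifetimes and the 10%-per-year maintenance rate below are invented for illustration and are not the IBM data; the snapshot calculation also assumes a steady state, in which the chance of seeing an application under maintenance is proportional to how long it lives.

```python
# Hypothetical portfolio: lifetimes (in years) of ten applications.
# These numbers are made up for illustration; they are not the IBM data.
lifetimes = [1, 1, 1, 2, 2, 3, 4, 10, 20, 30]

# Assumption: each year of maintenance costs 10% of the development cost.
ANNUAL_MAINTENANCE_RATE = 0.1

# Final maintenance/development cost ratio of each application.
ratios = [ANNUAL_MAINTENANCE_RATE * years for years in lifetimes]

# Average over every application ever built.
mean_ratio_all = sum(ratios) / len(ratios)

# Share of all applications whose final ratio exceeds one.
share_all = sum(r > 1 for r in ratios) / len(ratios)

# Steady-state snapshot: an application is under maintenance for `years`
# years, so the chance of seeing it at a random moment is proportional
# to its lifetime (length-biased sampling).
total_years = sum(lifetimes)
share_snapshot = sum(
    years for years, r in zip(lifetimes, ratios) if r > 1
) / total_years

print(f"mean ratio over all applications: {mean_ratio_all:.2f}")
print(f"share of all applications with ratio > 1: {share_all:.0%}")
print(f"share of applications under maintenance with ratio > 1: {share_snapshot:.0%}")
```

With these invented numbers the average over all applications is below one, yet most of the applications being maintained at any given moment are the long-lived ones whose final ratio exceeds one.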

Another possible cause of incorrect analysis is the fact we are dealing with ratios; the harmonic mean has to be used, not the arithmetic mean.
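A minimal sketch of how far the two means can diverge on skewed ratios. The four ratios are hypothetical (three short-lived applications and one long-lived one), chosen only to show the effect:

```python
from statistics import harmonic_mean, mean

# Hypothetical maintenance/development cost ratios; not measured data.
ratios = [0.2, 0.3, 0.5, 6.0]

# The arithmetic mean is dominated by the single large ratio.
arithmetic = mean(ratios)

# The harmonic mean is n divided by the sum of reciprocals, so the
# small ratios carry most of the weight.
harmonic = harmonic_mean(ratios)

print(f"arithmetic mean: {arithmetic:.2f}")
print(f"harmonic mean:   {harmonic:.2f}")
```

For this made-up sample the arithmetic mean is well above one while the harmonic mean is well below one, so the choice of mean alone can flip the conclusion.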

Existing industry practice of not investing in creating maintainable software probably has a better cost/benefit than the alternative because most software is not maintained for very long.

Early compiler history

Who wrote the early compilers, when did they do it and what were the languages compiled?

Answering these questions requires defining what we mean by ‘compiler’ and deciding what counts as a language.

Donald Knuth does a masterful job of covering the early development of programming languages. People were writing programs in high level languages well before the 1950s and Konrad Zuse is the forgotten pioneer from the 1940s (it did not help that Germany had just lost a war and people were not inclined to give Germans much public credit).

What is the distinction between a compiler and an assembler? Some early ‘high-level’ languages look distinctly low-level from our much later perspective; some assemblers support fancy subroutine and macro facilities that give them a high-level feel.

Glennie’s Autocode is from 1952 and, depending on where the compiler/assembler line is drawn, might be considered a contender for the first compiler. The team led by Grace Hopper produced A-0, a fancy link-loader, in 1952; they called it a compiler, but today we would not consider it to be one.

Talking of Grace Hopper, from a biography of her technical contributions she sounds like a person who could be technical but chose to be management.

Fortran and Cobol hog the limelight of early compiler history.

Work on the first Fortran compiler started in the summer of 1954 and was completed 2.5 years later, say the beginning of 1957. This gives it a very solid claim to being the first compiler (assuming Autocode and A-0 are not considered to be compilers).

Compilers were created for Algol 58 in 1958 (a long dead language implemented in Germany with the war still fresh in people’s minds; not a recipe for wide publicity) and 1960 (perhaps the first one-man-band implemented compiler; written by Knuth, an American, but still a long dead language). The 1958 implementation sounds like it has a good claim to being the second compiler after the Fortran compiler.

In December 1960 there were at least two Cobol compilers in existence (one of which was created by a team containing Grace Hopper in a management capacity). I think the glory attached to early Cobol compiler work is the result of having a female lead involved in the development team of one of these compilers. Why isn’t the other Cobol compiler ever mentioned? Why is the Algol 58 compiler implementation, which predates the Cobol compilers, never mentioned?

What were the early books on compiler writing?

“Algol 60 Implementation” by Russell and Randell (from 1964) still appears surprisingly modern.

Principles of Compiler Design, the “Dragon book”, came out in 1977 and was about the only easily obtainable compiler book for many years. The first half dealt with the theory of parser generators (the audience was CS undergraduates and parser generation used to be a very trendy topic with umpteen PhD theses written about it); the book morphed into Compilers: Principles, Techniques, and Tools. Old-timers will reminisce about how their copy of the book has a green dragon on the front, rather than the red dragon on later trendier (with less parser theory and more code generation) editions.

Collecting all of the publicly available source code

Collecting together all the software ever written is impossible, but collecting everything that can be found would create something very useful (since I have always been interested in source code analysis, my opinion is biased).

Collecting together source available on the internet is easy. Creating a copy of Github is one of the first actions of anybody with ambitions of collecting lots of source (back in the day it was collecting shareware on 5 1/4″, then 3 1/2″ floppy discs, then CDs and then DVDs).

The very hard to collect items “exist” on line-printer paper, punched cards, rolls of punched tape and mag tape. These are the items where serious collectors should concentrate their efforts (NASA lost a lot of Voyager data when magnetic particles fell off the plastic strips of tape reels in storage, because the adhesive had degraded).

The Internet Archive is doing a great job of collecting and making available ‘antique’ source code (old computer games is a popular genre; other collectors concentrate on being able to execute ROM images of games), but they are primarily US based.

Collecting the World’s source code requires collection organizations in every country. Collecting old code is a people intensive business and requires lots of local knowledge.

A new source code collection organization has recently been set up in France; Software Heritage currently aims to collect all software that is publicly available. So far they have done what everybody else does: made a copy of Github and a couple of the well-known source repos.

I hope this organization is not just the French government throwing money at another one-upmanship US vs. France project.

If those involved are serious about collecting source code, rather than enjoying the perks of a tax-funded show project, they will realise that lots of French-specific source code is dotted around the country, needing to be collected now (before the media decomposes and those who know how to read it die).
