Eye-tracking of developers reading code is now in start-up mode

Readability has always been the meaningless go-to attribute for designers of new languages and code restructuring techniques who needed a worthy-sounding benefit to tout.

Market researchers, being more interested in empirical data than arm-waving, have been long-time users of eye-tracking technology; gaze direction provides a direct link to where cognitive attention is being invested.

Over the last few years a small number of researchers have started measuring where software developers look when they read code. Analysing and interpreting data on eye movement while reading code is still in start-up mode. One group has started collecting data that others can use, the obligatory R packages (saccade, gazepath and itsadug) and Python library now exist, and the eye-movement in programming conference has its third meeting in November.

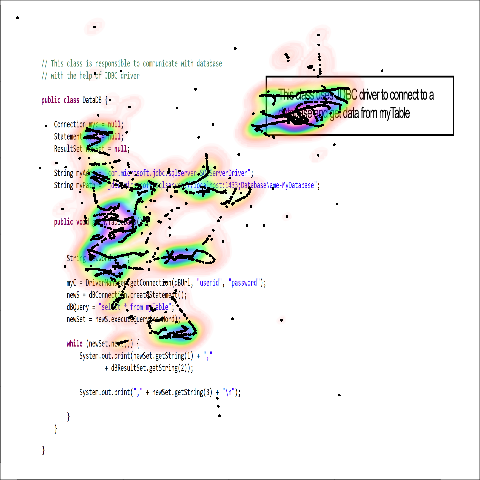

Apart from one tantalizing image (see above, code+data here, original research paper+data) my book should arrive too soon to say anything useful about code readability based on eye-tracking data.

It has taken several decades for researchers to create reasonably reliable models of attention and eye-movement for reading text. Code reading adds vertical eye-movement to the horizontal movements that occur when reading text; the models are probably going to be a lot more complicated. I discussed a few of the issues in my first book (the E-Z Reader model is still one of the top performers).
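To make the horizontal/vertical distinction concrete, here is a minimal sketch that labels each move between consecutive fixations as within-line (forward or backward) or between-line (up or down). Everything in it is an assumption made for illustration: the `(x, y)` pixel format, the function name `classify_moves`, the fixed `line_height_px`, and the sample data are all made up, and none of it comes from the packages mentioned above.

```python
from collections import Counter


def classify_moves(fixations, line_height_px=20.0):
    """Label each move between consecutive fixations.

    fixations: list of (x, y) screen coordinates in pixels (hypothetical format).
    line_height_px: assumed on-screen height of one line of code.
    """
    labels = []
    for (x0, y0), (x1, y1) in zip(fixations, fixations[1:]):
        # Vertical distance expressed as a whole number of code lines.
        line_delta = round((y1 - y0) / line_height_px)
        if line_delta == 0:
            # Same line: the move is horizontal, as when reading prose.
            labels.append("forward" if x1 >= x0 else "backward")
        elif line_delta > 0:
            labels.append("down")
        else:
            labels.append("up")
    return labels


# Made-up fixation sequence, for illustration only.
fixations = [(100, 40), (180, 42), (120, 41), (110, 61), (300, 100), (90, 20)]
labels = classify_moves(fixations)
counts = Counter(labels)
print(labels)
print(counts)
```

A model of prose reading mostly has to account for the forward and backward labels; a model of code reading also has to explain the up and down jumps, which is one reason to expect it to be more complicated.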

Accurately tracking eye motion during software development is technically difficult. Until recently obtaining the necessary accuracy required keeping the subject’s head fixed (achieved by having subjects clamp their teeth on a bite bar); somewhat impractical for developers wanting to view a large screen. Accurately tracking what developers are looking at requires tracking both head and eye motion. The necessary hardware is coming down in price, but still contains one too many zeroes for me to buy one to play with (I was given an Intel RealSense at a hackathon, now I just need the software…).

Next time somebody claims that so-and-so is more readable, ask them what eye-tracking research has to say on the subject.
